Text Summarization with LLM

Introduction

In my previous post, I discussed the summarization task in NLP, which is a very interesting topic. In this post, I implement the task using LangChain and HuggingFace. The post is organized as follows:

- Preliminary: I recap the summarization task and introduce LangChain and HuggingFace.

- Code Implementation: I walk through my implementation.

- Conclusion.

Preliminary

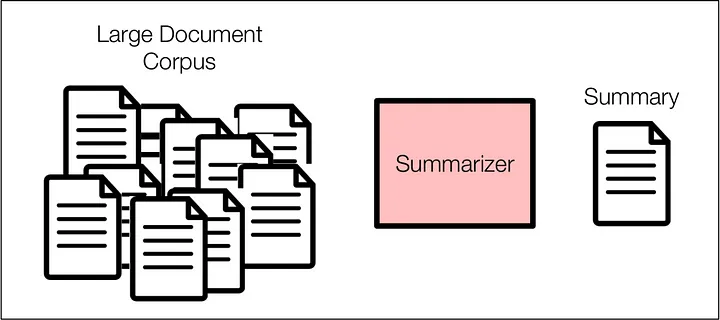

Summarization task in NLP

Summarization in NLP condenses long texts into brief versions, extracting important elements while retaining meaning.

There are two basic approaches: extractive, which selects existing sentences, and abstractive, which condenses and rephrases the content to produce a new summary. Beyond extracting keywords, summarization seeks to preserve the original text’s flow, coherence, and general structure.

Summaries of anything from news stories to research articles provide brief overviews that improve information accessibility and understanding.
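To make the extractive approach concrete, here is a minimal sketch that scores each sentence by the average frequency of its words and keeps the top ones in their original order. The function name `extractive_summary` and the scoring rule are my own illustrative choices, not a standard algorithm from any library.

```python
import re
from collections import Counter

def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Pick the highest-scoring sentences and return them in original order."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    # Word frequencies over the whole text (lowercased).
    freqs = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence: str) -> float:
        words = re.findall(r"[a-z']+", sentence.lower())
        # Average frequency, so long sentences are not favoured automatically.
        return sum(freqs[w] for w in words) / len(words) if words else 0.0

    # Rank sentence indices by score, keep the best ones, then restore order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in keep)

print(extractive_summary("Cats sleep a lot. Cats also purr when cats are happy. Dogs bark.", 1))
```

An abstractive summarizer, by contrast, generates new sentences, which is what the LLM does in the implementation later in this post.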

LangChain 🦜 🔗: a great tool for building LLM-powered applications.

Orchestrate your AI dreams! This open-source framework for Python and JavaScript simplifies building applications powered by large language models (LLMs). Think of it as modular building blocks: you combine prompts, data access, and LLMs in flexible chains to handle diverse tasks such as question answering, summarization, and even code analysis.

Notably, no coding wizardry is needed. More specifically, its user-friendly APIs and abstractions let you build and customize AI applications tailored to your needs, even without deep NLP expertise. Furthermore, LangChain supports experimentation and analysis: you can track performance metrics, compare different setups, and gain insight into how different LLMs handle data and solve problems.

From my perspective, LangChain unchains the potential of LLMs for real-world applications. From chatbots to research assistants, LangChain paves the way for innovative AI solutions.
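The "chain" idea can be sketched without any framework at all. The snippet below is a conceptual illustration in plain Python, not LangChain's actual API: `make_chain` and `fake_llm` are hypothetical names, and the fake model only reports how much text it received.

```python
from typing import Callable

def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Return a function that fills the prompt template and calls the model."""
    def run(text: str) -> str:
        prompt = template.format(text=text)  # step 1: build the prompt
        return llm(prompt)                   # step 2: send it to the model
    return run

def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: reports the prompt size instead of generating text."""
    return f"[model received {len(prompt)} characters]"

summarize = make_chain("Summarize the following text:\n{text}\nSUMMARY:", fake_llm)
print(summarize("LangChain composes prompts and models into chains."))
```

Swapping `fake_llm` for a real model call is the only change needed to turn this toy into a working summarizer, which is roughly the convenience LangChain packages up.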

HuggingFace🤗

It democratizes AI, one open-source model at a time. You can explore thousands of pre-trained models for diverse tasks, ready to use with user-friendly tools and backed by a vibrant community.

It offers a wide range of libraries that support not only NLP but also computer vision and audio processing. With them, you can build chatbots, generate text, translate languages, or analyze images without being an expert.

Code Implementation

To begin with, let’s set up the environment and import the necessary libraries.

!pip install -q -U transformers peft accelerate optimum
!pip install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu117/
!pip install langchain
!pip install einops
# The evaluate package is imported below, so install it as well.
!pip install evaluate

import time
import math

import numpy as np
import evaluate
from sklearn.metrics.pairwise import euclidean_distances, cosine_similarity

import torch
import transformers
from transformers import AutoTokenizer, AutoModelForCausalLM
from langchain import LLMChain, HuggingFacePipeline, PromptTemplate

Then, I define the application’s configuration. Here, I use a quantized version of Llama-2 published by TheBloke (I list several variants, which you can try one at a time). In the code block below, you can see the temperature hyperparameter, which controls how “creative” the LLM is when generating answers: the higher the temperature, the more varied the output. If the temperature is set to zero, the model always picks the highest-probability response, which will be nearly the same for each query.
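To see why temperature changes "creativity", here is a small sketch of temperature-scaled softmax in plain Python. The logits are made-up scores for three candidate tokens, and `softmax_with_temperature` is an illustrative helper, not a function from transformers.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; the zero case is plain greedy decoding")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 0.7, 1.3):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all probability mass sits on the top token, so sampling behaves nearly deterministically; at a higher temperature the distribution flattens and less likely tokens get picked more often.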

Model quantization is something of a magic trick for AI models: it stores the weights at lower numerical precision, which shrinks the model and usually speeds up inference. By using less memory and processing power, these leaner models are well suited to resource-constrained devices and real-time applications, typically at the cost of only a small loss in accuracy.
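As a rough illustration of the idea, the sketch below applies simple round-to-nearest 8-bit quantization to a handful of made-up weights. This is a deliberately simplified scheme and not the GPTQ or AWQ algorithm used by the checkpoints in this post; the helper names are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all weights are zero
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in q_weights]

weights = [0.82, -1.27, 0.05, 0.4]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each weight now needs one byte instead of four (for float32), and the reconstruction error stays within half a quantization step.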

config = {
    "model_id": [
        "TheBloke/Llama-2-7b-Chat-GPTQ",
        "TheBloke/Llama-2-7B-AWQ",
        "TheBloke/Llama-2-7B-GGUF",
        "TheBloke/Llama-2-7B-GGML",
        "TheBloke/Llama-2-7B-fp16",
        "TheBloke/Llama-2-7B-GPTQ",
        "TheBloke/llama-2-7B-Guanaco-QLoRA-AWQ",
    ],
    "hf_token": "...",
    "model": {
        "temperature": 0.7,  # [0, 0.7, 0.9, 1.1, 1.3] Testing iteratively.
        "max_length": 3000,
        "top_k": 10,
        "num_return": 1,
    },
}
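When testing the temperatures listed in the comment above, the zero case needs special handling: to my knowledge, transformers rejects a non-positive temperature when sampling is enabled, so greedy decoding is the usual way to get the "temperature 0" behaviour. The helper below is a hypothetical sketch of how the generation settings could be built for each value.

```python
def generation_kwargs(temperature, top_k=10):
    """Build keyword arguments for text generation at a given temperature."""
    if temperature == 0:
        # Greedy decoding: always pick the most likely token, no sampling.
        return {"do_sample": False}
    return {"do_sample": True, "temperature": temperature, "top_k": top_k}

for t in [0, 0.7, 0.9, 1.1, 1.3]:
    print(t, generation_kwargs(t))
```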

After that, I load the model and the tokenizer from HuggingFace with a helper function.

# Defines a function to call the number of parameters of a model.
def call_parameter(model):
    pytorch_total_params = sum(p.numel() for p in model.parameters())
    trainable_params = sum(p.numel() for p in model.parameters() if p.requires_grad)
    untrainable_params = pytorch_total_params - trainable_params
    print(f'Model {model.__class__.__name__} has {pytorch_total_params} parameters in total\n'
          f'Trainable parameters: {trainable_params}\nUntrainable parameters: {untrainable_params}')
    return pytorch_total_params

# ===== Start calling the model and the tokenizer.
def generate_model(model_id, config):
    print(f"Setting up model {model_id}")
    model = AutoModelForCausalLM.from_pretrained(model_id, use_safetensors=True,
                                                 device_map='auto', trust_remote_code=True)
    tokenizer = AutoTokenizer.from_pretrained(model_id,
                                              device_map='auto', trust_remote_code=True)
    return model, tokenizer

model, tokenizer = generate_model(config['model_id'][0], config)
no_params = call_parameter(model)
print("====="*5)
print(f"Model {config['model_id'][0]} has {no_params} parameters.")

>>> """Model LlamaForCausalLM has 262410240 parameters in total
Trainable parameters: 262410240
Untrainable parameters: 0
=========================
Model TheBloke/Llama-2-7b-Chat-GPTQ has 262410240 parameters."""
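The reported count is far below 7 billion, and a quick calculation suggests why. My reading (an assumption, not something the output itself states) is that the GPTQ-quantized linear layers keep their packed weights as buffers rather than parameters, so `model.parameters()` only sees the embeddings, the output head, and the normalization layers. Using the published Llama-2-7B sizes, the arithmetic matches exactly:

```python
# Published Llama-2-7B architecture sizes.
vocab_size = 32_000
hidden_size = 4_096
num_layers = 32

embedding = vocab_size * hidden_size        # input token embeddings
lm_head = vocab_size * hidden_size          # output projection
norms = (2 * num_layers + 1) * hidden_size  # two RMSNorms per layer plus the final one

counted = embedding + lm_head + norms
print(counted)  # 262410240
```

So the number printed above is not the full model size; it only covers the unquantized parts of the network.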

Next, I create a generator built on transformers.pipeline, a widely used HuggingFace API. I wrap the pipeline in LangChain’s HuggingFacePipeline and combine it with a prompt template so that it can perform the summarization task.

class Generator:
    def __init__(self, config, agent, template):
        self.agent = agent
        pipeline = transformers.pipeline(
            "text-generation",
            model=self.agent.model,
            tokenizer=self.agent.tokenizer,
            torch_dtype=torch.bfloat16,
            trust_remote_code=True,
            device_map="auto",
            max_length=config['model']['max_length'],
            do_sample=True,
            # Also pass the temperature here so that it reaches generation.
            temperature=config['model']['temperature'],
            top_k=config['model']['top_k'],
            num_return_sequences=config['model']['num_return'],
            # Use the agent's own tokenizer instead of a global variable.
            pad_token_id=self.agent.tokenizer.eos_token_id,
        )
        llm = HuggingFacePipeline(pipeline=pipeline, model_kwargs={'temperature': config['model']['temperature']})
        prompt = PromptTemplate(template=template, input_variables=["text"])
        self.llm_chain = LLMChain(prompt=prompt, llm=llm)

    def generate(self, text):
        result = self.llm_chain.invoke(text)
        return result

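# Defining template
template = """
Write a summary of the following text delimited by triple backticks.
Return your response which covers the key points of the text.
```{text}```
SUMMARY:
"""

# Defining an agent
# The Agent class is not shown in the original post; this minimal container is
# an assumed definition exposing the two attributes that Generator reads.
class Agent:
    def __init__(self, model, tokenizer):
        self.model = model
        self.tokenizer = tokenizer

agent = Agent(model, tokenizer)
llm_agent = Generator(config, agent, template)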

After setting up the necessary components, you can perform the task.

text = """
PUT YOUR CONTENT HERE!!!
"""

llm_agent.generate(text)
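Depending on the library versions, the returned value may be a dictionary and may echo the prompt before the generated text. The helper below is a hedged sketch for pulling out just the summary; `extract_summary` is a hypothetical name, and the `"text"` key is an assumption about the chain's output format.

```python
def extract_summary(result, marker="SUMMARY:"):
    """Return only the generated summary from a chain result."""
    # The chain may return a dict (assumed key: "text") or a plain string.
    output = result.get("text", "") if isinstance(result, dict) else str(result)
    # If the prompt is echoed back, keep only what follows the last marker.
    if marker in output:
        output = output.rsplit(marker, 1)[1]
    return output.strip()

# Example with a made-up result that echoes the prompt.
print(extract_summary({"text": "Write a summary ... SUMMARY:\n A short summary. "}))
```

With the objects defined above, this would be called as `extract_summary(llm_agent.generate(text))`.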

Conclusion

Summarization has become a well-known task and is widely regarded as a game changer in the NLP field. With the help of LangChain and HuggingFace, implementing it becomes easier and more accessible.

Thank you for reading this article; I hope it added something to your knowledge bank! Just before you leave:

👉 Be sure to click the like button and follow me. It would be a great motivation for me.

👉 The implementation is available on Colab.
