Understanding "TinyLlama: An Open-Source Small Language Model" 🦙

TinyLlama is a compact 1.1B-parameter language model pre-trained on roughly 1 trillion tokens. Built on Llama 2, it takes advantage of advances from the open-source community, such as FlashAttention, to improve computational efficiency. Despite its small size, TinyLlama outperforms existing open-source models of comparable size.

Introduction

From what I have seen, the trend in natural language processing (NLP) is to scale language models up. This trend is driven by the fact that large language models (LLMs) pre-trained on large text corpora have succeeded on a wide range of tasks. However, for a model to be widely adopted and deployed (especially on hardware devices), it needs to be small and lightweight while keeping reasonable accuracy.

Two observations about the scaling laws presented in [1] motivate training a smaller model on a larger dataset:

- Smaller models can perform as well as larger ones when trained on more data [3].

- Current scaling laws may not predict results accurately when smaller models are trained for much longer [4].

TinyLlama is motivated by these findings and focuses on studying how smaller models behave when trained on significantly more tokens than the scaling laws suggest. It is a 1.1-billion-parameter language model pre-trained on roughly 1 trillion tokens for about three epochs. Built on Llama 2, it takes advantage of open-source improvements such as FlashAttention to improve computational efficiency. Despite its modest size, it performs better than other open-source models of comparable size.

Contributions

- Introduces TinyLlama, a small-scale, open-source language model.

- To increase openness in the open-source LLM pre-training community, the authors release everything needed to reproduce the work, including the pre-training code, intermediate model checkpoints, and the data processing pipeline.

- TinyLlama's small architecture and promising performance make it well suited to mobile applications and to experimenting with new language-model ideas.

The TinyLlama source code is available here: https://github.com/jzhang38/TinyLlama

Experiments

Dataset

TinyLlama aims to make pre-training efficient and reproducible. The authors pre-train on a mix of natural language data and code data. The datasets they use are:

- SlimPajama: this dataset was created by cleaning and deduplicating its predecessor, RedPajama. The original RedPajama corpus is an open-source research effort to reproduce Llama's pre-training data and contains more than 1.2 trillion tokens.

- Starcoderdata: this dataset contains about 250 billion tokens across 86 programming languages. Besides code, it also includes GitHub issues and text-code pairs that involve natural language.

After combining these two corpora, the training set contains roughly 950 billion tokens for pre-training. TinyLlama is trained on these tokens for about three epochs. During training, the authors sample the natural language data so that the ratio of natural language data to code data is about 7:3.
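The 7:3 mixing ratio described above can be pictured as weighted sampling over the two sources. The following is only a minimal sketch using Python's standard library; the function name `sample_sources`, the source labels, and the sample count are hypothetical and are not taken from the authors' data pipeline.

```python
import random


def sample_sources(num_samples, nl_weight=0.7, code_weight=0.3, seed=0):
    """Pick a data source for each training sample with a 7:3 weighting.

    Hypothetical helper: the real pipeline works on tokenized shards,
    not on source labels like these.
    """
    rng = random.Random(seed)
    return rng.choices(
        ["slimpajama", "starcoderdata"],
        weights=[nl_weight, code_weight],
        k=num_samples,
    )


picks = sample_sources(10_000)
nl_share = picks.count("slimpajama") / len(picks)
print(f"natural-language share: {nl_share:.2f}")
```

Over many draws, the share of natural-language samples settles close to 0.7, which is the behaviour the ratio is meant to express.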

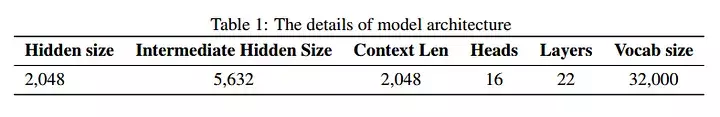

Model architecture

TinyLlama adopts the same model architecture as Llama 2, namely the Transformer architecture.

Specifically, it uses:

- Positional embedding: RoPE (Rotary Positional Embedding) is used to inject positional information.

- RMSNorm: to improve training stability, pre-normalization is applied, which normalizes the input before each Transformer sub-layer.

- SwiGLU: the activation function combines Swish with a Gated Linear Unit (GLU), which is where the name SwiGLU comes from.

- Grouped-query attention: used to reduce memory bandwidth overhead and speed up inference. More specifically, the authors report 32 query attention heads and 4 groups of key-value heads. With this technique, TinyLlama shares key and value representations across multiple heads while maintaining strong performance.
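Three of the components listed above are simple enough to sketch in plain Python. This is an illustrative approximation only, not the authors' implementation: the function names are hypothetical, the `eps` default is an assumed value, and real models apply these operations to tensors, with `gate` and `up` coming from two learned linear projections of the same input.

```python
import math


def rms_norm(x, weight, eps=1e-5):
    """RMSNorm: divide by the root mean square of x, then scale by a learned weight."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def silu(v):
    """Swish / SiLU activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def swiglu(gate, up):
    """SwiGLU gating: Swish(gate) multiplied element-wise by up."""
    return [silu(g) * u for g, u in zip(gate, up)]


def kv_head_for_query_head(q_head, n_query_heads=32, n_kv_heads=4):
    """Grouped-query attention: map a query head to the key-value head it shares."""
    group_size = n_query_heads // n_kv_heads
    return q_head // group_size


normed = rms_norm([3.0, 4.0], weight=[1.0, 1.0], eps=0.0)
gated = swiglu([0.0, 1.0], [5.0, 2.0])
mapping = [kv_head_for_query_head(h) for h in range(32)]
print(mapping)
```

With 32 query heads and 4 key-value groups, each key-value head serves 8 query heads, so only 4 sets of keys and values need to be stored per layer instead of 32.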

Training process

The authors apply several techniques to speed up training:

- The authors' codebase integrates Fully Sharded Data Parallel (FSDP) for efficient multi-GPU and multi-node training. Spreading the work across multiple compute nodes improves training speed and efficiency.

- The authors use FlashAttention to increase computational throughput.

The codebase is built on lit-gpt. During pre-training, the model is trained with an auto-regressive language modeling objective. More specifically, the authors use the AdamW optimizer, consistent with Llama 2's settings. They also use a cosine learning rate schedule with a maximum learning rate of 4.0 × 10⁻⁴ and a minimum of 4.0 × 10⁻⁵. They use 2,000 warmup steps to help optimization and set the batch size to 2 million tokens. They set weight decay to 0.1 and use a gradient clipping threshold of 1.0 to keep gradient values under control.
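The learning rate schedule described above can be sketched as follows. The maximum, minimum, and warmup values come from the text; the linear shape of the warmup and the `TOTAL_STEPS` value are assumptions made only for illustration, and the function name `learning_rate` is hypothetical.

```python
import math

MAX_LR = 4.0e-4        # maximum learning rate (from the text)
MIN_LR = 4.0e-5        # minimum learning rate (from the text)
WARMUP_STEPS = 2_000   # warmup steps (from the text)


def learning_rate(step, total_steps):
    """Linear warmup (assumed) followed by cosine decay from MAX_LR to MIN_LR."""
    if step < WARMUP_STEPS:
        return MAX_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / max(1, total_steps - WARMUP_STEPS)
    progress = min(progress, 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return MIN_LR + (MAX_LR - MIN_LR) * cosine


TOTAL_STEPS = 100_000  # hypothetical value, chosen only for this example
for step in (0, 1_000, 2_000, 51_000, 100_000):
    print(step, f"{learning_rate(step, TOTAL_STEPS):.2e}")
```

The rate climbs to its peak at the end of warmup and then decays smoothly toward the minimum, never dropping below it.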

Implementation

The nice thing about small models is that they are small enough to deploy on a cloud service and do not require a GPU for inference. In this post, I only use TinyLlama as a chatbot and demonstrate it in a Colab notebook. I do, however, hope to try more creative tasks with this interesting model.

To begin, we install and import the libraries our code needs. We use TinyLlama/TinyLlama-1.1B-Chat-v1.0, the latest TinyLlama checkpoint on Hugging Face at the time of writing.

# Install libraries

!pip install git+https://github.com/huggingface/transformers

!pip install -q -U accelerate

# Import libraries

import torch

import transformers

from transformers import AutoModelForCausalLM, AutoTokenizer

from transformers import pipeline

# Call out model name

model_id = 'TinyLlama/TinyLlama-1.1B-Chat-v1.0'

Hugging Face provides chat templates for chat models, and I find them user-friendly. There are two ways to use them:

- Applying the template step by step.

- Applying the template through a pipeline.

Step by step

First, we download the model and its tokenizer from Hugging Face with the following lines of code:

model = AutoModelForCausalLM.from_pretrained(model_id)

tokenizer = AutoTokenizer.from_pretrained(model_id)

Next, we define a role for the model. In my view, this step is like giving the model an identity, which makes it feel more "alive".

# Check the tokenizer's chat template to format each message - see https://huggingface.co/docs/transformers/main/en/chat_templating

messages = [

{

"role": "system",

"content": "You are a content creator who is in love with technology that always repond as a geek style.",

},

{"role": "user", "content": "How to increase views for my content?"},

]

Next, pass the messages to the apply_chat_template() method. Once that is done, the input is ready to use. When the chat template output is used as input for generation, you should also set add_generation_prompt=True so that the prompt ends with the tokens that mark the start of the assistant's reply.
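Before calling the real tokenizer, it can help to see the kind of string a chat template produces. The sketch below is a hand-written approximation of the layout TinyLlama's chat checkpoint uses; `render_chat` is a hypothetical helper, not part of transformers, and the tokenizer's own template remains the authoritative version.

```python
def render_chat(messages, add_generation_prompt=True, eos_token="</s>"):
    """Approximate the chat layout: a role tag, the content, then an end-of-sequence token."""
    parts = []
    for message in messages:
        parts.append(f"<|{message['role']}|>\n{message['content']}{eos_token}\n")
    if add_generation_prompt:
        # Leave the prompt open at the start of the assistant's turn.
        parts.append("<|assistant|>\n")
    return "".join(parts)


example_messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "How to increase views for my content?"},
]
rendered = render_chat(example_messages)
print(rendered)
```

Without `add_generation_prompt`, the string would end after the user's turn, and the model would have no cue that an assistant reply is expected. Compare this with the decoded output of the tokenizer call that follows.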

tokenized_chat = tokenizer.apply_chat_template(messages, tokenize=True,

add_generation_prompt=True,

return_tensors="pt")

print(tokenizer.decode(tokenized_chat[0]))

"""

<|system|>

You are a content creator who is in love with technology that always repond as a geek style.</s>

<|user|>

How to increase views for my content?</s>

<|assistant|>

"""

Now that the input is properly prepared for TinyLlama, we can use the model to generate an answer to the user's question.

outputs = model.generate(tokenized_chat, max_new_tokens=128,

do_sample=True,

temperature=0.7,

top_k=50,

top_p=0.95)

print(tokenizer.decode(outputs[0]))

"""

<|system|>

You are a content creator who is in love with technology that always repond as a geek style.</s>

<|user|>

How to increase views for my content?</s>

<|assistant|>

Here are some ways to increase views for your content:

1. Create high-quality content: Make sure your content is engaging, informative, and relevant to your target audience.

2. Optimize your content for SEO: Ensure your content is optimized for search engines by including relevant keywords in your title, description, and content.

3. Use social media: Share your content on social media platforms like Twitter, Facebook, and Instagram to reach a wider audience.

4. Promote your content: Leverage social media platforms to promote your content and drive traffic to your website.

"""

Using a pipeline

Hugging Face's text-generation pipeline accepts the formatted chat as input, which makes building a chat model very easy.

pipe = pipeline("text-generation",

model=model,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

device_map="auto")

messages = [

{

"role": "system",

"content": "You are a content creator who is in love with technology that always repond as a geek style.",

},

{"role": "user", "content": "How to increase views for my content?"},

]

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

outputs = pipe(prompt, max_new_tokens=128,

do_sample=True,

temperature=0.7,

top_k=50,

top_p=0.95)

print(outputs[0]["generated_text"])

"""

<|system|>

You are a content creator who is in love with technology that always repond as a geek style.</s>

<|user|>

How to increase views for my content?</s>

<|assistant|>

Here are some tips to increase views for your content:

1. Optimize your content: Make sure your content is optimized for search engines. Use descriptive and relevant keywords, use headings and subheadings to break up your content, and add meta descriptions to your articles.

2. Share your content on social media: Share your content on your social media channels, including Facebook, Twitter, LinkedIn, and Instagram. This will help increase visibility and reach your target audience.

3. Use social media advertising: Use social media advertising to reach a wider audience. Platforms like Facebook

"""

Note: the outputs of the two runs differ noticeably. This is not a bug. Because do_sample=True, the model samples each next token at random, and the temperature (0.7 here) controls how much randomness is allowed, so every interaction can produce a different, more creative answer.
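To make the note above concrete, here is a simplified pure-Python sketch of how temperature, top-k, and top-p interact when picking one token. It is not the transformers implementation; `sample_token` and the toy logits are hypothetical and serve only to show why sampled runs differ.

```python
import math
import random


def sample_token(logits, temperature=0.7, top_k=50, top_p=0.95, rng=random):
    """Sample one token index using temperature, top-k, and top-p filtering."""
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top-k: keep only the k most likely tokens.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    top_total = sum(probs[i] for i in ranked)

    # Top-p: keep the smallest prefix whose renormalized mass reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i] / top_total
        if cumulative >= top_p:
            break

    # Draw from the surviving tokens in proportion to their probabilities.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


toy_logits = [2.0, 1.5, 0.5, -1.0]
draws = [sample_token(toy_logits, rng=random.Random(seed)) for seed in range(20)]
greedy = [sample_token(toy_logits, top_k=1, rng=random.Random(seed)) for seed in range(20)]
print("sampled:", draws)
print("top_k=1:", greedy)
```

With sampling, different random states pick different tokens, while restricting the choice to a single candidate (top_k=1) always returns the most likely token. Setting do_sample=False in generate() has a similar effect if reproducible output is needed.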

References

- Zhang et al., "TinyLlama: An Open-Source Small Language Model". URL: https://arxiv.org/abs/2401.02385

- Jordan Hoffmann et al., "Training Compute-Optimal Large Language Models". URL: https://arxiv.org/abs/2203.15556

- Thaddée, Y. T., "Chinchilla's death". URL: https://espadrine.github.io/blog/posts/chinchilla-s-death.html

- Hugging Face, "Templates for chat models". URL: https://huggingface.co/docs/transformers/main/en/chat_templating
