Deep Learning

Here is my situation: I have an audio dataset. I extracted MFCC features from it and trained a model on them.

This is how I train the model:

```python
import IPython.display as ipd
from scipy.io import wavfile
import os
import pandas as pd
import librosa
import glob
import librosa.display
import matplotlib.pyplot as plt
import random
import numpy as np
import time
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten
from keras.layers import Convolution2D, MaxPooling2D
from keras.optimizers import Adam
from sklearn import metrics
from sklearn.preprocessing import LabelEncoder
from keras.utils import np_utils

# Read the data
temp1 = pd.read_csv('detached1.csv')
y1 = np.array(temp1.label.tolist())
X1 = np.array(temp1.feature.tolist())
lb = LabelEncoder()
y1 = np_utils.to_categorical(lb.fit_transform(y1))

# Train the model
num_labels = y1.shape[1]
model = Sequential()
model.add(Dense(256, input_shape=(40,)))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(256))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(num_labels))
model.add(Activation('softmax'))
model.compile(loss='categorical_crossentropy', metrics=['accuracy'], optimizer='adam')
model.fit(X1, y1, batch_size=32, epochs=200, validation_split=0.1, shuffle=True)
```

and I get this error:

```text
Using TensorFlow backend.
Traceback (most recent call last):
  File ".\train.py", line 119, in <module>
    model.fit(X1, y1, batch_size=32, epochs=200, validation_split=0.1, shuffle=True)
  File "C:\Python36\lib\site-packages\keras\engine\training.py", line 950, in fit
    batch_size=batch_size)
  File "C:\Python36\lib\site-packages\keras\engine\training.py", line 749, in _standardize_user_data
    exception_prefix='input')
  File "C:\Python36\lib\site-packages\keras\engine\training_utils.py", line 137, in standardize_input_data
    str(data_shape))
ValueError: Error when checking input: expected dense_1_input to have shape (40,) but got array with shape (1,)
```

Here is the dataset of MFCC features I extracted, one row per labeled sample:

feature,label

"[-4.27537437e+02 1.84112623e+01 -5.08398999e+01 5.31637161e+00

-2.10733792e+01 -1.12434670e+01 -1.80090488e+01 -5.75498212e+00

-6.93904306e-01 -3.86425538e-01 -1.34887390e+01 -4.86926960e+00

7.08283909e+00 2.14888911e+01 4.27220821e+00 2.68281675e+00

-7.48988856e+00 -4.83437475e+00 -4.97837031e+00 4.75456743e+00

3.78830787e+00 2.42193371e+00 -5.37803284e-01 1.91941476e+00

-1.30461991e+00 -5.38917506e+00 2.95554388e+00 7.73115650e+00

7.86978456e+00 6.78713944e+00 4.96348127e+00 -5.65352412e+00

-8.27031422e+00 -2.86780917e-01 2.48317310e+00 -2.81628395e+00

-7.71405737e+00 -2.17011549e+00 1.39035042e+00 5.37204073e+00]",Hi-hat

"[-3.38268402e+02 9.04193385e+01 -6.23142458e+01 1.51335214e+01

-1.79282013e+01 -1.14170883e+01 -1.44048668e+01 -7.91434938e+00

-2.60386278e+01 -1.33425042e+01 -1.95374448e+01 -7.54015238e+00

-9.25282725e+00 -1.42761031e+01 -8.95404860e+00 -1.19382583e+01

-1.71928449e+00 -5.69528325e+00 -3.24685922e+00 -5.55717358e-01

-2.90710922e+00 -3.16496626e-01 2.31073633e+00 7.50894389e+00

8.43927660e+00 1.55867110e+00 -2.81710750e-01 7.12674402e-01

5.41010774e+00 8.03800790e+00 8.17677948e+00 5.93762669e+00

4.77439264e+00 9.63560502e+00 1.16040133e+01 1.08942222e+01

8.61317843e+00 5.05467430e+00 5.23978546e-01 -5.62048446e+00]",Saxophone

"[-6.24420361e+02 1.05825675e+02 1.33219059e+00 8.52950436e+00

-7.24284650e+00 9.61647224e+00 -1.01478913e+01 1.54396490e+01

-5.98731125e+00 1.84971132e+01 8.43774569e+00 1.68807995e+01

6.56443439e+00 1.19779162e+01 -6.25981335e+00 9.84080965e+00

-5.64382929e+00 2.58264885e+00 -2.00159095e+00 7.13945232e+00

6.38080413e+00 2.79037469e+00 4.53217316e+00 3.97428187e+00

1.43712071e+00 3.41697916e+00 -1.55604338e+00 -1.93967453e+00

-5.66032483e+00 -4.18978478e+00 -1.28271250e+00 -1.14015339e+00

-1.31668160e+00 5.41810899e-01 -2.46240243e+00 1.39396516e+00

-9.86597523e-02 -4.03033817e+00 -4.41016969e+00 -1.98476223e+00]",Trumpet

"[-764.52938314 8.71873263 -6.43843907 -15.01001293 -11.87936678

4.85713407 14.52481245 16.67583444 0.828503 -13.2422332

-8.63103285 -2.09927411 12.74072178 17.04830133 3.82904079

-4.54364402 -12.89317423 -2.72160362 4.6501101 13.58426343

12.71656223 -3.82231561 -9.36074789 -4.471898 0.80438819

11.11886213 9.47849708 5.23002199 -6.7693081 -9.3544149

1.98950657 4.5134517 11.00089496 5.36468628 -2.9164897

-4.27201551 -3.70712954 4.4849342 6.91849163 5.93127321]",Glockenspiel

"[-317.20211467 94.64278976 -29.87401214 24.54868907 -6.09035615

-0.59327219 -6.94212706 20.30568222 -7.34163477 13.18906205

1.63050593 4.11504883 -8.83981319 -9.26372591 -4.08433343

0.7372643 -3.76954889 0.47261775 -6.12578635 -2.8851219

5.83740187 -2.93667644 -10.85349448 -17.17998129 -9.75256509

2.82462774 9.73483052 35.35451003 43.11077942 24.04688464

1.16382936 -15.37443287 -13.61991705 -0.33005041 17.23820257

6.06095399 -3.37540842 -12.25133004 -1.37950356 11.89579574]",Cello

"[-3.85405043e+02 1.82486473e+02 -2.33892432e+00 3.33028570e+01

1.81593151e+01 -9.75835474e-01 -6.42208460e+00 3.98433223e-02

2.95680088e+00 -1.12593921e+00 -2.26398369e+00 -2.32506312e+00

-2.47300684e+01 -9.01468486e-01 -7.79015057e+00 -4.05737105e+00

-1.14309429e+01 -1.89083919e+01 -1.25810084e+01 7.34797983e-01

2.68597806e+00 1.48029000e+00 -4.17226495e+00 -9.31283741e+00

-1.85426023e+00 -6.49108933e+00 1.14746636e+00 -2.65551034e+00

-8.37654899e+00 -3.26042608e+00 -1.94110840e+00 -8.57032086e+00

-2.65129743e+00 -1.32203655e+01 -1.84632703e+01 -8.26353278e+00

-1.88503566e+00 -8.35524375e+00 -1.62149390e+01 -1.82253732e+00]",Cello

"[-5.24920427e+02 5.94717202e+01 5.38309772e+00 1.26111679e+01

2.67003759e+00 8.02280542e+00 1.61448988e+00 6.37815119e+00

9.59449536e-01 6.12097763e+00 -2.49745585e-01 3.34996805e+00

-7.38250253e-01 1.50706689e+00 -2.10623382e-01 1.81277624e+00

-8.95322039e-01 3.65742001e+00 -6.42121196e-01 2.33773064e-01

1.05758933e+00 1.10403540e+00 9.32153897e-01 1.19968231e-01

2.08067929e+00 9.60565473e-02 7.88911438e-01 2.47309143e+00

3.21383146e+00 9.77452626e-02 -9.99862741e-02 5.02418510e-01

-3.06560278e-01 -4.77943679e-01 -1.30247224e+00 -1.57172712e+00

-3.50584169e+00 -3.74525384e+00 -1.26045936e+00 -2.23543676e+00]",Knock

"[-280.47011806 87.04624775 1.51867678 28.7544023 8.51132611

18.14832172 0.52928007 11.7828036 3.75681467 5.85570039

-3.73229661 -9.33423393 -9.80057998 -1.44813319 -9.81222806

-2.71234692 -7.2027108 -1.04184486 -4.2185896 -0.66948758

-5.41804403 -5.20026059 -4.9516565 -2.83758151 -3.87877157

-0.90021028 1.58843783 -0.96575431 -2.81714288 -1.31403267

-3.04378508 -4.39345396 -4.46993251 -4.4497167 -3.17821052

-4.55557494 -6.55964337 -3.58372651 -3.26926145 -3.99807356]",Gunshot_or_gunfire

"[-455.31825766 24.64795147 -59.7479186 6.57805275 -7.34159473

-0.75412168 -9.78392258 -6.14321207 -22.97267742 -11.33437337

-10.24463331 13.61014451 22.3439737 38.85788626 45.62329334

32.3592885 6.6244562 -13.46206869 -4.15417543 -0.56052938

4.28166291 -19.09338248 -32.89559066 -11.32265161 25.509636

22.46449093 3.71999159 18.30566019 39.33050818 27.53211081

-13.85470948 -16.00923342 8.10740382 7.70651575 -3.56247767

2.3622675 -3.03281754 -24.13676426 -2.25025972 31.24887203]",Clarinet

"[-4.16342246e+02 2.39453186e+01 8.25088021e+00 1.77851446e+01

-1.66942222e+01 2.56676681e+01 -1.37727697e+01 1.78291707e+01

-8.90931038e+00 1.61025820e+01 -9.29215844e+00 7.44784387e+00

-1.52496814e+01 8.44466390e+00 -1.13882392e+01 4.84605317e+00

-8.73099370e+00 6.03583024e+00 -8.63527381e+00 4.14996525e+00

-9.21384412e+00 -2.13100241e+00 -1.00770288e+01 -1.97386608e+00

-7.85499989e+00 -2.73425950e-01 -4.74793935e+00 -2.76770417e-01

-7.36556228e+00 -3.21462230e+00 -5.16336007e+00 -1.72343303e+00

-4.85442690e+00 -2.04850630e+00 -2.97923671e+00 5.15375491e-01

-3.80343525e+00 -1.62268470e+00 -4.35805247e+00 -1.16887440e+00]",Computer_keyboard

"[-5.19274594e+02 -2.71449943e+01 -2.83462511e+01 1.66401010e+01

-6.06738251e+00 1.47208344e+01 -1.07641763e+01 3.56846923e+00

-1.62394525e+01 2.58537802e+00 -4.56741501e+00 2.34174948e+00

-1.59728309e+01 7.78185987e-01 -1.29608242e+01 -3.86139441e+00

-6.90194615e+00 5.39548086e+00 -2.40081456e+00 3.20240751e+00

-3.26320455e+00 2.60146420e+00 1.02619756e+00 5.97824882e+00

-1.17367641e+00 1.66920391e+00 1.77947348e+00 7.43221466e+00

2.11138313e+00 5.34002900e+00 2.16695357e-01 1.53387821e-01

-3.44682113e+00 -1.31276163e+00 -2.47808711e+00 2.25855008e+00

-7.96137488e-01 -4.80598658e+00 -6.78338005e+00 -3.44156603e-01]",Hi-hat

"[-5.11254565e+02 5.10915827e+00 -3.91603563e+01 3.72906926e+01

-5.41286280e+00 2.45443313e+01 -1.01455891e+01 1.54803725e+01

3.11070726e-01 1.12446725e+01 -9.53414668e+00 1.25973173e+01

-6.58191956e+00 7.64917415e+00 -6.31176949e+00 5.63290461e+00

-2.15468728e+00 3.24888960e+00 3.58324877e-01 3.32424837e+00

-3.28164742e+00 5.00128487e+00 -2.21771250e+00 4.21021373e+00

-2.17697709e+00 6.51156093e+00 -2.44081838e+00 5.74518198e+00

-1.71610276e+00 5.09435083e+00 -1.42065432e+00 2.99195342e+00

-2.19029010e-01 2.91630452e+00 3.12646255e-01 2.46836080e+00

9.40062335e-01 2.88474875e+00 7.29112169e-01 1.91076338e+00]",Keys_jangling

"[-2.70970613e+02 4.41825471e+01 -2.71657128e+01 8.34881285e+00

-1.69852725e+01 4.41621599e-01 -1.36084170e+01 3.30848527e+00

-1.16992683e+01 2.50560480e+00 -1.27283351e+01 2.17467185e+00

-1.40992865e+01 -4.51612206e+00 -1.56652726e+01 -5.17631284e+00

-1.21148181e+01 -4.30362719e+00 -1.05397332e+01 -2.37359875e+00

-7.50444779e+00 -4.63788263e-01 -2.79868337e+00 4.91808954e-02

-6.10196649e+00 -9.46571464e-01 -4.50134621e+00 4.45210813e-01

-1.57192046e+00 -1.37422032e+00 -3.75160744e+00 -4.97624069e-01

-2.39860514e+00 2.13355452e-02 -3.14399860e+00 -3.41856172e+00

-3.71535928e+00 -1.67329311e+00 -2.28692094e+00 9.12734995e-01]",Snare_drum

"[-5.32791881e+02 5.53392756e+01 -4.23844965e+00 4.32248864e+01

-6.68890584e+00 2.10324813e+01 -6.68072132e+00 1.93206158e+01

-5.93869390e+00 1.00728757e+01 -1.20915412e+01 8.35914716e+00

-6.83334787e+00 1.04005723e+01 -1.00428315e+01 3.63580785e+00

-7.01152778e+00 5.48456909e+00 -4.12633407e+00 2.93005015e+00

-1.10928915e+00 4.44012759e+00 1.01764271e+00 -1.38631256e+00

2.33545777e+00 1.66915380e+00 -6.74666616e-01 -3.49031360e+00

1.15497161e+00 4.98827185e-01 -2.92391846e+00 1.82407029e-03

1.12726490e+00 -3.68309456e+00 -9.90012043e-01 1.02524714e+00

-2.01671215e+00 -1.18087267e+00 -6.58942356e-01 -1.04852341e-01]",Writing

"[-3.88631500e+02 1.36379931e+02 -1.77521786e+01 9.47604936e+00

1.07755710e+01 1.23910543e+01 -2.61411621e+00 1.80973210e+00

-5.35874886e+00 3.42813978e+00 -3.19641142e+00 -5.10204444e+00

-1.52943596e+01 -1.13649575e+01 -2.02562810e+00 -5.18617727e+00

-5.17221832e+00 -3.08956149e+00 -3.61940867e+00 -6.03197103e+00

-5.21686540e+00 1.77924719e+00 -3.71307196e-01 1.05386576e+00

-1.75084515e+00 -4.09861376e+00 -9.37304723e+00 -3.73861911e+00

-3.07228109e+00 -1.97830041e+00 -9.26318664e+00 -3.24813016e+00

-6.89011953e+00 -3.70117311e+00 5.48948537e-01 -1.97276564e-01

-4.81475418e+00 -9.96419591e+00 -8.37488110e+00 -6.38731101e+00]",Cello

I suspect the problem is in X1, but no matter what I try I can't fix it.

Printed value of X1:

['[-4.27537437e+02 1.84112623e+01 -5.08398999e+01 5.31637161e+00\r\n -2.10733792e+01 -1.12434670e+01 -1.80090488e+01 -5.75498212e+00\r\n -6.93904306e-01 -3.86425538e-01 -1.34887390e+01 -4.86926960e+00\r\n 7.08283909e+00 2.14888911e+01 4.27220821e+00 2.68281675e+00\r\n -7.48988856e+00 -4.83437475e+00 -4.97837031e+00 4.75456743e+00\r\n 3.78830787e+00 2.42193371e+00 -5.37803284e-01 1.91941476e+00\r\n -1.30461991e+00 -5.38917506e+00 2.95554388e+00 7.73115650e+00\r\n 7.86978456e+00 6.78713944e+00 4.96348127e+00 -5.65352412e+00\r\n -8.27031422e+00 -2.86780917e-01 2.48317310e+00 -2.81628395e+00\r\n -7.71405737e+00 -2.17011549e+00 1.39035042e+00 5.37204073e+00]'

'[-3.38268402e+02 9.04193385e+01 -6.23142458e+01 1.51335214e+01\r\n -1.79282013e+01 -1.14170883e+01 -1.44048668e+01 -7.91434938e+00\r\n -2.60386278e+01 -1.33425042e+01 -1.95374448e+01 -7.54015238e+00\r\n -9.25282725e+00 -1.42761031e+01 -8.95404860e+00 -1.19382583e+01\r\n -1.71928449e+00 -5.69528325e+00 -3.24685922e+00 -5.55717358e-01\r\n -2.90710922e+00 -3.16496626e-01 2.31073633e+00 7.50894389e+00\r\n 8.43927660e+00 1.55867110e+00 -2.81710750e-01 7.12674402e-01\r\n 5.41010774e+00 8.03800790e+00 8.17677948e+00 5.93762669e+00\r\n 4.77439264e+00 9.63560502e+00 1.16040133e+01 1.08942222e+01\r\n 8.61317843e+00 5.05467430e+00 5.23978546e-01 -5.62048446e+00]'

'[-6.24420361e+02 1.05825675e+02 1.33219059e+00 8.52950436e+00\r\n -7.24284650e+00 9.61647224e+00 -1.01478913e+01 1.54396490e+01\r\n -5.98731125e+00 1.84971132e+01 8.43774569e+00 1.68807995e+01\r\n 6.56443439e+00 1.19779162e+01 -6.25981335e+00 9.84080965e+00\r\n -5.64382929e+00 2.58264885e+00 -2.00159095e+00 7.13945232e+00\r\n 6.38080413e+00 2.79037469e+00 4.53217316e+00 3.97428187e+00\r\n 1.43712071e+00 3.41697916e+00 -1.55604338e+00 -1.93967453e+00\r\n -5.66032483e+00 -4.18978478e+00 -1.28271250e+00 -1.14015339e+00\r\n -1.31668160e+00 5.41810899e-01 -2.46240243e+00 1.39396516e+00\r\n -9.86597523e-02 -4.03033817e+00 -4.41016969e+00 -1.98476223e+00]'

'[-764.52938314 8.71873263 -6.43843907 -15.01001293 -11.87936678\r\n 4.85713407 14.52481245 16.67583444 0.828503 -13.2422332\r\n -8.63103285 -2.09927411 12.74072178 17.04830133 3.82904079\r\n -4.54364402 -12.89317423 -2.72160362 4.6501101 13.58426343\r\n 12.71656223 -3.82231561 -9.36074789 -4.471898 0.80438819\r\n 11.11886213 9.47849708 5.23002199 -6.7693081 -9.3544149\r\n 1.98950657 4.5134517 11.00089496 5.36468628 -2.9164897\r\n -4.27201551 -3.70712954 4.4849342 6.91849163 5.93127321]'

'[-317.20211467 94.64278976 -29.87401214 24.54868907 -6.09035615\r\n -0.59327219 -6.94212706 20.30568222 -7.34163477 13.18906205\r\n 1.63050593 4.11504883 -8.83981319 -9.26372591 -4.08433343\r\n 0.7372643 -3.76954889 0.47261775 -6.12578635 -2.8851219\r\n 5.83740187 -2.93667644 -10.85349448 -17.17998129 -9.75256509\r\n 2.82462774 9.73483052 35.35451003 43.11077942 24.04688464\r\n 1.16382936 -15.37443287 -13.61991705 -0.33005041 17.23820257\r\n 6.06095399 -3.37540842 -12.25133004 -1.37950356 11.89579574]'

'[-3.85405043e+02 1.82486473e+02 -2.33892432e+00 3.33028570e+01\r\n 1.81593151e+01 -9.75835474e-01 -6.42208460e+00 3.98433223e-02\r\n 2.95680088e+00 -1.12593921e+00 -2.26398369e+00 -2.32506312e+00\r\n -2.47300684e+01 -9.01468486e-01 -7.79015057e+00 -4.05737105e+00\r\n -1.14309429e+01 -1.89083919e+01 -1.25810084e+01 7.34797983e-01\r\n 2.68597806e+00 1.48029000e+00 -4.17226495e+00 -9.31283741e+00\r\n -1.85426023e+00 -6.49108933e+00 1.14746636e+00 -2.65551034e+00\r\n -8.37654899e+00 -3.26042608e+00 -1.94110840e+00 -8.57032086e+00\r\n -2.65129743e+00 -1.32203655e+01 -1.84632703e+01 -8.26353278e+00\r\n -1.88503566e+00 -8.35524375e+00 -1.62149390e+01 -1.82253732e+00]'

'[-5.24920427e+02 5.94717202e+01 5.38309772e+00 1.26111679e+01\r\n 2.67003759e+00 8.02280542e+00 1.61448988e+00 6.37815119e+00\r\n 9.59449536e-01 6.12097763e+00 -2.49745585e-01 3.34996805e+00\r\n -7.38250253e-01 1.50706689e+00 -2.10623382e-01 1.81277624e+00\r\n -8.95322039e-01 3.65742001e+00 -6.42121196e-01 2.33773064e-01\r\n 1.05758933e+00 1.10403540e+00 9.32153897e-01 1.19968231e-01\r\n 2.08067929e+00 9.60565473e-02 7.88911438e-01 2.47309143e+00\r\n 3.21383146e+00 9.77452626e-02 -9.99862741e-02 5.02418510e-01\r\n -3.06560278e-01 -4.77943679e-01 -1.30247224e+00 -1.57172712e+00\r\n -3.50584169e+00 -3.74525384e+00 -1.26045936e+00 -2.23543676e+00]'

'[-280.47011806 87.04624775 1.51867678 28.7544023 8.51132611\r\n 18.14832172 0.52928007 11.7828036 3.75681467 5.85570039\r\n -3.73229661 -9.33423393 -9.80057998 -1.44813319 -9.81222806\r\n -2.71234692 -7.2027108 -1.04184486 -4.2185896 -0.66948758\r\n -5.41804403 -5.20026059 -4.9516565 -2.83758151 -3.87877157\r\n -0.90021028 1.58843783 -0.96575431 -2.81714288 -1.31403267\r\n -3.04378508 -4.39345396 -4.46993251 -4.4497167 -3.17821052\r\n -4.55557494 -6.55964337 -3.58372651 -3.26926145 -3.99807356]'

'[-455.31825766 24.64795147 -59.7479186 6.57805275 -7.34159473\r\n -0.75412168 -9.78392258 -6.14321207 -22.97267742 -11.33437337\r\n -10.24463331 13.61014451 22.3439737 38.85788626 45.62329334\r\n 32.3592885 6.6244562 -13.46206869 -4.15417543 -0.56052938\r\n 4.28166291 -19.09338248 -32.89559066 -11.32265161 25.509636\r\n 22.46449093 3.71999159 18.30566019 39.33050818 27.53211081\r\n -13.85470948 -16.00923342 8.10740382 7.70651575 -3.56247767\r\n 2.3622675 -3.03281754 -24.13676426 -2.25025972 31.24887203]'

'[-4.16342246e+02 2.39453186e+01 8.25088021e+00 1.77851446e+01\r\n -1.66942222e+01 2.56676681e+01 -1.37727697e+01 1.78291707e+01\r\n -8.90931038e+00 1.61025820e+01 -9.29215844e+00 7.44784387e+00\r\n -1.52496814e+01 8.44466390e+00 -1.13882392e+01 4.84605317e+00\r\n -8.73099370e+00 6.03583024e+00 -8.63527381e+00 4.14996525e+00\r\n -9.21384412e+00 -2.13100241e+00 -1.00770288e+01 -1.97386608e+00\r\n -7.85499989e+00 -2.73425950e-01 -4.74793935e+00 -2.76770417e-01\r\n -7.36556228e+00 -3.21462230e+00 -5.16336007e+00 -1.72343303e+00\r\n -4.85442690e+00 -2.04850630e+00 -2.97923671e+00 5.15375491e-01\r\n -3.80343525e+00 -1.62268470e+00 -4.35805247e+00 -1.16887440e+00]'

'[-5.19274594e+02 -2.71449943e+01 -2.83462511e+01 1.66401010e+01\r\n -6.06738251e+00 1.47208344e+01 -1.07641763e+01 3.56846923e+00\r\n -1.62394525e+01 2.58537802e+00 -4.56741501e+00 2.34174948e+00\r\n -1.59728309e+01 7.78185987e-01 -1.29608242e+01 -3.86139441e+00\r\n -6.90194615e+00 5.39548086e+00 -2.40081456e+00 3.20240751e+00\r\n -3.26320455e+00 2.60146420e+00 1.02619756e+00 5.97824882e+00\r\n -1.17367641e+00 1.66920391e+00 1.77947348e+00 7.43221466e+00\r\n 2.11138313e+00 5.34002900e+00 2.16695357e-01 1.53387821e-01\r\n -3.44682113e+00 -1.31276163e+00 -2.47808711e+00 2.25855008e+00\r\n -7.96137488e-01 -4.80598658e+00 -6.78338005e+00 -3.44156603e-01]'

'[-5.11254565e+02 5.10915827e+00 -3.91603563e+01 3.72906926e+01\r\n -5.41286280e+00 2.45443313e+01 -1.01455891e+01 1.54803725e+01\r\n 3.11070726e-01 1.12446725e+01 -9.53414668e+00 1.25973173e+01\r\n -6.58191956e+00 7.64917415e+00 -6.31176949e+00 5.63290461e+00\r\n -2.15468728e+00 3.24888960e+00 3.58324877e-01 3.32424837e+00\r\n -3.28164742e+00 5.00128487e+00 -2.21771250e+00 4.21021373e+00\r\n -2.17697709e+00 6.51156093e+00 -2.44081838e+00 5.74518198e+00\r\n -1.71610276e+00 5.09435083e+00 -1.42065432e+00 2.99195342e+00\r\n -2.19029010e-01 2.91630452e+00 3.12646255e-01 2.46836080e+00\r\n 9.40062335e-01 2.88474875e+00 7.29112169e-01 1.91076338e+00]'

'[-2.70970613e+02 4.41825471e+01 -2.71657128e+01 8.34881285e+00\r\n -1.69852725e+01 4.41621599e-01 -1.36084170e+01 3.30848527e+00\r\n -1.16992683e+01 2.50560480e+00 -1.27283351e+01 2.17467185e+00\r\n -1.40992865e+01 -4.51612206e+00 -1.56652726e+01 -5.17631284e+00\r\n -1.21148181e+01 -4.30362719e+00 -1.05397332e+01 -2.37359875e+00\r\n -7.50444779e+00 -4.63788263e-01 -2.79868337e+00 4.91808954e-02\r\n -6.10196649e+00 -9.46571464e-01 -4.50134621e+00 4.45210813e-01\r\n -1.57192046e+00 -1.37422032e+00 -3.75160744e+00 -4.97624069e-01\r\n -2.39860514e+00 2.13355452e-02 -3.14399860e+00 -3.41856172e+00\r\n -3.71535928e+00 -1.67329311e+00 -2.28692094e+00 9.12734995e-01]'

'[-5.32791881e+02 5.53392756e+01 -4.23844965e+00 4.32248864e+01\r\n -6.68890584e+00 2.10324813e+01 -6.68072132e+00 1.93206158e+01\r\n -5.93869390e+00 1.00728757e+01 -1.20915412e+01 8.35914716e+00\r\n -6.83334787e+00 1.04005723e+01 -1.00428315e+01 3.63580785e+00\r\n -7.01152778e+00 5.48456909e+00 -4.12633407e+00 2.93005015e+00\r\n -1.10928915e+00 4.44012759e+00 1.01764271e+00 -1.38631256e+00\r\n 2.33545777e+00 1.66915380e+00 -6.74666616e-01 -3.49031360e+00\r\n 1.15497161e+00 4.98827185e-01 -2.92391846e+00 1.82407029e-03\r\n 1.12726490e+00 -3.68309456e+00 -9.90012043e-01 1.02524714e+00\r\n -2.01671215e+00 -1.18087267e+00 -6.58942356e-01 -1.04852341e-01]'

'[-3.88631500e+02 1.36379931e+02 -1.77521786e+01 9.47604936e+00\r\n 1.07755710e+01 1.23910543e+01 -2.61411621e+00 1.80973210e+00\r\n -5.35874886e+00 3.42813978e+00 -3.19641142e+00 -5.10204444e+00\r\n -1.52943596e+01 -1.13649575e+01 -2.02562810e+00 -5.18617727e+00\r\n -5.17221832e+00 -3.08956149e+00 -3.61940867e+00 -6.03197103e+00\r\n -5.21686540e+00 1.77924719e+00 -3.71307196e-01 1.05386576e+00\r\n -1.75084515e+00 -4.09861376e+00 -9.37304723e+00 -3.73861911e+00\r\n -3.07228109e+00 -1.97830041e+00 -9.26318664e+00 -3.24813016e+00\r\n -6.89011953e+00 -3.70117311e+00 5.48948537e-01 -1.97276564e-01\r\n -4.81475418e+00 -9.96419591e+00 -8.37488110e+00 -6.38731101e+00]']

Any help would be appreciated.

2 ANSWERS

I changed a few things at the top of your code; try running it again.

```python
import IPython.display as ipd
from scipy.io import wavfile
import os
import pandas as pd
import matplotlib.pyplot as plt
import random
import numpy as np
import time
import ast
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten
from keras.layers import Convolution2D, MaxPooling2D
from keras.optimizers import Adam
from sklearn import metrics
from sklearn.preprocessing import LabelEncoder
from keras.utils import np_utils
import re

# Read the data
temp1 = pd.read_csv('test.csv')
y1 = np.array(temp1.label.tolist())
# Each feature cell is a string like "[-4.27e+02 1.84e+01 ...]": turn the whitespace
# into commas so ast.literal_eval can parse it as a Python list of floats.
X1 = np.array([ast.literal_eval(re.sub(r'\s+', ',', x)) for x in temp1.feature.tolist()])
lb = LabelEncoder()
y1 = np_utils.to_categorical(lb.fit_transform(y1))

# Train the model
num_labels = y1.shape[1]
model = Sequential()
model.add(Dense(256, input_shape=(40,)))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(256))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(num_labels))
model.add(Activation('softmax'))
model.compile(loss='categorical_crossentropy', metrics=['accuracy'], optimizer='adam')
model.fit(X1, y1, batch_size=32, epochs=200, validation_split=0.1, shuffle=True)
```
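For reference, here is a minimal sketch of the same parsing step pulled into a helper that works one row at a time and checks the vector length, so a malformed row is reported by its index instead of failing the whole list comprehension. The name `parse_features` and the shortened 4-value sample rows are illustrative assumptions; the real data has 40 values per row, which is the default here.

```python
import ast
import re

import numpy as np


def parse_features(strings, n_features=40):
    """Hypothetical helper: parse stringified vectors such as
    '[-4.27e+02 1.84e+01 ...]' into an (n_samples, n_features) float matrix,
    using the same regex + ast.literal_eval trick as the answer above."""
    rows = []
    for i, s in enumerate(strings):
        try:
            # Whitespace runs become commas, giving a valid Python list literal.
            values = ast.literal_eval(re.sub(r"\s+", ",", s))
        except (SyntaxError, ValueError) as exc:
            raise ValueError(f"row {i} could not be parsed: {s[:30]!r}") from exc
        if len(values) != n_features:
            raise ValueError(f"row {i} has {len(values)} values, expected {n_features}")
        rows.append(values)
    return np.array(rows, dtype=float)


# Two rows shortened to 4 values, in the same format as the CSV cells.
raw = [
    "[-4.27537437e+02 1.84112623e+01\r\n -5.08398999e+01 5.31637161e+00]",
    "[-764.52938314 8.71873263\r\n -6.43843907 -15.01001293]",
]
X = parse_features(raw, n_features=4)
print(X.shape)  # (2, 4)
```

In the training script this would stand in for the `X1 = ...` line, e.g. `X1 = parse_features(temp1.feature.tolist())`.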

OK, thanks a lot!

It works on this dataset. But when I test on a larger dataset, I get an error.

Here is the larger dataset I tested with: https://drive.google.com/file/d/1nNz7og0AMYx9A6vrf5M1Nq9woAsz_10r/view?usp=sharing I hope you can help.

To fix that error, replace this line:

```python
X1 = np.array([ast.literal_eval(re.sub(r'\s+', ',', x)) for x in temp1.feature.tolist()])
```

with this one:

```python
X1 = np.array([ast.literal_eval(re.sub(r'(\d)\s+', r'\1,', x)) for x in temp1.feature.tolist()])
```

The root problem is that you stored the preprocessed data as strings, so it has to be parsed back as strings, and the stored format is not consistent. That is why code that works on the small dataset fails on the larger one: one sample in the larger dataset is stored differently from all the others (I didn't know that yesterday). Look at line 2024 of the data and you will see it. The new pattern only turns whitespace that directly follows a digit into a comma, so whitespace in other positions (for example right after the opening `[`) no longer produces a stray comma that `ast.literal_eval` rejects.
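To see why the second pattern is more tolerant, here is a minimal sketch on a hypothetical row with whitespace right after the opening bracket. That row is an assumption about what an inconsistent sample might look like; the actual content of line 2024 is not shown in this thread. The sketch also includes `parse_split`, a swapped-in alternative (not from the thread) that avoids regexes by stripping the brackets and splitting on whitespace.

```python
import ast
import re


def parse_old(s):
    # First suggestion: every whitespace run becomes a comma.
    return ast.literal_eval(re.sub(r"\s+", ",", s))


def parse_new(s):
    # Fix: only whitespace that directly follows a digit becomes a comma.
    return ast.literal_eval(re.sub(r"(\d)\s+", r"\1,", s))


def parse_split(s):
    # Alternative: drop the brackets, split on any whitespace, convert to float.
    return [float(tok) for tok in s.strip("[]").split()]


def try_parse(parser, s):
    """Return the parsed list, or None if the parser rejects the string."""
    try:
        return parser(s)
    except (SyntaxError, ValueError):
        return None


# Hypothetical row with whitespace right after '[' (shortened to 4 values).
row = "[ -4.27537437e+02  1.84112623e+01\r\n  -5.08398999e+01  5.31637161e+00]"

print(try_parse(parse_old, row))  # None: '[ -4.2...' becomes '[,-4.2...', which is not valid Python
print(try_parse(parse_new, row))
print(try_parse(parse_split, row))
```

The split-based version does not care where the whitespace sits, at the cost of assuming every token between the brackets is a plain number.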

OK. The problem is probably that when I extracted the MFCCs I saved them with to_csv, so each matrix was stored as a string and some samples came out malformed. I tried saving the matrix to a .npy file with numpy, as @hoangdinhthoi suggested, and the results were correct too. Thanks a lot @hoangdinhthoi and @QuangPH!
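A minimal sketch of the `.npy` approach, assuming random stand-in arrays in place of the real MFCC matrix and labels, and hypothetical file names `features.npy` and `labels.npy`. Unlike a CSV of strings, `np.save` records the shape and dtype, so `np.load` returns exactly the array that was saved and no string parsing is needed.

```python
import os
import tempfile

import numpy as np

# Hypothetical stand-ins: 15 samples x 40 MFCC coefficients, one string label each.
rng = np.random.default_rng(0)
features = rng.normal(size=(15, 40))
labels = np.array(["Cello", "Hi-hat", "Trumpet"] * 5)

with tempfile.TemporaryDirectory() as tmp:
    feat_path = os.path.join(tmp, "features.npy")
    label_path = os.path.join(tmp, "labels.npy")
    # np.save writes the binary .npy format, preserving shape and dtype.
    np.save(feat_path, features)
    np.save(label_path, labels)

    # np.load reads the arrays back fully into memory.
    X = np.load(feat_path)
    y = np.load(label_path)

print(X.shape, X.dtype)  # (15, 40) float64
```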

Could you share the .csv file? If not, could you post the shapes of X1 and y1? Keras is complaining about the shape of the data you pass into the network.

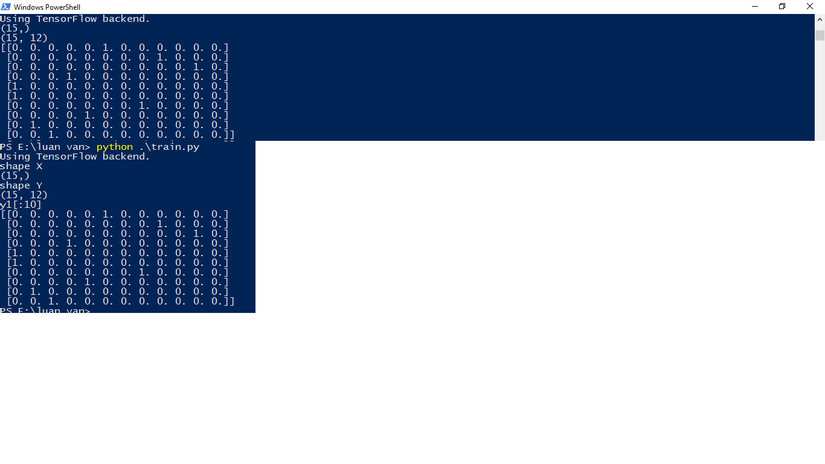

X shape: (15,), y shape: (15, 12)
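The shape `(15,)` is the clue: `X1` is a 1-D array holding one string per sample rather than a 15x40 matrix of numbers, which appears to be why Keras sees a single value per sample and reports `(1,)` instead of `(40,)`. A minimal sketch reproducing this with two shortened, hypothetical rows (4 values instead of 40):

```python
import numpy as np

# Two rows as pd.read_csv returns them: each MFCC vector is a single string.
raw = [
    "[-4.27537437e+02 1.84112623e+01\r\n -5.08398999e+01 5.31637161e+00]",
    "[-764.52938314 8.71873263\r\n -6.43843907 -15.01001293]",
]

X_str = np.array(raw)
print(X_str.shape, X_str.dtype.kind)  # (2,) U  -> one unicode string per sample

# Stripping the brackets and splitting on whitespace recovers a numeric matrix.
X_num = np.array([s.strip("[]").split() for s in raw], dtype=float)
print(X_num.shape)  # (2, 4)
```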

@Phuoc Your error happens because the data in your .csv file is stored as strings. Next time you build the dataset, you can save the matrix directly with numpy instead of converting it to a string and writing it to a CSV. From what I can see, your file has 15 samples, each a 40-dimensional vector (number of features = 40), so you can simply save the 15x40 matrix to a .npy file with numpy. As for the labels, you currently have a 15x12 matrix. That works, but you can skip the one-hot conversion and, when compiling the model, replace "categorical_crossentropy" with "sparse_categorical_crossentropy".
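Why the two losses are interchangeable, as a small NumPy sketch with made-up softmax outputs: categorical cross-entropy against a one-hot row picks out exactly the log-probability of the true class, which the sparse version reads directly from the integer label. In the Keras script this would mean passing `lb.fit_transform(y1)` without `to_categorical` and compiling with `loss='sparse_categorical_crossentropy'`; the numbers below are illustrative only.

```python
import numpy as np

# Hypothetical softmax outputs for 3 samples over 4 classes.
probs = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.20, 0.50, 0.20, 0.10],
    [0.25, 0.25, 0.25, 0.25],
])
int_labels = np.array([0, 1, 3])              # integer class ids, as LabelEncoder produces
one_hot = np.eye(probs.shape[1])[int_labels]  # the one-hot form to_categorical would give

# Categorical cross-entropy: sum over classes of -y_true * log(y_pred).
categorical = -(one_hot * np.log(probs)).sum(axis=1)
# Sparse categorical cross-entropy: -log of the probability at the integer label.
sparse = -np.log(probs[np.arange(len(int_labels)), int_labels])

print(np.allclose(categorical, sparse))  # True
```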

@hoangdinhthoi OK, let me try your approach.