Why We Need a Modern Big Data Integration Platform


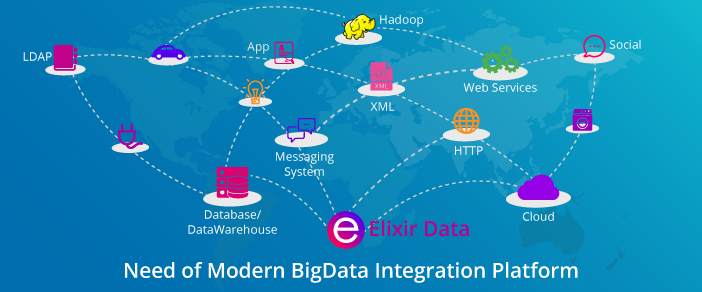

Data is everywhere, and we are generating it from many different sources such as social media, sensors, APIs, and databases.


Healthcare, insurance, finance, banking, energy, telecom, manufacturing, retail, IoT, and M2M are the leading domains for data generation. Governments are using BigData to improve their efficiency and the delivery of services to citizens.

The biggest challenge for enterprises is creating business value from the data coming from existing systems and from new sources. Enterprises are looking for a modern data integration platform for aggregation, migration, broadcast, correlation, data management, and security.

Traditional ETL is undergoing a paradigm shift driven by the need for business agility, and the need for a Modern Data Integration Platform is growing. Enterprises need modern data integration for agility and for end-to-end operations and decision-making. This involves integrating data from different sources, processing it in batch, streaming, and real-time modes, and applying BigData management, BigData governance, and security.

BigData types include:

- What type of data it is
- The format of the data content required
- Whether the data is transactional, historical, or master data
- The speed or frequency at which the data is made available
- How to process the data, i.e. in real time or in batch mode

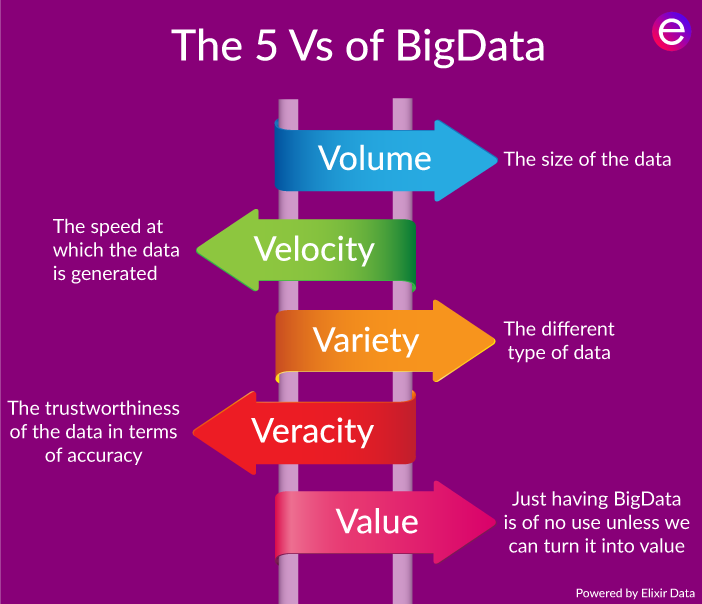

5 V’s to Define BigData

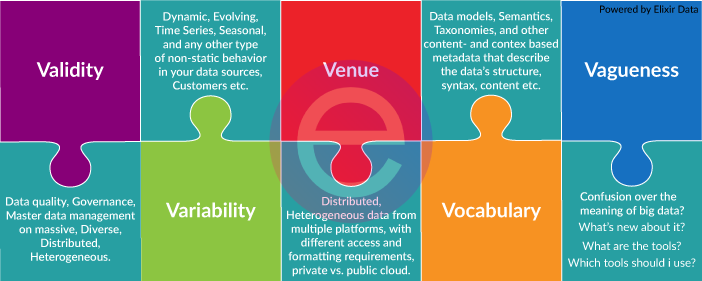

Additional 5 V’s to Define BigData

Data Ingestion and Data Transformation

Data Ingestion is the process of bringing structured and unstructured data from where it originates into a system where it can be stored and analyzed to support business decisions. Ingestion may be continuous or asynchronous, and it may be real-time, batched, or a combination of both.
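The difference between batched and real-time ingestion can be sketched in a few lines. This is a minimal illustration rather than any platform's API. The function names `ingest_batch` and `ingest_stream` and the sensor records are hypothetical. The batch path groups records into fixed-size chunks before handing them on. The streaming path hands each record to a sink as soon as it arrives.

```python
from typing import Callable, Iterable, Iterator, List

Record = dict


def ingest_batch(source: Iterable[Record], batch_size: int) -> Iterator[List[Record]]:
    """Group incoming records into fixed-size batches; the last batch may be smaller."""
    batch: List[Record] = []
    for record in source:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def ingest_stream(source: Iterable[Record], sink: Callable[[Record], None]) -> int:
    """Pass each record to the sink as soon as it arrives; return how many were ingested."""
    count = 0
    for record in source:
        sink(record)
        count += 1
    return count


# Hypothetical sensor feed with five readings.
readings = [{"sensor": "s1", "value": v} for v in range(5)]
batches = list(ingest_batch(readings, batch_size=2))
print([len(b) for b in batches])  # [2, 2, 1]

stored: List[Record] = []
print(ingest_stream(readings, stored.append))  # 5
```

In a real deployment the source would be a message queue, file drop, or database cursor rather than a list. The shape of the two loops stays the same.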

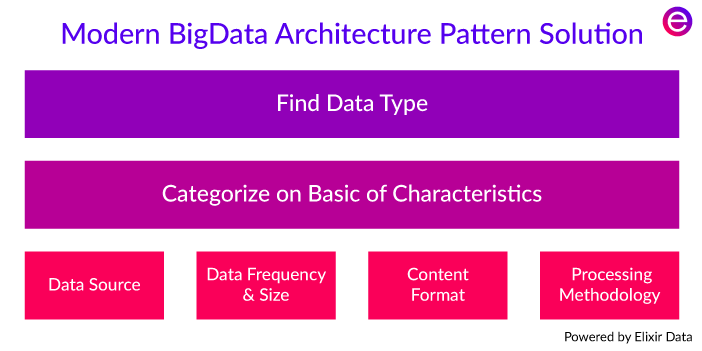

Defining the BigData Characteristics: Using the different BigData types helps us define the BigData characteristics, i.e. how the data is collected, processed, and analyzed, and whether it is deployed on-premises or in a public or hybrid cloud.

- Data Type: the type of data:
  - Transactional
  - Historical
  - Master data and others
- Data Content Format: the format of the data:
  - Structured (RDBMS)
  - Unstructured (audio, video, and images)
  - Semi-structured
- Data Size: data may be small, medium, large, or extra large, i.e. incoming data can range from bytes and KBs to MBs or even GBs.
- Data Throughput and Latency: how much data is expected and how often it arrives. Throughput and latency depend on the data source:
  - On demand, as with social media data
  - Continuous feed, real-time (weather data, transactional data)
  - Time series (time-based data)
- Processing Methodology: the technique applied to process the data (e.g. predictive analytics, ad-hoc query and reporting).
- Data Sources: where the data is generated:
  - The web and social media
  - Machine-generated
  - Human-generated, etc.
- Data Consumers: all possible consumers of the processed data:
  - Business processes
  - Business users
  - Enterprise applications
  - Individual people in various business roles
  - Part of the process flows
  - Other data repositories or enterprise applications
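One way to make these characteristics concrete is to record them per source in a small descriptor. The sketch below is illustrative only. `SourceProfile`, the enum names, and the example sources are hypothetical. The rule in `processing_mode` (continuous feeds go to real-time processing, everything else to batch) is an assumption made for the example and is not taken from the article.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class DataType(Enum):
    TRANSACTIONAL = "transactional"
    HISTORICAL = "historical"
    MASTER = "master"


class ContentFormat(Enum):
    STRUCTURED = "structured"            # e.g. RDBMS tables
    UNSTRUCTURED = "unstructured"        # e.g. audio, video, images
    SEMI_STRUCTURED = "semi-structured"


class Arrival(Enum):
    ON_DEMAND = "on demand"              # e.g. social media pulls
    CONTINUOUS = "continuous"            # e.g. real-time feeds
    TIME_SERIES = "time series"          # time-based data


@dataclass
class SourceProfile:
    """Hypothetical descriptor recording the characteristics of one data source."""
    name: str
    data_type: DataType
    content_format: ContentFormat
    arrival: Arrival
    consumers: List[str] = field(default_factory=list)

    def processing_mode(self) -> str:
        # Assumed rule for illustration: continuous feeds are processed in
        # real time, everything else in batch.
        return "real-time" if self.arrival is Arrival.CONTINUOUS else "batch"


orders = SourceProfile("orders", DataType.TRANSACTIONAL, ContentFormat.STRUCTURED,
                       Arrival.CONTINUOUS, ["Business processes"])
customers = SourceProfile("crm-customers", DataType.MASTER, ContentFormat.STRUCTURED,
                          Arrival.ON_DEMAND, ["Business users"])
print(orders.processing_mode())     # real-time
print(customers.processing_mode())  # batch
```

Using enums rather than free-text strings keeps the classification consistent across sources. Downstream routing decisions can then switch on a fixed set of values.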

Major Industries Impacted by Big Data

What is Data Integration?

Data Integration is the process of ingesting data from different sources (RDBMS, social media, sensors, M2M, etc.). Data mapping, schema definition, and data transformation are then used to build a data platform for analytics and reporting. The goal is to deliver the right data, in the right format, at the right time.
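Data mapping and schema definition can be illustrated with a small Python sketch. It is a minimal example under stated assumptions. The source names `crm_db` and `social`, their field names, and the target schema are all hypothetical. Each source record is renamed into one unified schema, and every value is coerced to the type the schema declares.

```python
from typing import Any, Dict

# Hypothetical mappings from each source's field names to the unified schema.
FIELD_MAPPINGS: Dict[str, Dict[str, str]] = {
    "crm_db": {"cust_id": "customer_id", "full_name": "name", "email_addr": "email"},
    "social": {"id": "customer_id", "user": "name", "contact": "email"},
}

# Schema definition: target field name -> type every value is converted to.
TARGET_SCHEMA = {"customer_id": str, "name": str, "email": str}


def map_record(source: str, record: Dict[str, Any]) -> Dict[str, Any]:
    mapping = FIELD_MAPPINGS[source]
    # Data mapping: rename known fields and drop fields the schema does not define.
    renamed = {mapping[k]: v for k, v in record.items() if k in mapping}
    # Data transformation: coerce to the schema's types; missing fields become None.
    return {name: (typ(renamed[name]) if name in renamed else None)
            for name, typ in TARGET_SCHEMA.items()}


row = {"cust_id": 42, "full_name": "Ada Lovelace", "email_addr": "ada@example.com"}
post = {"id": "42", "user": "Ada Lovelace", "contact": "ada@example.com", "likes": 7}
print(map_record("crm_db", row) == map_record("social", post))  # True
```

The database row and the social media record differ in field names and ID types. After mapping, they produce the same unified record.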

BigData integration provides a unified view of data for business agility and decision making, and it involves:

- Discovering the data
- Profiling the data
- Understanding the data
- Improving the data
- Transforming the data
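A simple version of the profiling step counts, for each field, how many values are missing and how many distinct values occur. The function below is a hypothetical sketch, not a specific profiling tool. It assumes records arrive as Python dictionaries and that field values are hashable.

```python
from typing import Any, Dict, List


def profile(records: List[Dict[str, Any]]) -> Dict[str, Dict[str, int]]:
    """Per-field count of missing values and distinct non-missing values."""
    fields = sorted({key for r in records for key in r})
    report: Dict[str, Dict[str, int]] = {}
    for f in fields:
        # A field that is absent from a record is treated the same as an explicit None.
        values = [r.get(f) for r in records]
        non_null = [v for v in values if v is not None]
        report[f] = {
            "missing": len(values) - len(non_null),
            "distinct": len(set(non_null)),  # assumes hashable values
        }
    return report


records = [{"id": 1, "country": "VN"}, {"id": 2, "country": None}, {"id": 3}]
print(profile(records))
# {'country': {'missing': 2, 'distinct': 1}, 'id': {'missing': 0, 'distinct': 3}}
```

A report like this feeds the next steps. A field with many missing values is a candidate for improvement. A field whose distinct count equals the record count may serve as a key.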

A Data Integration project usually involves the following steps:

- Ingest data from different sources, where it resides in multiple formats.
- Transform the data, i.e. convert it into a single format so that users can work with unified data records. A data pipeline is the main component used for integration and transformation.
- Metadata Management: centralized collection and management of metadata about the data.
- Store the transformed data so that analysts can get exactly what they need when the business needs it, whether in batch or in real time.
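These four steps can be sketched as a tiny in-memory pipeline. It is a minimal illustration under stated assumptions. The two input formats (CSV and JSON), the `id`/`amount` fields, and the functions `ingest`, `transform`, and `run_pipeline` are hypothetical. A plain list stands in for the storage layer, and another list acts as the metadata catalog.

```python
import csv
import io
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

Record = Dict[str, Any]


def ingest(csv_text: str, json_text: str) -> List[Record]:
    # Ingest: read two sources that hold the same kind of data in different formats.
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    events = json.loads(json_text)
    return [{"source": "csv", **r} for r in rows] + [{"source": "json", **e} for e in events]


def transform(records: List[Record]) -> List[Record]:
    # Transform: convert every record to one format with consistent types.
    return [{"source": r["source"], "id": int(r["id"]), "amount": float(r["amount"])}
            for r in records]


def run_pipeline(csv_text: str, json_text: str,
                 store: List[Record], catalog: List[Record]) -> int:
    unified = transform(ingest(csv_text, json_text))
    store.extend(unified)  # Store: make the unified records available to analysts.
    catalog.append({       # Metadata management: record what was loaded and when.
        "loaded_at": datetime.now(timezone.utc).isoformat(),
        "record_count": len(unified),
        "fields": sorted(unified[0]) if unified else [],
    })
    return len(unified)


store: List[Record] = []
catalog: List[Record] = []
print(run_pipeline("id,amount\n1,9.5\n2,3\n", '[{"id": "3", "amount": 7.25}]', store, catalog))  # 3
```

Keeping each step as a separate function mirrors the list above. An individual step, for example the store, can be swapped for a real database or message queue without touching the others.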

Why Data Integration Is Required

- Make Data Records Centralized: Data is stored in different formats, such as tabular, graph, hierarchical, structured, and unstructured. Without integration, a user has to go through all of these formats before reaching a business decision. A single view that combines the different formats therefore supports better decision making.
- Freedom to Choose a Format: Every user has a different way or style of solving a problem. Users are free to work with the data in whatever system and format suits them best.
- Reduce Data Complexity: When data resides in different formats, complexity grows as the data grows. This degrades decision-making capability, and more time is spent working out how to proceed with the data.
- Prioritize the Data: With a single view of all data records, it is easy to find out which data is most useful to the business and which is not required.
- Better Understanding of Information: A single view of the data also helps non-technical users understand how to use the data records effectively. When solving a problem, you succeed only if non-technical stakeholders can understand what you are saying.
- Keeping Information Up to Date: Data grows every day, and new data regularly needs to be added to the existing data. Data Integration makes it easy to keep the information up to date.
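Keeping integrated data up to date often comes down to merging new records into the existing store by key, an "upsert". The sketch below assumes records are dictionaries keyed by a hypothetical `id` field, held in an in-memory dict. It also assumes that newer values should overwrite older ones field by field. Real platforms may instead keep history or resolve conflicts differently.

```python
from typing import Any, Dict, Iterable


def upsert(store: Dict[Any, Dict[str, Any]],
           new_records: Iterable[Dict[str, Any]], key: str) -> Dict[str, int]:
    """Insert unseen records and merge updates into existing ones, keyed by `key`."""
    stats = {"inserted": 0, "updated": 0}
    for record in new_records:
        k = record[key]
        if k in store:
            # Newer values overwrite older ones; fields absent from the update are kept.
            store[k] = {**store[k], **record}
            stats["updated"] += 1
        else:
            store[k] = dict(record)
            stats["inserted"] += 1
    return stats


store = {1: {"id": 1, "city": "Hanoi", "tier": "gold"}}
print(upsert(store, [{"id": 1, "city": "Da Nang"}, {"id": 2, "city": "Hue"}], key="id"))
# {'inserted': 1, 'updated': 1}
print(store[1])  # {'id': 1, 'city': 'Da Nang', 'tier': 'gold'}
```

Running the same upsert on each new batch means the unified view reflects the latest data without reloading everything from scratch.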

Continue Reading This Article At: XenonStack.com/Blog

All rights reserved