NeuralNotes — Music generation using Transformers

This post has not been updated in 2 years.

Introduction

For my bachelor thesis at VGU, I worked on using deep learning for automatic music generation. Because of their generality, deep learning and, more broadly, machine learning methods are increasingly used to produce musical material.

In my opinion, exploiting the potential of deep learning for music generation lets people create new and original music without starting from scratch, saving time and effort in the creative process. It also helps people explore different musical possibilities and ideas. However, it is hard to deny that current AI-generated music often lacks the qualities expected of a piece of music: timbres may not fit together, notes repeat excessively, rhythm and melody wander without direction, and the output can be imitative, with a risk of plagiarism.

Development is a whole process, not a single step. Given the current pace of technological progress, I believe that an AI composing music at the level of a human artist is a conceivable prospect.

In this post, I will describe what I did and what I achieved along the way. The post covers:

- Implementation: the libraries and frameworks I used, and a brief introduction to the deep learning architecture behind the project.

- System Architecture: the system I propose so that users of all levels of computer skill can interact with and experience the model.

- Future works and Conclusion: some remarks on my proposed method.

You are welcome to try out my project.

Implementation

Since the project is written in Python, I chose:

- FastAPI to deploy the web application. It offers high performance and is designed to be simple to understand and use, which reduces the amount of boilerplate code. It is also increasingly common in ML projects.

- Jinja, a popular template engine for Python web frameworks.

- MongoDB. As a NoSQL document database, it does not enforce a fixed schema, so fields can be added to or removed from documents without migrating the database schema.

- PyTorch, an open-source machine learning framework known for its ease of use and flexibility. It is also efficient, which makes it a good choice for production environments.

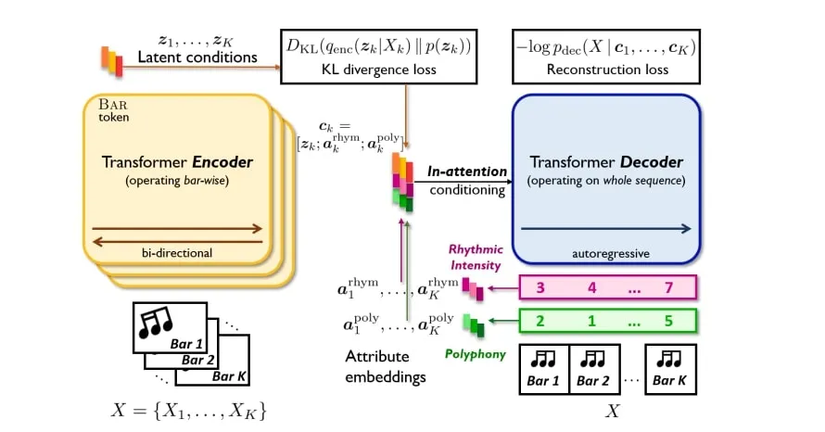

For the core deep learning component responsible for generating music, I chose MuseMorphose. Its architecture places a KL-divergence-regularized latent space, which represents individual musical bars, between a standard Transformer encoder operating at the bar level and a standard Transformer decoder that receives segment-level conditions through the in-attention mechanism.
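To make the two mechanisms in that description concrete, here is a minimal plain-Python sketch (a real model would use PyTorch tensors). It assumes the usual VAE setup: the encoder outputs a mean `mu` and log-variance `logvar` per bar, a latent is sampled with the reparameterization trick, and a KL term pulls the posterior toward a standard normal. The `in_attention` function shows the idea of projecting a segment-level condition `cond` (for example, the bar's latent vector) with a matrix `w_in` and adding it to every time step of a decoder layer's input; in the decoder this injection is repeated at each layer. All function names and the `beta` weight are illustrative assumptions, not MuseMorphose's code.

```python
import math
import random
from typing import List

def reparameterize(mu: List[float], logvar: List[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the VAE reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu: List[float], logvar: List[float]) -> float:
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))

def in_attention(hidden: List[List[float]], cond: List[float],
                 w_in: List[List[float]]) -> List[List[float]]:
    """Add the projected bar-level condition to every time step of one decoder layer's input."""
    proj = [sum(w * c for w, c in zip(row, cond)) for row in w_in]  # W_in @ cond
    return [[h + p for h, p in zip(step, proj)] for step in hidden]

def vae_loss(recon_loss: float, mu: List[float], logvar: List[float], beta: float = 1.0) -> float:
    """Hypothetical objective: reconstruction loss plus a beta-weighted KL regularizer."""
    return recon_loss + beta * kl_to_standard_normal(mu, logvar)
```

A larger `beta` pushes bar latents closer to the prior, which makes sampling easier at the cost of reconstruction detail; the right value is something to tune, not something this sketch settles.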

In my setup, since the decoder is responsible for generation, I made a small modification to its positional encoding, which allows it to achieve better performance.
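The post does not specify what the modification is. For reference, this is the standard sinusoidal positional encoding from the original Transformer, a common starting point for such changes. Whether MuseMorphose's decoder uses exactly this form is an assumption, and `sinusoidal_positional_encoding` is a plain-Python illustration rather than the project's code.

```python
import math
from typing import List

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """PE[pos][2k] = sin(pos / 10000^(2k/d_model)), PE[pos][2k+1] = cos(same angle)."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i is the even dimension index 2k
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:  # guard for odd d_model
                pe[pos][i + 1] = math.cos(angle)
    return pe
```

Each sin/cos pair oscillates at a different frequency, so every position gets a distinct vector, and a fixed offset between positions corresponds to a linear transformation of the encoding.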

Another reason I chose this model is that it represents music symbolically with REMI (Revamped MIDI) rather than with plain MIDI events. REMI, introduced with the Pop Music Transformer, adds attributes that MIDI lacks, such as explicit Bar and Position events that describe where notes fall in the metrical grid. It was designed to let Transformer-based models benefit from human knowledge of music.
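A minimal sketch of turning notes into REMI-style tokens is shown below. It assumes 4/4 time quantized to 16 positions per bar and uses simplified token names (`Bar`, `Position_k`, `Velocity_v`, `Pitch_p`, `Duration_d`); the original REMI vocabulary also includes tempo and chord events, and real implementations bin velocity and duration. `Note` and `to_remi_tokens` are hypothetical helpers, not MuseMorphose's tokenizer.

```python
from dataclasses import dataclass
from typing import List, Optional

POSITIONS_PER_BAR = 16  # assumption: 4/4 time quantized to 16th notes

@dataclass
class Note:
    start: int      # onset in 16th-note steps from the beginning of the piece
    duration: int   # length in 16th-note steps
    pitch: int      # MIDI pitch number (0-127)
    velocity: int   # MIDI velocity (1-127)

def to_remi_tokens(notes: List[Note]) -> List[str]:
    tokens: List[str] = []
    current_bar = -1
    last_position: Optional[int] = None
    for note in sorted(notes, key=lambda n: (n.start, n.pitch)):
        bar, position = divmod(note.start, POSITIONS_PER_BAR)
        # Emit one Bar token per bar boundary crossed, so empty bars stay visible.
        while current_bar < bar:
            tokens.append("Bar")
            current_bar += 1
            last_position = None
        # Simultaneous notes share a single Position token.
        if position != last_position:
            tokens.append(f"Position_{position}")
            last_position = position
        tokens += [f"Velocity_{note.velocity}", f"Pitch_{note.pitch}", f"Duration_{note.duration}"]
    return tokens
```

For example, a note starting at step 18 falls in the second bar at `Position_2`, so it is preceded by a second `Bar` token. This explicit metrical grid is what distinguishes REMI from a stream of raw MIDI time shifts.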

System Architecture

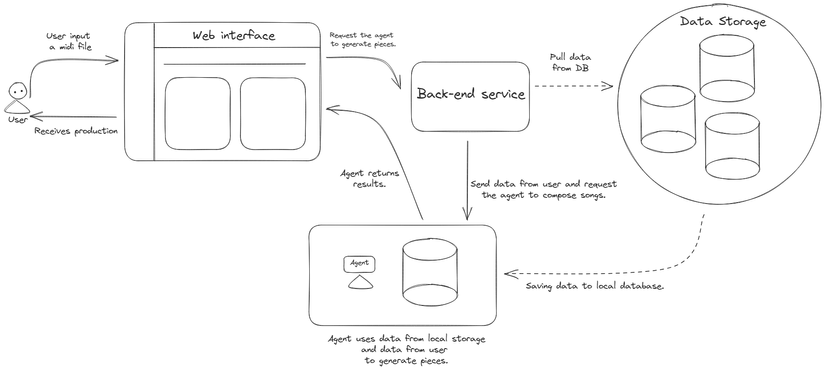

The application flow is simple. The user sends a request from the web interface to a back-end service, which prompts the agent; the agent generates songs, and they are returned to the user.

As the figure shows, the procedure has two paths, drawn as solid and dashed lines. I want the system to make use of the data it receives over time: the "Data Storage" runs on a schedule, automatically updating the local data and broadening its range.

- Updating the dataset locally adds diversity in songs and music genres, improving the user experience.

- Computing and storage should be located near the data source. To the best of my understanding, this placement reduces latency and improves performance by shortening the distance data must travel before it is processed.

- It improves security, since keeping data near its source reduces its exposure to attacks that target data in transit.
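The scheduled update of local data could look like the following minimal sketch. It assumes the storage side exposes files in a directory (`storage_dir`) and that the lab's local copy is another directory (`local_dir`); in the actual system the data may come from MongoDB instead, and `sync_new_files` is a hypothetical helper, not part of the project's code.

```python
import shutil
from pathlib import Path
from typing import List

def sync_new_files(storage_dir: Path, local_dir: Path, since: float) -> List[Path]:
    """Copy every file under storage_dir modified after `since` (Unix time) into local_dir."""
    local_dir.mkdir(parents=True, exist_ok=True)
    copied: List[Path] = []
    for src in sorted(storage_dir.rglob("*")):
        if src.is_file() and src.stat().st_mtime > since:
            dst = local_dir / src.relative_to(storage_dir)  # keep the folder layout
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves the modification time
            copied.append(dst)
    return copied
```

A scheduler such as cron would call this periodically, passing the start time of the previous run as `since`. Files changed while a run was in progress are then picked up again on the next run rather than missed; copying a file twice is harmless.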

The figure also shows three clusters, which I label the application, the lab, and the storage. The application follows a 3-tier architecture: a user-facing interface, the application server, and the data source. The lab contains the agent and local storage, which in my configuration is a directory holding received, result, and resource data. The storage's primary function is to hold data.
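The lab's local storage can be sketched as three folders plus a function that records one generation job. The folder names come from the post; the `.mid` file format, the `uuid`-based job IDs, and the `agent` callable standing in for the model are assumptions for illustration.

```python
import uuid
from pathlib import Path
from typing import Callable, Dict

SUBDIRS = ("receive", "result", "resource")  # folder names from the post

def init_lab_storage(root: Path) -> Dict[str, Path]:
    """Create <root>/receive, <root>/result and <root>/resource if they do not exist."""
    dirs = {name: root / name for name in SUBDIRS}
    for path in dirs.values():
        path.mkdir(parents=True, exist_ok=True)
    return dirs

def process_request(dirs: Dict[str, Path], prompt: bytes,
                    agent: Callable[[bytes], bytes]) -> str:
    """Store the user's input, run the agent on it, store the output; return the job ID."""
    job_id = uuid.uuid4().hex
    (dirs["receive"] / f"{job_id}.mid").write_bytes(prompt)   # data received from the user
    generated = agent(prompt)                                  # hypothetical model call
    (dirs["result"] / f"{job_id}.mid").write_bytes(generated)  # data returned to the user
    return job_id
```

The `resource` folder is created but not used here; in the post it holds resource data for the agent. Keeping inputs and outputs under the same job ID makes it easy to pair them later for analysis.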

For instance, a person with extensive experience in model research would work in "the lab", focusing solely on investigating and using models and data to produce good results. By contrast, "the storage" is where users or engineers skilled in data processing apply their understanding of the system's users to analyze recent activity, the musical genres users appear to be interested in, and whether a user's musical preferences are polarized or concentrated in particular profiles.
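One way the storage cluster could tell a concentrated taste from a polarized one is to look at the share of each genre in a user's request history. The sketch below is an assumption, not the author's method: `genre_profile` computes genre shares and a normalized Shannon entropy, and labels the profile with hand-picked thresholds (0.6 for one dominant genre; 0.8 combined and at least 0.3 for the runner-up when two genres dominate) that would need tuning on real data.

```python
import math
from collections import Counter
from typing import Dict, Iterable

def genre_profile(history: Iterable[str]) -> Dict[str, object]:
    counts = Counter(history)
    total = sum(counts.values())
    if total == 0:
        return {"shares": {}, "entropy": 0.0, "label": "unknown"}
    shares = {genre: c / total for genre, c in counts.most_common()}  # largest share first
    # Normalized Shannon entropy: 0 = a single genre, 1 = perfectly even spread.
    h = -sum(p * math.log(p) for p in shares.values())
    entropy = h / math.log(len(shares)) if len(shares) > 1 else 0.0
    top = list(shares.values())
    if top[0] >= 0.6:
        label = "concentrated"   # one genre dominates
    elif len(top) >= 2 and top[1] >= 0.3 and top[0] + top[1] >= 0.8:
        label = "polarized"      # two strong, separate interests
    else:
        label = "diverse"
    return {"shares": shares, "entropy": entropy, "label": label}
```

The entropy gives a continuous measure for dashboards, while the label is a coarse summary; both could feed back into which genres the lab's local data should cover.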

Early deployments of the system may require only a little expertise, but as the system grows, the expertise each cluster requires will increasingly diverge. Because the knowledge needed differs so much between modules, the system has to be split into smaller clusters for future development. This makes the division into three clusters reasonable and keeps the system easy to manage, maintain, and scale.

Future works and Conclusion

Overall, I have proposed a method that uses deep learning to generate music automatically, together with an application architecture that keeps the musical data diverse, so that during generation users can experience different styles derived from a given piece of music.

In the future, I plan to apply RLHF (reinforcement learning from human feedback) to the application, so that my agent can produce music “locally”.

Thank you for reading this article; I hope it added something to your knowledge bank! Just before you leave:

👉 Be sure to press the like button and follow me. It would be a great motivation for me.

All rights reserved