Using Copilot to Generate Unit Tests



Introduction

More than two years after its launch, Copilot is no longer unfamiliar to our coding community. With praise such as "game changer," "revolutionizing developer collaboration," "reshaping the way we write code," and "intelligent suggestions," it's safe to say that many of us are curious to see what Copilot can truly achieve.

Is it possible to become a two-button programmer (just using Tab and Enter) for only $10 a month? Or perhaps, can we be replaced by AI in the future? I have many questions swirling in my mind.

Fortunately, our company keeps up with the trends. It gave us the opportunity to try Copilot, which has helped answer some of my questions.

During the demo, I applied Copilot to our current project (which is in the phase of writing unit tests). In this article, let's explore Copilot together and see if its unit testing capabilities are as amazing as rumored.

The main topics we'll cover include:

- What features does Copilot offer?

- How does Copilot assist us in writing unit tests?

- Conclusions and observations.

Alright, let's get started! 🚀

Copilot features

First, let's take a look at some of Copilot's features to see how the coding community is using this personal AI pair programmer.

- Suggest Code: This is Copilot's most powerful feature. Copilot can read and understand what you're writing, then provide suitable code suggestions. It's like having a coding companion by your side.

- Explain Code: This feature provides detailed explanations for a piece of code, breaking it down step by step. It's particularly useful for understanding old code or solutions found on StackOverflow.

- Copilot Chat: Copilot can provide advice and answer questions right within the editor, eliminating the need to switch back and forth between your browser and the editor, as is the case with ChatGPT and other AI tools. Integrated into the editor, Copilot Chat can better understand project context to offer more relevant answers.

- Copilot Labs: A separate project alongside GitHub Copilot, this tool is widely used for its useful features, including code refactoring, bug fixing, debugging, and commenting.

- Others:

  - Translate: Convert code from one language to another.

  - Explore Library: Use it when you want to learn a new language or library. Copilot can suggest syntax, methods, and more relevant to your current language or library.

  - Ongoing experimental projects: Copilot CLI, Copilot for PR, and more.

  - Jokes =)): I only discovered this feature when reading reviews about Copilot. Try typing "Database tables walk into a bar" in the editor and see what happens =))

As for generating unit tests, the GitHub Copilot team is also working on an exciting feature that's worth looking forward to:

Automate automated testing.

Missed a test? GitHub Copilot can point out missing unit tests and generate new test cases for you after every change.

While waiting for this feature, today we'll explore how much the current version of Copilot can help us when it comes to writing tests.

We don't have time to write tests ..

Copilot and Unit Tests

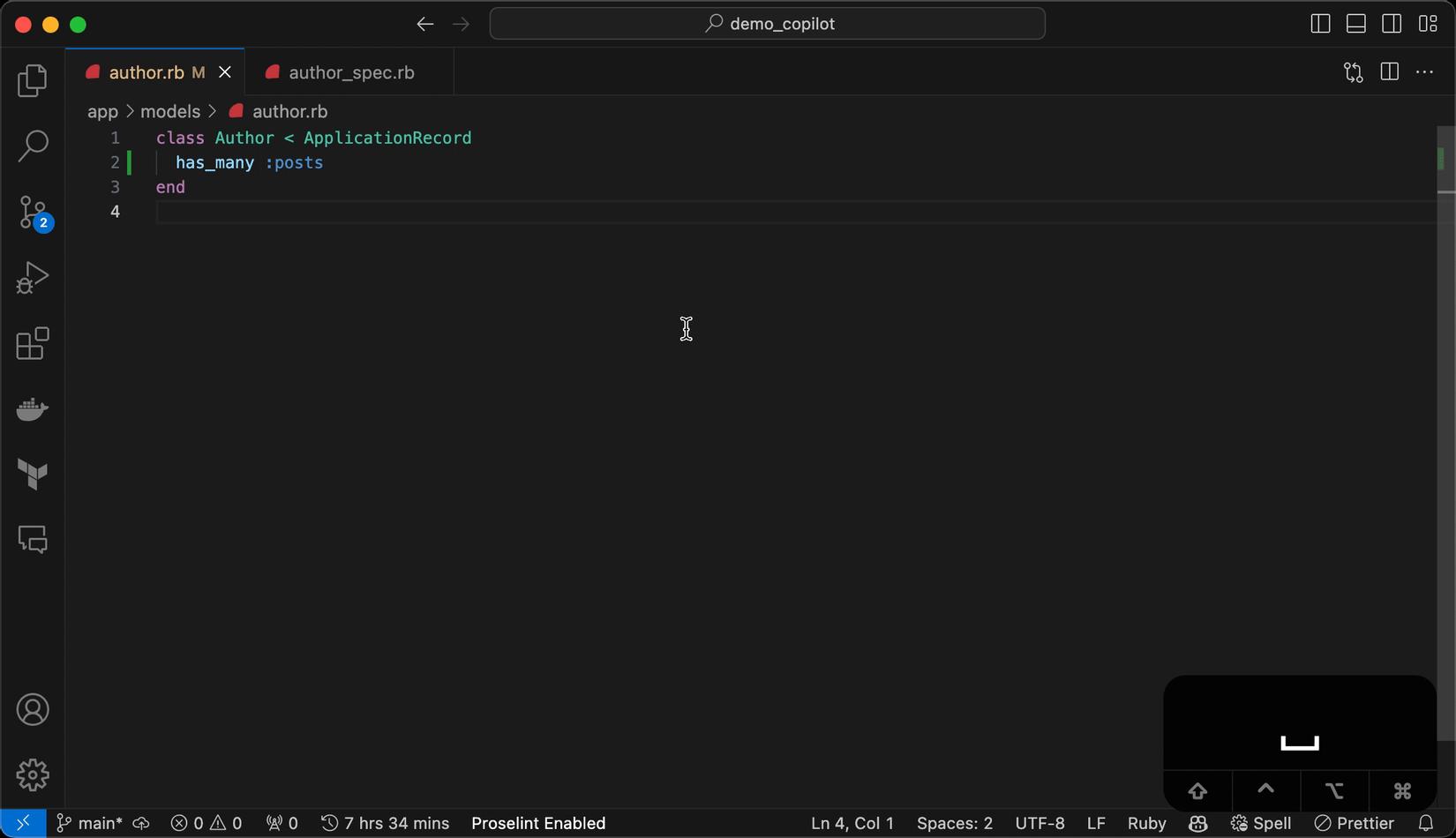

To illustrate this article, I've created a simple Rails project with two main models, Author and Post, using common testing libraries: RSpec, factory_bot, shoulda-matchers, and Faker.

In this article, I'm using VSCode. To install Copilot in VSCode, you can refer to the instructions here.

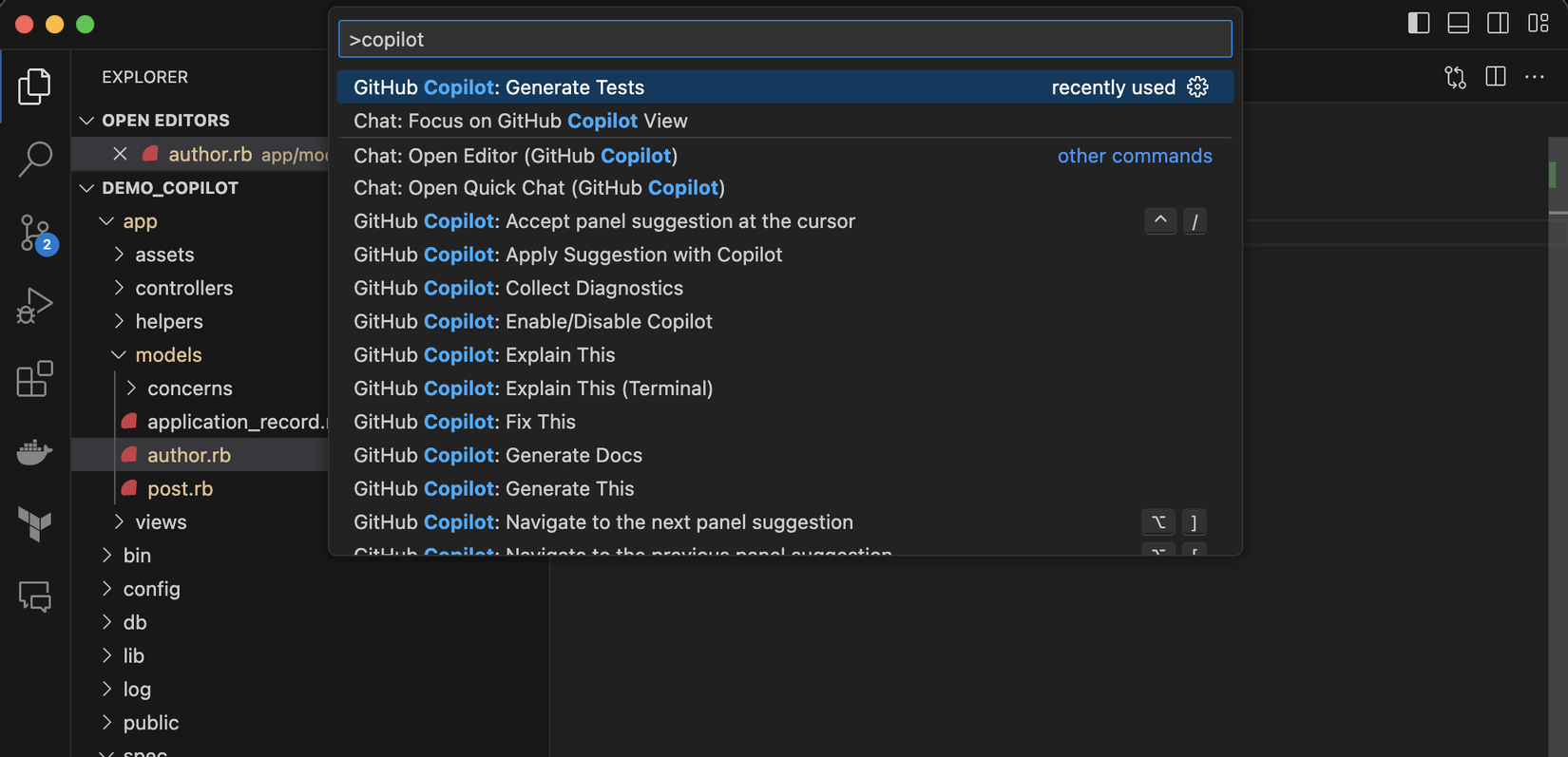

To generate tests using Copilot in VSCode, there are three methods:

- Use Cmd + Shift + P (or Ctrl + Shift + P on Windows) and enter "Generate Tests".

- Write prompts or code as usual, and Copilot will provide suggestions. You can choose to accept or discard them.

- Use Copilot Chat and ask it to generate tests.

In this article, I will use all three methods to compare their advantages and disadvantages and determine when to use each of them.

Model

Can Copilot write tests for all regular code segments inside a model?

To answer this question, let's try to list what we typically write in a model file:

Associations, Validations, Scopes, Instance methods, Class methods, Callbacks, Enum, Delegate, Custom Validations, ...

Depending on the project, there might be additional code segments, but in my experience, the majority of model files contain information like this.

Now, let's begin the experiment:

- Add more associations, validations, scopes, etc., to the model file.

- Generate tests using Copilot.

Associations

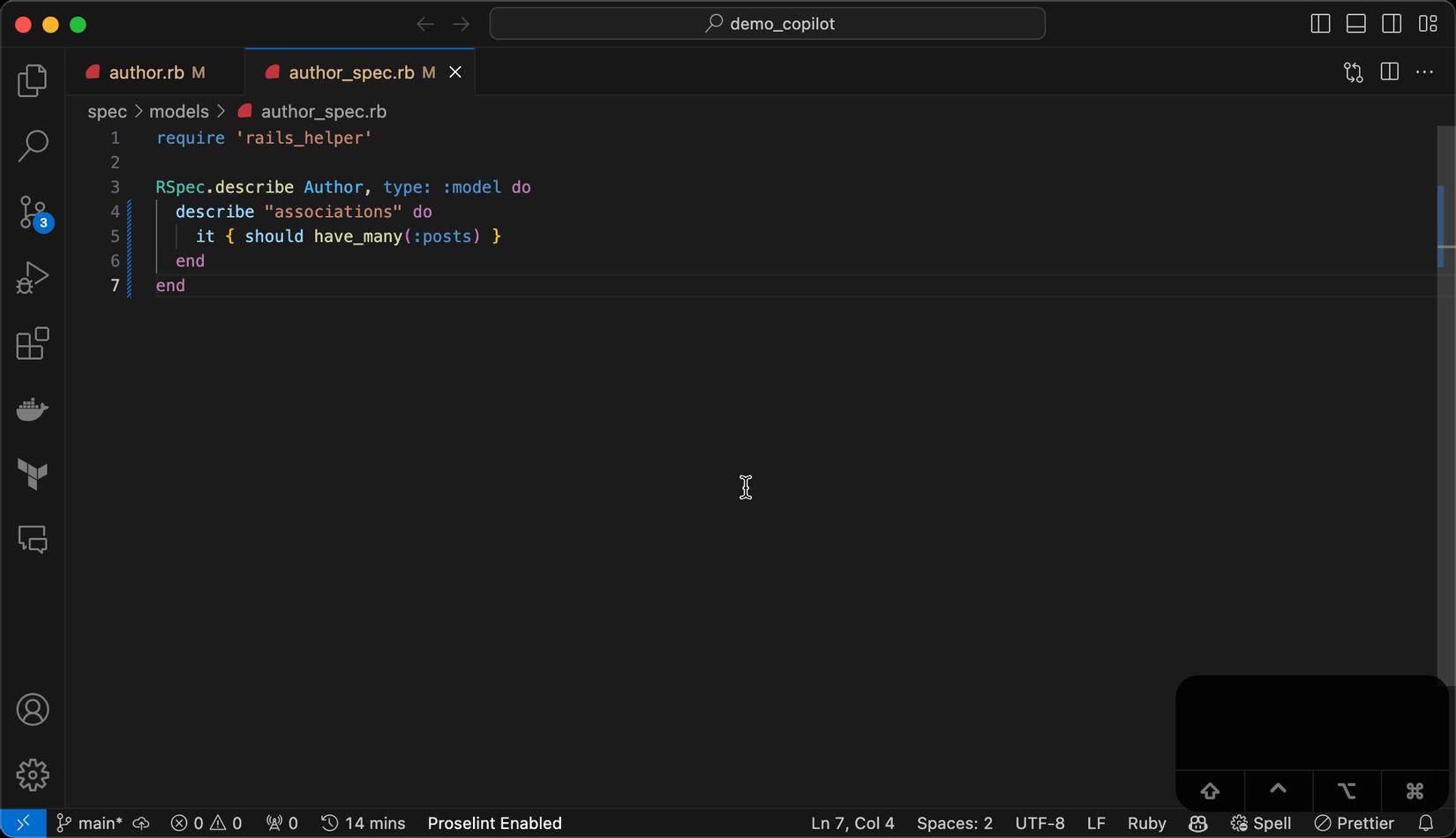

After adding the associations has_many :posts and belongs_to :author to the respective models, it's time to let Copilot do the work!

Nice!

- Pros:

  - Code is generated quite quickly, and developers can review it before applying it.

  - Knows how to add it to the corresponding spec file.

  - Understands the shoulda-matchers syntax.

- Cons:

  - When you hit apply, Copilot doesn't recognize the existing content and appends the generated code at the end of the file.

For the belongs_to association, I wrote the test manually in the spec file.

- Cons:

  - The initial code suggestion is somewhat unrelated. =))

- Pros:

  - After typing describe, Copilot provides quite a good suggestion, generating tests for the associations and the rest.

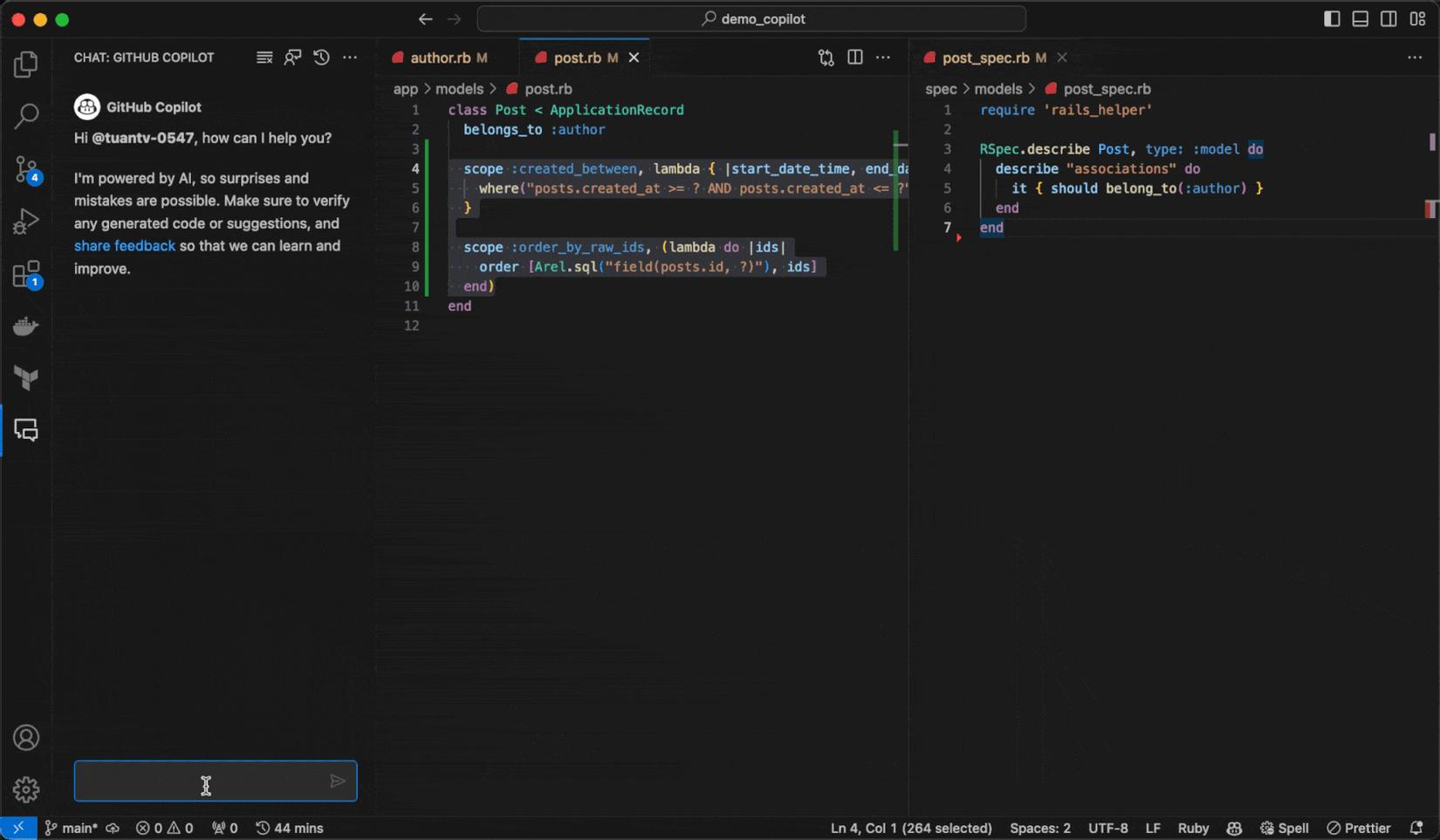

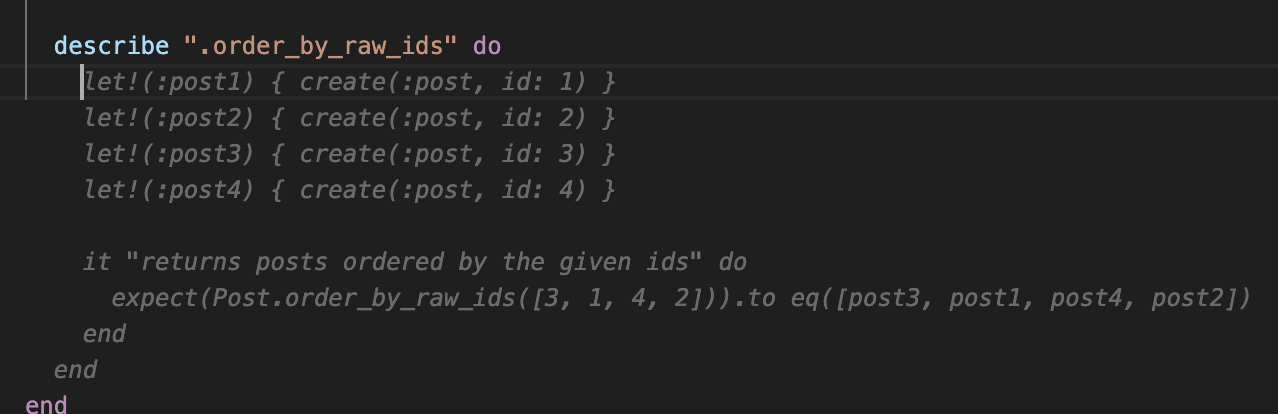

Scopes

I chose two scopes to test:

- Retrieve posts created within the time range from start_date to end_date.

- Order posts by raw ids.

This time, I used Copilot Chat to generate the tests.

Hmm. Even though the "selected code" contains two scopes, for some reason, Copilot only generated a test for one scope. 🤔

For the remaining scope, I had to type it manually. However, Copilot also understood the context and generated the test quite well.

- Pros:

  - In terms of format, the generated test is quite standard, including data for both the "include" and "not include" cases.

  - Knows how to create fake data using the FactoryBot syntax.

- Cons:

  - It doesn't generate tests for all the "selected code."

  - A significant downside is that when I ran the test, the test case "returns posts created between the given dates" failed =)) (The cause is time dependency: the lag between creating the test data and executing the test case.)

So, we can see that Copilot can generate tests, but whether they are correct or runnable is not guaranteed =))
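The time-dependency failure is easy to reproduce outside Rails. Below is a minimal plain-Ruby sketch, not the code Copilot generated: the Post struct, the created_between helper, and all timestamps are hypothetical stand-ins for the model, its scope, and the factory data. It shows how a record created at "exactly two days ago" can fall out of the range when the range start is computed a few milliseconds later, and how fixed timestamps avoid that.

```ruby
# Hypothetical stand-in for the Post model; only created_at matters here.
Post = Struct.new(:title, :created_at)

DAY = 24 * 60 * 60 # seconds in a day

# Hypothetical stand-in for the scope: keeps posts whose created_at lies
# inside the inclusive range start_date..end_date.
def created_between(posts, start_date, end_date)
  posts.select { |post| post.created_at.between?(start_date, end_date) }
end

# Fragile setup: the test data and the range are both derived from "now",
# but "now" has moved on by the time the range is built.
def fragile_example
  factory_ran_at = Time.utc(2023, 10, 17, 12, 0, 0)
  test_ran_at    = factory_ran_at + 0.005 # the example body runs 5 ms later

  boundary_post = Post.new("boundary", factory_ran_at - 2 * DAY)
  created_between([boundary_post], test_ran_at - 2 * DAY, test_ran_at)
end

# Stable setup: fixed timestamps placed clearly inside and outside the range.
def stable_example
  inside  = Post.new("inside",  Time.utc(2023, 10, 15))
  outside = Post.new("outside", Time.utc(2023, 11, 2))
  created_between([inside, outside], Time.utc(2023, 10, 1), Time.utc(2023, 10, 31))
end

puts fragile_example.empty?              # true: the boundary post is missed
puts stable_example.map(&:title).inspect # ["inside"]
```

In a real spec, the same idea usually means giving the factories explicit created_at values, or freezing time, rather than calling relative helpers in both the setup and the expectation.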

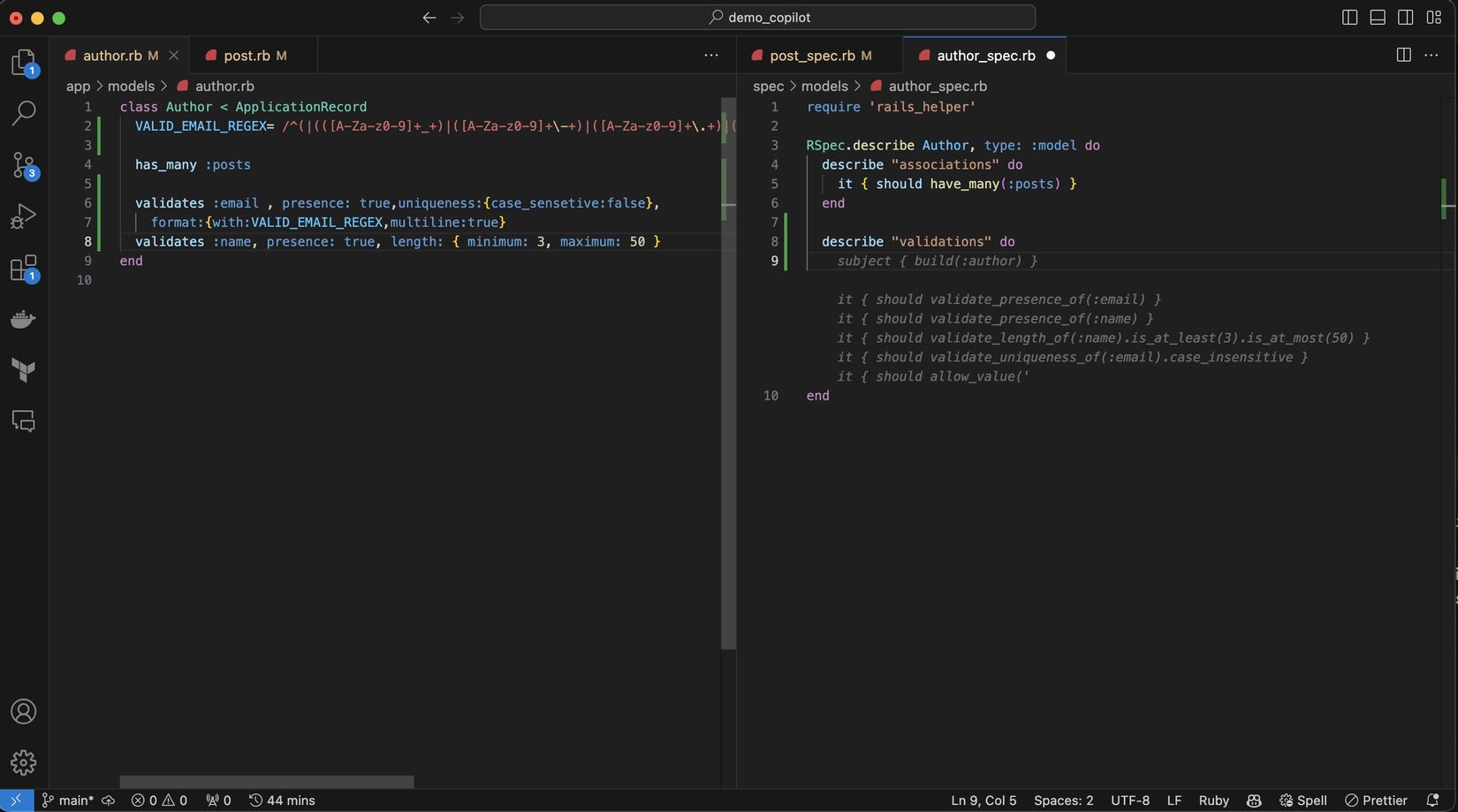

Validations

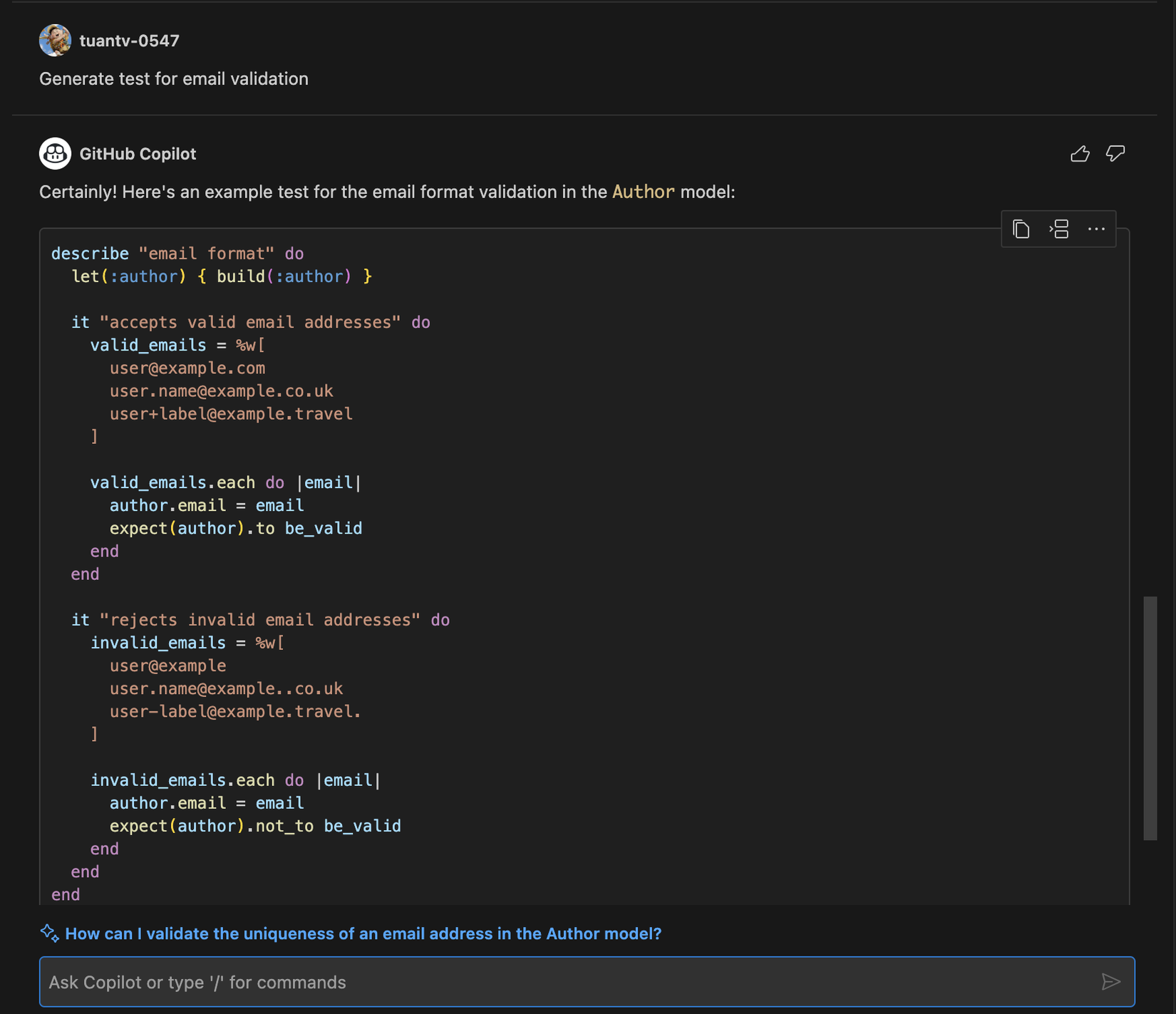

For testing Validations, I chose a simple validation for the "name" field and an email regex for email validation.

    VALID_EMAIL_REGEX = /^(|(([A-Za-z0-9]+_+)|([A-Za-z0-9]+\-+)|([A-Za-z0-9]+\.+)|([A-Za-z0-9]+\++))*[A-Za-z0-9]+@((\w+\-+)|(\w+\.))*\w{1,63}\.[a-zA-Z]{2,6})$/i

    validates :email, presence: true, uniqueness: { case_sensitive: false },
                      format: { with: VALID_EMAIL_REGEX, multiline: true }
    validates :name, presence: true, length: { minimum: 3, maximum: 50 }

At this point, I started to notice a small issue. Copilot suggested some basic tests, but the test section for email was left empty. Even after I pressed Tab to accept the current suggestion, Copilot didn't show anything further.

Even when I wrote a guiding comment (# Read VALID_EMAIL_REGEX in author.rb model, generate tests for email validations based on this regex), no new tests were generated.

I had to ask Copilot Chat to get results.

I think Copilot deserves credit here because it knew to use build(:author) instead of create(:author), which saves time when running tests because build does not write the record to the database. ⏱️
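When Copilot leaves the email tests empty, it can help to decide first which addresses the regex should accept and which it should reject. The sketch below checks VALID_EMAIL_REGEX in plain Ruby, outside Rails. The sample addresses and the email_format_ok? helper are illustrative assumptions, not the tests Copilot Chat produced.

```ruby
VALID_EMAIL_REGEX = /^(|(([A-Za-z0-9]+_+)|([A-Za-z0-9]+\-+)|([A-Za-z0-9]+\.+)|([A-Za-z0-9]+\++))*[A-Za-z0-9]+@((\w+\-+)|(\w+\.))*\w{1,63}\.[a-zA-Z]{2,6})$/i

# Hypothetical helper: true when the value matches the format regex.
def email_format_ok?(value)
  VALID_EMAIL_REGEX.match?(value)
end

# Sample addresses chosen for illustration only.
ACCEPTED_EMAILS = [
  "user@example.com",
  "first.last+tag@mail.example.co",
  "USER_name@sub-domain.example.org"
].freeze

REJECTED_EMAILS = [
  "user@example",            # no top-level domain
  "user.@example.com",       # local part ends with a separator
  "@example.com",            # empty local part
  "user@example.c",          # top-level domain shorter than 2 letters
  "user example@example.com" # whitespace in the local part
].freeze

ACCEPTED_EMAILS.each { |email| puts "#{email}: #{email_format_ok?(email)}" }
REJECTED_EMAILS.each { |email| puts "#{email}: #{email_format_ok?(email)}" }

# The leading empty alternative in the regex also lets a blank string
# through; the presence validation is what rejects that case.
puts email_format_ok?("") # true
```

In the spec file, lists like these could be passed to the allow_value matcher from shoulda-matchers, or pasted into the Copilot Chat prompt as examples of the cases you expect.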

Others

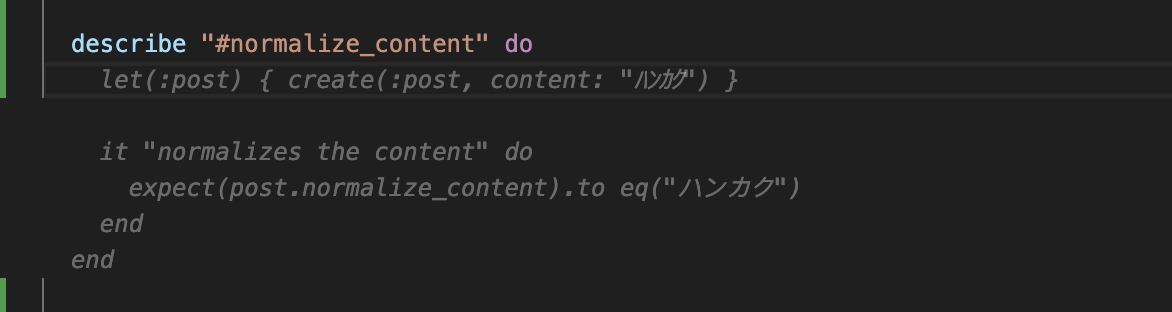

I tested some other parts such as instance methods, class methods, enums, delegation, and so on. Copilot could fulfill simple use cases. However, for more complex or less common cases, Copilot couldn't meet the requirements:

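    def normalize_content
      # Map the standalone voiced/semi-voiced sound marks to their combining forms.
      convert = { "\u309B" => "\u3099", "\u309C" => "\u309A" }
      # Compose each letter with the combining mark that follows it.
      # (A "|" inside a character class is a literal pipe, so it is dropped here.)
      content.gsub(/\u309B|\u309C/, convert).gsub(/\p{L}[\u3099\u309A]/) do |str|
        str.unicode_normalize(:nfkc)
      end
    end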

For example, I had a function like the one above, which normalizes some special characters. However, when writing a test, Copilot provided an example that was unrelated to the logic of the normalize_content function. This might be because Copilot generated the test by "guessing" based on the function's name.
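A test tied to the actual logic needs inputs that contain the characters the method rewrites. The sketch below is plain Ruby with the method adapted to take the string as an argument instead of reading the content attribute; the sample strings are assumptions chosen for illustration. Each case feeds a kana followed by a standalone voiced mark (U+309B) or semi-voiced mark (U+309C) and expects the single composed character back.

```ruby
# Adapted from the model method: takes the text as an argument so it can
# run without a model instance.
def normalize_content(content)
  convert = { "\u309B" => "\u3099", "\u309C" => "\u309A" }
  content.gsub(/\u309B|\u309C/, convert).gsub(/\p{L}[\u3099\u309A]/) do |str|
    str.unicode_normalize(:nfkc)
  end
end

# Illustrative inputs and the output each one should produce.
NORMALIZE_CASES = {
  "\u304B\u309B" => "\u304C", # か followed by ゛ becomes が
  "\u306F\u309C" => "\u3071", # は followed by ゜ becomes ぱ
  "Copilot"      => "Copilot" # text without sound marks is left alone
}.freeze

NORMALIZE_CASES.each do |input, expected|
  puts normalize_content(input) == expected # true for every case
end
```

Writing one or two cases like these by hand, or describing them in a comment, gives Copilot something concrete to continue from instead of the function name alone.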

So, we can draw a few conclusions:

- Copilot can generate simple and common tests, which helps us a lot with typing repeated snippets.

- For some code with complex logic, Copilot usually doesn't suggest tests, or if it does, it provides incorrect or unrelated code. In such cases, we need to write comments to guide Copilot, break functions into smaller pieces, or write code manually and let Copilot suggest the rest.

- We still need to verify the correctness of the generated code.

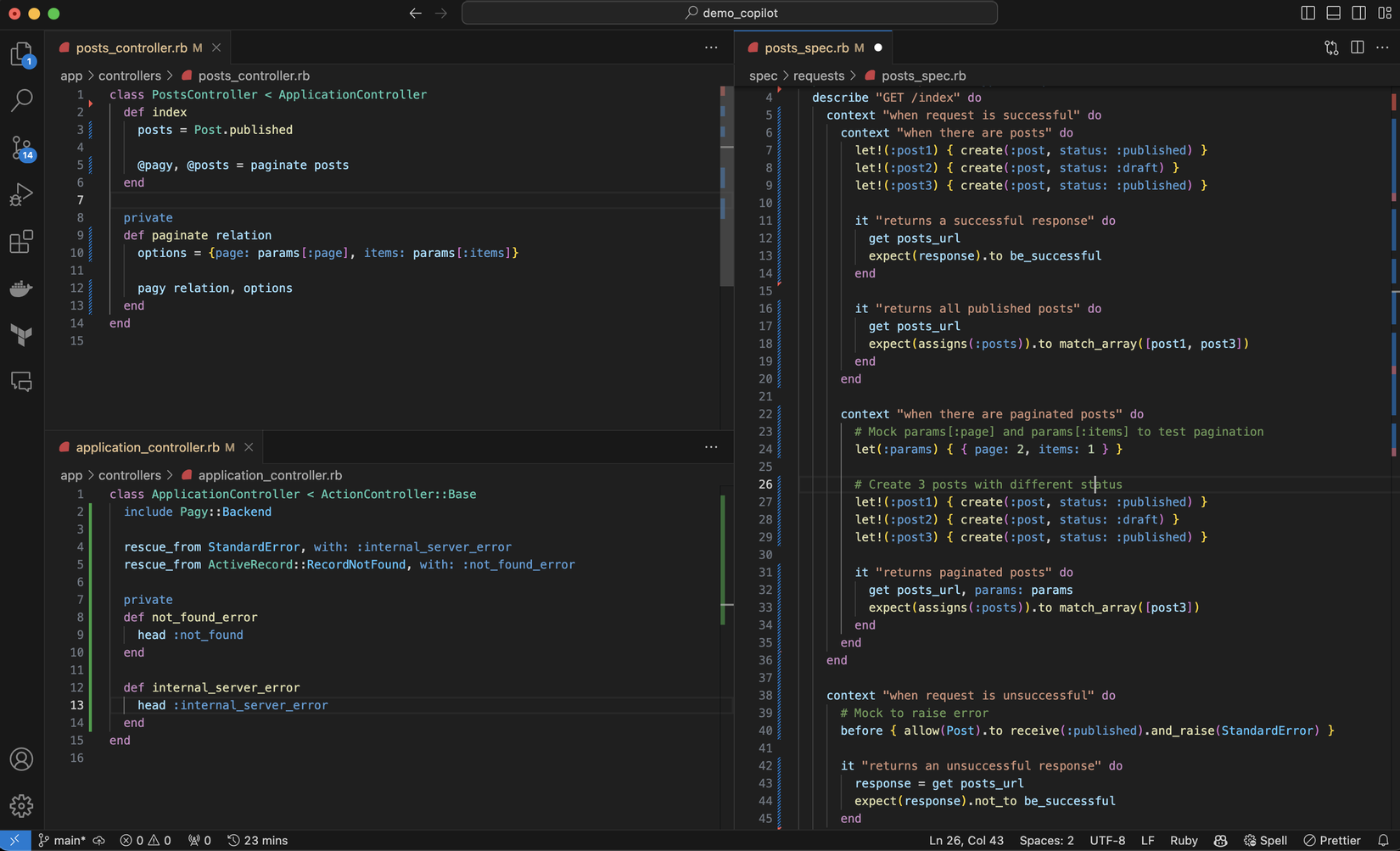

Controller

To demonstrate generating tests for a controller, I tried it with the index function, which retrieves a list of published posts and paginates them.

Initially, due to a lack of context, Copilot only generated simple test cases (checking for a successful request). However, after being guided by my test-writing idea, Copilot caught up very quickly.

- Copilot learns very fast. We only need to provide an example once, and it can pick up the code style, naming conventions, comments, and even test-writing styles. This significantly helps maintain code consistency.

- When writing tests, Copilot can actively apply Mock/Stub techniques.

I personally find that Copilot provides the best support for writing Controller tests. The reason is that Controller tests follow a common format with a lot of repetition, and this is Copilot's strong suit - handling repeated snippets.

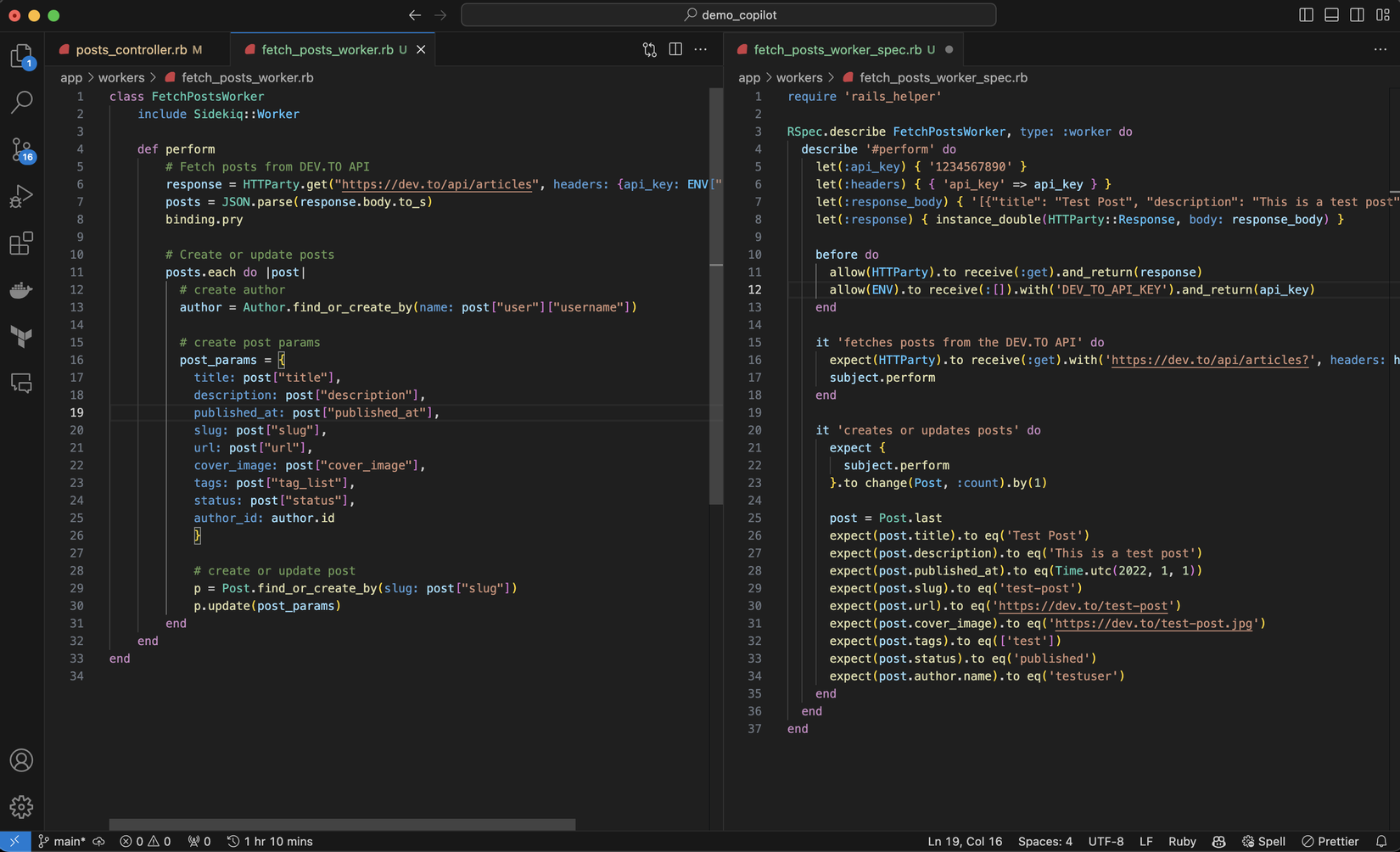

Workers/Services and Others

For Workers and Services, we return to the problem of writing Unit Tests for regular Ruby classes.

Here, I tried writing a piece of code to call dev.to to get the latest post and import it into the database.

The logic is not particularly complex, so Copilot handles it quite well, especially in terms of mocking/stubbing.
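To make the mocking and stubbing idea concrete, here is a minimal plain-Ruby sketch of that kind of service. It is not the code from my project: the class names, the injected http_client and repository, the endpoint URL, and the JSON payload are all assumptions for illustration. The point is that the HTTP call is replaced by a stub, so the test never touches the network.

```ruby
require "json"

# Hypothetical service: fetches the newest article and stores it.
class LatestPostImporter
  # Assumed endpoint; check the dev.to API documentation before relying on it.
  LATEST_URL = "https://dev.to/api/articles/latest"

  def initialize(http_client:, repository:)
    @http_client = http_client # anything that responds to get(url)
    @repository = repository   # anything that responds to <<
  end

  # Imports the first article in the response; returns nil if there is none.
  def call
    latest = JSON.parse(@http_client.get(LATEST_URL)).first
    return nil if latest.nil?

    record = { title: latest["title"], url: latest["url"] }
    @repository << record
    record
  end
end

# Hand-rolled stub: returns a canned body and records the requested URLs.
class StubHttpClient
  attr_reader :requested_urls

  def initialize(body)
    @body = body
    @requested_urls = []
  end

  def get(url)
    @requested_urls << url
    @body
  end
end

# Runs the importer against a stubbed response and returns what happened.
def run_import_example(body)
  client = StubHttpClient.new(body)
  repository = []
  result = LatestPostImporter.new(http_client: client, repository: repository).call
  { result: result, repository: repository, requested_urls: client.requested_urls }
end

outcome = run_import_example('[{"title":"Hello Copilot","url":"https://dev.to/example/hello"}]')
puts outcome[:repository].inspect
```

In an RSpec suite, the hand-rolled stub would typically be replaced by a test double set up with allow(...).to receive(...), which is the style Copilot tends to suggest.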

Real-Life Project

In real-world scenarios, I often encounter a few issues when using Copilot to generate tests for services, workers, custom libraries, and more:

- If the business logic in these classes is complex, Copilot struggles to generate test cases for the entire file, leading to a lack of test cases and insufficient coverage.

- Copilot generates good tests when I write a class from scratch. However, in cases where I need to fix bugs, update business logic, refactor, or work on existing code, it often creates tests for the entire file rather than focusing on the part I've just modified.

To address these issues, consider the following:

- Break down the logic into smaller functions: Copilot generates better tests for code segments with less complex logic.

- Don't chase Copilot; let Copilot follow you: Fundamentally, Copilot is just a code-writing tool, and the most crucial part is you. So, don't rely solely on Copilot; start writing code, and let Copilot support you.

- Don't trust Copilot 100%, especially when it suggests long sections of code.

Summary

Copilot is proving to be a powerful ally, capable of supporting every step in the coding cycle: breaking down problems, writing unit tests, writing code, creating documentation, refactoring code, and more.

In particular, GitHub is continually developing and improving Copilot every day to introduce new features. Who knows, a few years from now, in addition to TDD and BDD, we might have CDD - Copilot Driven Development.

However, for now, I still hold the belief:

Technology cannot completely replace humans, but those who know how to use technology can replace those who don't.

So, if you get the chance, give Copilot a try, folks. :v

Key Takeaways

- Copilot provides good support for writing unit tests, especially for simple or repeated code.

- Copilot boosts productivity (I've found it increases productivity by around 40% when writing unit tests), allowing us more time to focus on logic problems rather than typing.

- Sometimes, Copilot may suggest unrelated code (occasionally even code with syntax errors). Therefore, don't rely too heavily on Copilot. Instead, guide it with prompts, double-check the code, and take responsibility for the final results.

