Scaling Horizons: Mastering Load Balancing in System Design


1. Introduction to Load Balancing

1.1 What is Load Balancing?

Load balancing is a crucial concept in modern distributed systems. It refers to distributing incoming network traffic across multiple servers so that no single server is overwhelmed. By spreading the load, load balancing helps increase the availability, reliability, and performance of applications or websites, which in turn gives users a smoother experience.

1.2 Why is Load Balancing Important?

As the number of users and the complexity of applications increase, the need for efficient load balancing becomes more critical. Some reasons why load balancing is essential in system design are:

- High Availability: If one server fails, the load balancer automatically redirects traffic to the other available servers, minimizing service disruption.

- Scalability: Load balancing allows system designers to add servers to, or remove servers from, the system depending on the traffic load, thus providing flexibility in handling varying workloads.

- Redundancy: Load balancing provides redundancy by distributing the load across multiple servers, thus preventing a single point of failure in the system.

- Performance: By distributing the workload across multiple servers, load balancing can reduce the response time and improve the overall performance of the system.

2. Load Balancer Components

2.1 Types of Load Balancers

Load balancers can be broadly classified into two categories:

- Hardware Load Balancers: These are dedicated physical devices designed to perform load balancing. They offer high performance and reliability but can be expensive and challenging to scale.

- Software Load Balancers: These are applications or services that run on general-purpose hardware. They are cost-effective, easily scalable, and can be deployed on-premises or in the cloud.

2.2 Load Balancer Algorithms

Load balancers use various algorithms to distribute the incoming traffic across multiple servers. Some common load balancing algorithms are:

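- Round Robin: This algorithm hands requests to the servers in a fixed cyclical order, so each server receives roughly the same number of requests. It is simple to implement but does not account for differences in server capacity or in the cost of individual requests.

- Least Connections: This algorithm assigns each request to the server with the fewest active connections. It suits workloads where requests vary in duration, since a server tied up with long-lived connections receives fewer new ones. A weighted variant can additionally account for servers with different processing capabilities.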

- Least Response Time: This algorithm directs traffic to the server with the lowest response time, considering both the number of active connections and the server's latency.

- IP Hash: This algorithm hashes the client's IP address to determine the server to which the request should be directed. As long as the set of servers does not change, a client is consistently directed to the same server, which allows for session persistence.
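To make the selection rules above concrete, here is a minimal Python sketch of three of them: Round Robin, Least Connections, and IP Hash. The class names, the `pick`/`release` methods, and the use of SHA-256 are illustrative assumptions, not the API of any particular load balancer.

```python
import hashlib
from itertools import cycle


class RoundRobin:
    """Hand out servers in a fixed cyclical order."""

    def __init__(self, servers):
        self._cycle = cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server (tracked by the balancer itself).
        self.active = {server: 0 for server in servers}

    def pick(self):
        # Ties are broken by insertion order of the servers.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` is closed.
        self.active[server] -= 1


class IPHash:
    """Map a client IP to a server through a stable hash."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # SHA-256 is an assumption here; any stable hash would do.
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]
```

Note that in this sketch the IP Hash mapping depends on `len(self.servers)`, so adding or removing a server remaps many clients. Real deployments often reach for consistent hashing to limit that effect.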

2.3 Health Checks and Monitoring

Load balancers continuously monitor the health and performance of the servers in the system. They perform regular health checks to ensure that the servers are operational and can handle incoming traffic. If a server is deemed unhealthy, the load balancer stops directing traffic to it and redistributes the load among the remaining healthy servers.
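The health-check loop described above can be sketched as follows. This is a simplified illustration: the `HealthChecker` class, the injected `probe` callable, and the `unhealthy_after`/`healthy_after` thresholds are hypothetical names chosen for the example. In practice the probe would typically be a TCP connect or an HTTP request to a health endpoint.

```python
class HealthChecker:
    """Track server health from repeated probe results.

    A server changes state only after several consecutive probe results
    that contradict its current state, which avoids flapping on a single
    failed (or lucky) probe.
    """

    def __init__(self, servers, probe, unhealthy_after=3, healthy_after=2):
        self.probe = probe                      # callable: server -> bool
        self.unhealthy_after = unhealthy_after  # failures before marking down
        self.healthy_after = healthy_after      # successes before marking up
        self.healthy = {server: True for server in servers}
        self._streak = {server: 0 for server in servers}

    def run_once(self):
        """Probe every server once and update its state."""
        for server, is_healthy in list(self.healthy.items()):
            ok = bool(self.probe(server))
            if ok == is_healthy:
                self._streak[server] = 0
                continue
            self._streak[server] += 1
            threshold = self.healthy_after if ok else self.unhealthy_after
            if self._streak[server] >= threshold:
                self.healthy[server] = ok
                self._streak[server] = 0

    def healthy_servers(self):
        """Servers that should currently receive traffic."""
        return [server for server, ok in self.healthy.items() if ok]
```

A balancer would call `run_once()` on a timer and pass only `healthy_servers()` to its selection algorithm.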

3. Load Balancing Techniques

3.1 Layer 4 Load Balancing

Layer 4 load balancing, also known as transport layer load balancing, operates at the transport layer (TCP/UDP) of the OSI model. It makes routing decisions based on the source and destination IP addresses and ports. Layer 4 load balancing offers high performance and low latency because it does not inspect the packet payload. For the same reason, it cannot make decisions based on application-specific data such as URLs or headers.
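As a rough illustration of a Layer 4 decision, the sketch below picks a backend using only connection-level fields (protocol, addresses, ports) and never looks at the payload. The `Flow` type and `pick_backend_l4` function are hypothetical names, and hashing the tuple is just one possible strategy.

```python
import hashlib
from typing import NamedTuple


class Flow(NamedTuple):
    """The connection-level fields visible at the transport layer."""
    protocol: str   # "tcp" or "udp"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_backend_l4(flow, backends):
    """Choose a backend from the flow tuple alone, ignoring packet contents."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


flow = Flow("tcp", "203.0.113.7", 51844, "198.51.100.10", 443)
backend = pick_backend_l4(flow, ["10.0.0.1", "10.0.0.2", "10.0.0.3"])
```

Because every packet of a connection carries the same tuple, all of them land on the same backend without the balancer keeping per-request state.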

3.2 Layer 7 Load Balancing

Layer 7 load balancing, also known as application layer load balancing, operates at the application layer of the OSI model. It makes routing decisions based on the content of the messages, such as HTTP headers, cookies, or data within the message. Layer 7 load balancing offers more advanced routing capabilities, enabling features like content-based routing, URL rewriting, and session persistence. However, it may introduce higher latency due to the additional processing required to analyze the application data.
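By contrast, a Layer 7 decision can look inside the request. The sketch below routes on the URL path and on a request header. The function name, the pool names, and the `X-Canary` header are made up for illustration and do not correspond to any specific product.

```python
def pick_pool_l7(path, headers, routes, default_pool):
    """Choose a server pool from application-level request data.

    `routes` is an ordered list of (path_prefix, pool_name) pairs;
    the first matching prefix wins.
    """
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}

    # Hypothetical header-based rule: opt-in traffic goes to a canary pool.
    if normalized.get("x-canary") == "true":
        return "canary-pool"

    # Content-based routing on the URL path.
    for prefix, pool in routes:
        if path.startswith(prefix):
            return pool
    return default_pool


routes = [("/api/", "api-pool"), ("/static/", "static-pool")]
pool = pick_pool_l7("/api/users", {"Accept": "application/json"}, routes, "web-pool")
```

Once a pool is chosen, any of the selection algorithms from section 2.2 can pick the individual server within it.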

4. Load Balancing Strategies

4.1 Horizontal Scaling

Horizontal scaling involves adding servers to, or removing servers from, the system as the traffic load increases or decreases. This approach lets the system accommodate varying workloads without changing the individual servers. Horizontal scaling can be implemented using various techniques, including auto-scaling groups in cloud environments and container orchestration systems like Kubernetes.
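One way to decide how many servers to run is a proportional rule: scale the server count by the ratio of observed load to target load, then clamp it to configured bounds. The sketch below is an assumption-laden illustration of that idea, similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, parameters, and default bounds are hypothetical.

```python
import math


def desired_servers(current_servers, current_load, target_load,
                    min_servers=1, max_servers=10):
    """Return how many servers to run so per-server load approaches the target.

    `current_load` and `target_load` are per-server averages of the same
    metric (for example CPU percent or requests per second).
    """
    raw = math.ceil(current_servers * current_load / target_load)
    # Never scale below the floor or above the ceiling.
    return max(min_servers, min(max_servers, raw))


# Four servers at 90% average CPU with a 60% target suggests six servers.
scale_out = desired_servers(4, current_load=90, target_load=60)
```

Real autoscalers usually add cooldown periods and tolerance bands around the target so that small fluctuations do not cause constant scaling.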

4.2 Vertical Scaling

Vertical scaling involves increasing the resources (CPU, memory, storage) of an existing server to handle the increased workload. While this approach can provide immediate performance improvements, it has limitations in terms of scalability, as there is a maximum threshold to the resources that can be allocated to a single server.

4.3 Hybrid Scaling

Hybrid scaling combines both horizontal and vertical scaling strategies to optimize the performance and scalability of a system. By leveraging the benefits of both approaches, hybrid scaling ensures that the system can adapt to changing workloads and provide consistent performance.

5. Load Balancing in Cloud Environments

Cloud environments offer various managed load balancing services that can be easily integrated into system architectures. Some popular cloud-based load balancing services are:

- Amazon Web Services (AWS) Elastic Load Balancing (ELB): AWS ELB offers both Layer 4 (Network Load Balancer) and Layer 7 (Application Load Balancer) load balancing options. It provides features like automatic scaling, health checks, and integration with other AWS services.

- Google Cloud Load Balancing: Google Cloud offers global and regional load balancing solutions with support for both Layer 4 and Layer 7 load balancing. It also provides advanced features like autoscaling, content-based routing, and SSL offloading.

- Microsoft Azure Load Balancer: Azure Load Balancer supports Layer 4 load balancing and offers features like health probes, session persistence, and integration with Azure Virtual Machines and other Azure services.

6. Conclusion

Load balancing is a critical component of modern distributed systems, ensuring high availability, scalability, and performance. By understanding the various load balancing concepts and techniques, system designers can make informed decisions when designing and implementing load balancing solutions for their applications.

By selecting the appropriate load balancing algorithms, techniques, and strategies, system designers can build robust and resilient systems capable of handling varying workloads and delivering a seamless user experience. With the availability of managed load balancing services in cloud environments, implementing efficient load balancing solutions has become more accessible than ever, allowing businesses to focus on delivering value to their users.

I hope you enjoyed this article and learned something new.

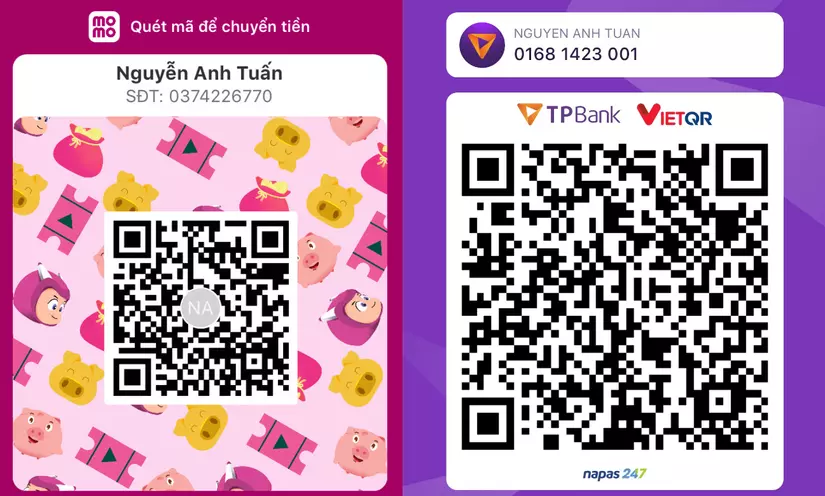

