Implementing multilingual full-text search with fuzziness and highlighting

This post has not been updated in 4 years.

Introduction

Today I would like to share my experience implementing full-text search that tolerates misspellings and highlights the matching text in the query results. There are several ways to approach this task, but the most practical option today is a dedicated search engine built for full-text search; here we will use Elasticsearch. In this article I walk through a simple, minimal schema for storing multilingual content and searching it in several languages.

Installing and configuring plugins

Because I needed to search records in English, Japanese, and Vietnamese, I used several plugins that handle the linguistic peculiarities of each language. If you do not need one of these languages, skip installing its plugin; this article, however, covers the setup for all three. Before installing the plugins, change to the Elasticsearch home directory (its location depends on your operating system; consult the documentation for the exact path):

Plugin for the Vietnamese language

```bash
sudo bin/plugin install https://github.com/duydo/elasticsearch-analysis-vietnamese/files/463094/elasticsearch-analysis-vietnamese-2.4.0.zip
```

Releases of the plugin for other Elasticsearch versions are available on its GitHub page.

Plugin for the Japanese language

```bash
sudo bin/plugin install https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/analysis-kuromoji/2.4.0/analysis-kuromoji-2.4.0.zip
```

Documentation page of the plugin

Plugin for extended Unicode support (ICU)

```bash
sudo bin/plugin install https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/analysis-icu/2.4.0/analysis-icu-2.4.0.zip
```

Documentation page of the plugin

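Please note that starting with Elasticsearch 5, plugins are installed with `sudo bin/elasticsearch-plugin install` instead. Also make sure the plugin release is compatible with your Elasticsearch version: a plugin is built against a specific Elasticsearch release and may be rejected at startup if the versions differ, so the safest choice is the plugin release that matches your Elasticsearch version exactly (for example, the 2.4.0 plugins above for Elasticsearch 2.4.0).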
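Since only the name of the install script differs between Elasticsearch 2.x and 5.x and later, a tiny helper can pick it from the version string. This is a minimal sketch; `install_command` is a hypothetical name, it assumes Elasticsearch 2.x or later, and it assumes official plugins such as `analysis-icu` and `analysis-kuromoji` are given by their short name while third-party plugins (like the Vietnamese one) are given as a full URL.

```python
def install_command(es_version: str, plugin: str) -> str:
    """Return the shell command that installs `plugin` for the given version.

    Hypothetical helper; assumes Elasticsearch 2.x or later. 2.x ships the
    bin/plugin script, 5.x and later ship bin/elasticsearch-plugin.
    """
    major = int(es_version.split(".")[0])
    tool = "bin/plugin" if major < 5 else "bin/elasticsearch-plugin"
    return f"sudo {tool} install {plugin}"


if __name__ == "__main__":
    # Official plugins can be given by name; third-party ones by URL.
    print(install_command("2.4.0", "analysis-kuromoji"))
    print(install_command("5.6.0", "analysis-icu"))
```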

Configuring indices for multilingual content

So, I hope the plugins installed without problems. If you do run into any, please describe them in the comments to this article and I will try to help you solve them.

Moving on. Next we need to define the index settings and the corresponding mappings, i.e. the data schema that determines how each field is stored and analyzed. Settings are defined separately for each index.

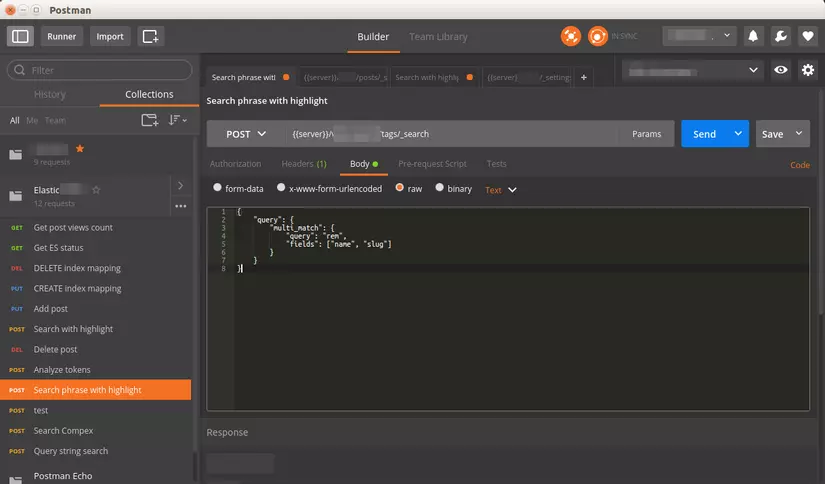

To send requests to Elasticsearch I recommend Postman, an extension for the Chrome browser. It lets you call the Elasticsearch REST API with any HTTP method.
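If you prefer scripting to Postman, the same requests can be prepared with Python's standard library. A minimal sketch, assuming a node at `http://localhost:9200` (the default HTTP port); `es_request` is a hypothetical helper that only builds the request, so you can inspect it before sending it with `urllib.request.urlopen`.

```python
import json
import urllib.request

# Assumption: Elasticsearch listens on localhost:9200 (the default HTTP port).
ES_URL = "http://localhost:9200"


def es_request(method: str, path: str, body=None) -> urllib.request.Request:
    """Prepare (but do not send) an HTTP request to the Elasticsearch REST API."""
    data = None
    headers = {}
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(ES_URL + path, data=data, headers=headers, method=method)


if __name__ == "__main__":
    req = es_request("GET", "/en/posts/_search", {"query": {"match_all": {}}})
    print(req.get_method(), req.full_url)
    # To actually send it against a running node:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```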

Summing up my research on storing multilingual content: each language should be stored in its own index. For example, English entries go to the index and type at /en/posts, Japanese entries to /ja/posts, and so on.

First, this structure lets you update the data schema safely and reindex faster when necessary.

Second, you do not depend on the data structures of other types that contain a field with the same name. Within a single index, fields with the same name in different types must have identical mappings, so adding a type with a same-named field forces you to reproduce that field's structure exactly, which is sometimes inconvenient.

Third, you can search one index or several at once, so this structure does not limit how you implement your tasks.
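The index-per-language scheme is simple enough to capture in a couple of helpers. A minimal sketch, assuming the three language codes and the `posts` type used in this article; the function names are hypothetical. Searching several indices at once only requires a comma-separated list of index names in the URL.

```python
SUPPORTED_LANGUAGES = ("en", "ja", "vi")  # one index per language
DOC_TYPE = "posts"


def _check(lang: str) -> None:
    if lang not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language: {lang}")


def index_path(lang: str, suffix: str = "") -> str:
    """Return the /<lang>/posts path for one language, e.g. /ja/posts/_bulk."""
    _check(lang)
    return f"/{lang}/{DOC_TYPE}{suffix}"


def search_path(langs=SUPPORTED_LANGUAGES) -> str:
    """Search one index or several by joining the index names with commas."""
    for lang in langs:
        _check(lang)
    return f"/{','.join(langs)}/{DOC_TYPE}/_search"


if __name__ == "__main__":
    print(index_path("ja", "/_bulk"))
    print(search_path())
```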

Below are the settings for the English index:

```
PUT /en
{
  "settings": {
    "analysis": {
      "filter": {
        "english_stemmer": {
          "type": "stemmer",
          "language": "english"
        },
        "english_stop_words": {
          "type": "stop",
          "stopwords": ["_english_"]
        }
      },
      "analyzer": {
        "en_analyzer": {
          "type": "custom",
          "tokenizer": "standard",
          "filter": ["lowercase"],
          "char_filter": ["html_strip"]
        }
      }
    }
  }
}
```

For Japanese:

```
PUT /ja
{
  "settings": {
    "analysis": {
      "filter": {
        "japanese_stop_words": {
          "type": "kuromoji_part_of_speech",
          "stoptags": [
            "助詞-格助詞-一般",
            "助詞-終助詞"
          ]
        }
      },
      "analyzer": {
        "ja_analyzer": {
          "type": "custom",
          "tokenizer": "kuromoji_tokenizer",
          "filter": ["kuromoji_baseform", "icu_folding"],
          "char_filter": ["html_strip", "icu_normalizer"]
        }
      }
    }
  }
}
```

For Vietnamese:

```
PUT /vi
{
  "settings": {
    "analysis": {
      "analyzer": {
        "vi_analyzer": {
          "type": "custom",
          "tokenizer": "icu_tokenizer",
          "filter": ["lowercase", "icu_folding"],
          "char_filter": ["html_strip"]
        }
      }
    }
  }
}
```
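A declared token filter only takes effect if an analyzer lists it in its filter chain. In the English settings, `english_stemmer` and `english_stop_words` are declared but `en_analyzer` applies only `lowercase`; likewise, `japanese_stop_words` is not referenced by `ja_analyzer`. The check below is a minimal sketch (the function name is hypothetical) that inspects only the settings JSON and reports such filters before you create the index. If you do want stemming or stop-word removal, add the filter names to the analyzer's `filter` list.

```python
def unused_filters(settings: dict) -> set:
    """Return custom token filters that no analyzer in the index references."""
    analysis = settings["settings"]["analysis"]
    declared = set(analysis.get("filter", {}))
    used = set()
    for analyzer in analysis.get("analyzer", {}).values():
        used.update(analyzer.get("filter", []))
    return declared - used


# The English index settings from above, as a Python dict.
EN_SETTINGS = {
    "settings": {
        "analysis": {
            "filter": {
                "english_stemmer": {"type": "stemmer", "language": "english"},
                "english_stop_words": {"type": "stop", "stopwords": ["_english_"]},
            },
            "analyzer": {
                "en_analyzer": {
                    "type": "custom",
                    "tokenizer": "standard",
                    "filter": ["lowercase"],
                    "char_filter": ["html_strip"],
                }
            },
        }
    }
}

if __name__ == "__main__":
    print(sorted(unused_filters(EN_SETTINGS)))  # ['english_stemmer', 'english_stop_words']
```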

Next, for each language create a posts type that will hold the content of the records. Below is a short excerpt of the mappings so you can see how they differ.

Send the following requests via Postman:

```
PUT /en/_mapping/posts
{
  "properties": {
    "slug": {
      "type": "string",
      "index": "not_analyzed"
    },
    "title": {
      "type": "string",
      "analyzer": "en_analyzer"
    },
    "contents": {
      "type": "string",
      "analyzer": "en_analyzer"
    },
    "status": {
      "type": "string",
      "index": "not_analyzed",
      "include_in_all": false
    },
    "created_at": {
      "type": "date",
      "index": "not_analyzed"
    },
    "updated_at": {
      "type": "date",
      "index": "not_analyzed"
    }
  }
}
```

```
PUT /ja/_mapping/posts
{
  "properties": {
    "slug": {
      "type": "string",
      "index": "not_analyzed"
    },
    "title": {
      "type": "string",
      "analyzer": "ja_analyzer"
    },
    "contents": {
      "type": "string",
      "analyzer": "ja_analyzer"
    },
    "status": {
      "type": "string",
      "index": "not_analyzed",
      "include_in_all": false
    },
    "created_at": {
      "type": "date",
      "index": "not_analyzed"
    },
    "updated_at": {
      "type": "date",
      "index": "not_analyzed"
    }
  }
}
```

```
PUT /vi/_mapping/posts
{
  "properties": {
    "slug": {
      "type": "string",
      "index": "not_analyzed"
    },
    "title": {
      "type": "string",
      "analyzer": "vi_analyzer"
    },
    "contents": {
      "type": "string",
      "analyzer": "vi_analyzer"
    },
    "status": {
      "type": "string",
      "index": "not_analyzed",
      "include_in_all": false
    },
    "created_at": {
      "type": "date",
      "index": "not_analyzed"
    },
    "updated_at": {
      "type": "date",
      "index": "not_analyzed"
    }
  }
}
```
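Since the three mappings differ only in the analyzer name, they can be generated from one template. A minimal sketch with a hypothetical function name; it reproduces the Elasticsearch 2.x syntax used above (`string` with `not_analyzed`), which Elasticsearch 5.x and later replaced with the `text` and `keyword` types.

```python
def posts_mapping(analyzer: str) -> dict:
    """Build the posts mapping shown above; only the analyzer differs per language."""
    exact = {"type": "string", "index": "not_analyzed"}      # stored as-is, not tokenized
    full_text = {"type": "string", "analyzer": analyzer}     # language-specific analysis
    date = {"type": "date", "index": "not_analyzed"}
    return {
        "properties": {
            "slug": dict(exact),
            "title": dict(full_text),
            "contents": dict(full_text),
            "status": {**exact, "include_in_all": False},
            "created_at": dict(date),
            "updated_at": dict(date),
        }
    }


# One mapping per language index, e.g. the body for PUT /ja/_mapping/posts.
MAPPINGS = {lang: posts_mapping(f"{lang}_analyzer") for lang in ("en", "ja", "vi")}
```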

Indexing (Bulk API)

Once the schema is in place, index your data from the primary storage (a database or any other data source). I recommend the Bulk API: it simplifies working with complex data and, more importantly, executes operations several times faster than sending them as individual requests. Prepare your data in a file, or send it directly to the bulk endpoints:

```
POST /en/posts/_bulk
POST /ja/posts/_bulk
POST /vi/posts/_bulk
```

Documentation page for the Bulk API
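The Bulk API body is newline-delimited JSON: an action line followed by the document source, with a trailing newline at the end. A minimal sketch of building it, assuming each record already has an ID from the primary storage; `bulk_body` is a hypothetical name. Because the index and type are in the URL (e.g. `POST /en/posts/_bulk`), the action line only needs `_id`.

```python
import json


def bulk_body(docs) -> str:
    """Serialize (id, source) pairs into a Bulk API request body.

    Each document becomes two lines: an "index" action and the source itself.
    The body must end with a newline.
    """
    lines = []
    for doc_id, source in docs:
        lines.append(json.dumps({"index": {"_id": doc_id}}))
        # ensure_ascii=False keeps Japanese/Vietnamese text readable;
        # encode the result as UTF-8 before sending it.
        lines.append(json.dumps(source, ensure_ascii=False))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(bulk_body([("1", {"title": "Hello", "status": "published"})]), end="")
```

Send one body per language to the matching endpoint, so that English records go to `/en/posts/_bulk`, Japanese ones to `/ja/posts/_bulk`, and so on.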

Search: an example query with highlighting

Now it is time to run a search query that returns not only the relevant documents but also highlights the matches in the response.

```
GET /en,ja,vi/posts/_search
{
  "query": {
    "multi_match": {
      "query": "something to search",
      "fields": ["title", "contents"],
      "fuzziness": "AUTO"
    }
  },
  "highlight": {
    "pre_tags": ["<b>"],
    "post_tags": ["</b>"],
    "fields": {
      "title": {},
      "contents": {}
    }
  }
}
```
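When the query text comes from user input, it is convenient to build this body in code. A minimal sketch with a hypothetical function name, defaulting to the fields, fuzziness, and tags used in the request above.

```python
def search_body(text: str, fields=("title", "contents"), fuzziness="AUTO",
                pre_tag="<b>", post_tag="</b>") -> dict:
    """Build a fuzzy multi_match query with highlighting on the same fields."""
    return {
        "query": {
            "multi_match": {
                "query": text,
                "fields": list(fields),
                "fuzziness": fuzziness,
            }
        },
        "highlight": {
            "pre_tags": [pre_tag],
            "post_tags": [post_tag],
            # An empty object means "highlight this field with default options".
            "fields": {field: {} for field in fields},
        },
    }
```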

See the documentation for details on fuzzy queries.

The fuzziness parameter sets how many edits (changed, inserted, or deleted characters) a term may differ by and still match. In my example it is "AUTO", which chooses the allowed edit distance from the length of each term. You can set your own value, but avoid large values for short terms, or you will get completely irrelevant results.
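To see what "AUTO" means in practice, the sketch below reproduces its documented length rule (terms of 0 to 2 characters must match exactly, 3 to 5 characters allow one edit, longer terms allow two) and applies it with a plain Levenshtein distance. This is a simplified illustration, not Lucene's implementation: Elasticsearch applies fuzziness to the analyzed query terms, and depending on version and settings it may also count a transposition of two adjacent characters as a single edit. The function names are hypothetical.

```python
def auto_fuzziness(term: str) -> int:
    """Maximum edit distance that fuzziness "AUTO" allows for a term of this length."""
    n = len(term)
    if n <= 2:
        return 0
    if n <= 5:
        return 1
    return 2


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def fuzzy_match(query_term: str, indexed_term: str) -> bool:
    """True if the indexed term is within the AUTO distance of the query term."""
    return levenshtein(query_term, indexed_term) <= auto_fuzziness(query_term)


if __name__ == "__main__":
    print(fuzzy_match("serch", "search"))  # one missing letter in a 5-letter term
    print(fuzzy_match("at", "an"))         # 2-letter terms must match exactly
```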

The highlight parameter lets you wrap the matches in the search results in your own tags and set which fields to highlight, the length of the fragments that contain a match, and other options.
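In the response, each hit carries a `highlight` object that maps a field name to a list of fragments with your tags inserted; per-field options such as `fragment_size` and `number_of_fragments` control how those fragments are cut. A field that did not match has no entry in `highlight`, so the minimal sketch below (hypothetical function name) falls back to the raw `_source` value. The sample response in the usage is made up and contains only the keys the function reads.

```python
def highlighted_snippets(response: dict, field: str):
    """Yield (index, id, snippet) per hit, preferring highlighted fragments.

    Falls back to the raw _source value when the hit has no highlight for
    this field (for example, when it matched on another field).
    """
    for hit in response.get("hits", {}).get("hits", []):
        fragments = hit.get("highlight", {}).get(field)
        if fragments:
            snippet = " ... ".join(fragments)
        else:
            snippet = hit.get("_source", {}).get(field, "")
        yield hit["_index"], hit["_id"], snippet


if __name__ == "__main__":
    sample = {"hits": {"hits": [
        {"_index": "en", "_id": "1",
         "_source": {"title": "Elasticsearch guide"},
         "highlight": {"title": ["<b>Elasticsearch</b> guide"]}},
    ]}}
    for index, doc_id, snippet in highlighted_snippets(sample, "title"):
        print(index, doc_id, snippet)
```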

Also note the slop parameter for phrase queries, which sets how far apart the words of the search phrase may be and still count as a match.
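For phrase search, `multi_match` accepts `"type": "phrase"` together with `slop`. A minimal sketch under the same assumptions as the search above (fields `title` and `contents`; hypothetical function name). Fuzziness is deliberately left out, since recent Elasticsearch versions do not support it for phrase-type queries.

```python
def phrase_search_body(text: str, fields=("title", "contents"), slop: int = 2) -> dict:
    """Phrase query: slop=0 requires the exact phrase; larger values let the
    words be further apart (and, with enough slop, in a different order)."""
    return {
        "query": {
            "multi_match": {
                "query": text,
                "type": "phrase",
                "fields": list(fields),
                "slop": slop,
            }
        }
    }
```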

You can learn more about these options on the documentation page for highlighting.

Conclusion

I hope this article helps you begin your journey into the world of search engines and that you will be able to contribute to their development, because in the age of information technology the ability to find information is extremely valuable. If you have any questions or run into difficulties implementing the example, leave a comment; I will be glad to expand this article and share more of my experience.

All rights reserved