Gemini 3 Flash vs Gemini 3 Pro: Price, Speed & Reasoning

Google’s late-2025 Gemini 3 family now contains two distinctly positioned models for developers and power users: Gemini 3 Flash — optimized for raw throughput, low latency, and cost efficiency — and Gemini 3 Pro — optimized for the deepest multimodal reasoning, largest context windows and highest benchmark ceilings. In practical terms, Flash is designed to shift the “productive-flow” frontier for high-frequency developer and interactive applications; Pro is designed to maximize single-query intelligence and handle very large or complex multimodal inputs. The tradeoffs are straightforward and measurable: Flash delivers substantially lower latency and materially lower per-token costs while keeping much of Gemini 3’s reasoning ability; Pro delivers the highest benchmark scores, the most advanced modes (e.g., Deep Think), and larger safety-guarded capabilities at higher cost and latency.

What is Gemini 3 Flash?

(And what problems is it built to solve?)

Gemini 3 Flash is Google’s newest “speed-first” member of the Gemini 3 family. Announced and rolled out in mid-December 2025, Flash is explicitly optimized for low latency, token efficiency and wide accessibility: it became the default model in the Gemini app and AI Mode in Google Search, and is exposed to developers through the Gemini API, Google AI Studio, Vertex AI and the Gemini CLI. The stated design goals are to bring “Pro-grade reasoning” at Flash-level speed and a materially lower price point so high-frequency and interactive use cases (coding assistants, real-time multimodal apps, search’s AI Mode, live CLI interactions) can run at scale.

Core strengths of Flash

- Latency and throughput: engineered for short turn times and high request rates (Google positions it as the fastest Gemini 3 family model).

- Token efficiency: Google claims Flash uses fewer tokens for equivalent tasks versus prior Flash/Pro generations, reducing per-request cost.

- Multimodal and agentic capability: despite being “lightweight,” Flash retains Gemini 3’s multimodal reasoning (text, image, audio, video) and supports agentic tool-calling.

What is Gemini 3 Pro?

Gemini 3 Pro is Google’s flagship “depth-first” model in the Gemini 3 family. It’s positioned for the hardest reasoning workloads: deep research, complex long-horizon planning, multi-step agentic workflows, large codebases, and tasks where the last bit of accuracy or reliability materially matters. Pro emphasizes reasoning fidelity, tool integration (streaming function calls, robust tool-calling), and very large context windows (Google advertises high-token tiers for Pro). Pro is available to paying subscribers (Google AI Pro / Ultra tiers) and via enterprise APIs.

Core strengths of Pro

- Reasoning depth and stability: tuned for multi-stage reasoning and lower failure modes on complex benchmarks.

- Large context support: targeted at workflows that need very long context windows (multi-document synthesis, entire repositories, big PDFs).

- Enterprise features and tool-calling: richer support for different tool patterns, grounding and retrieval integrations for production agentic systems.

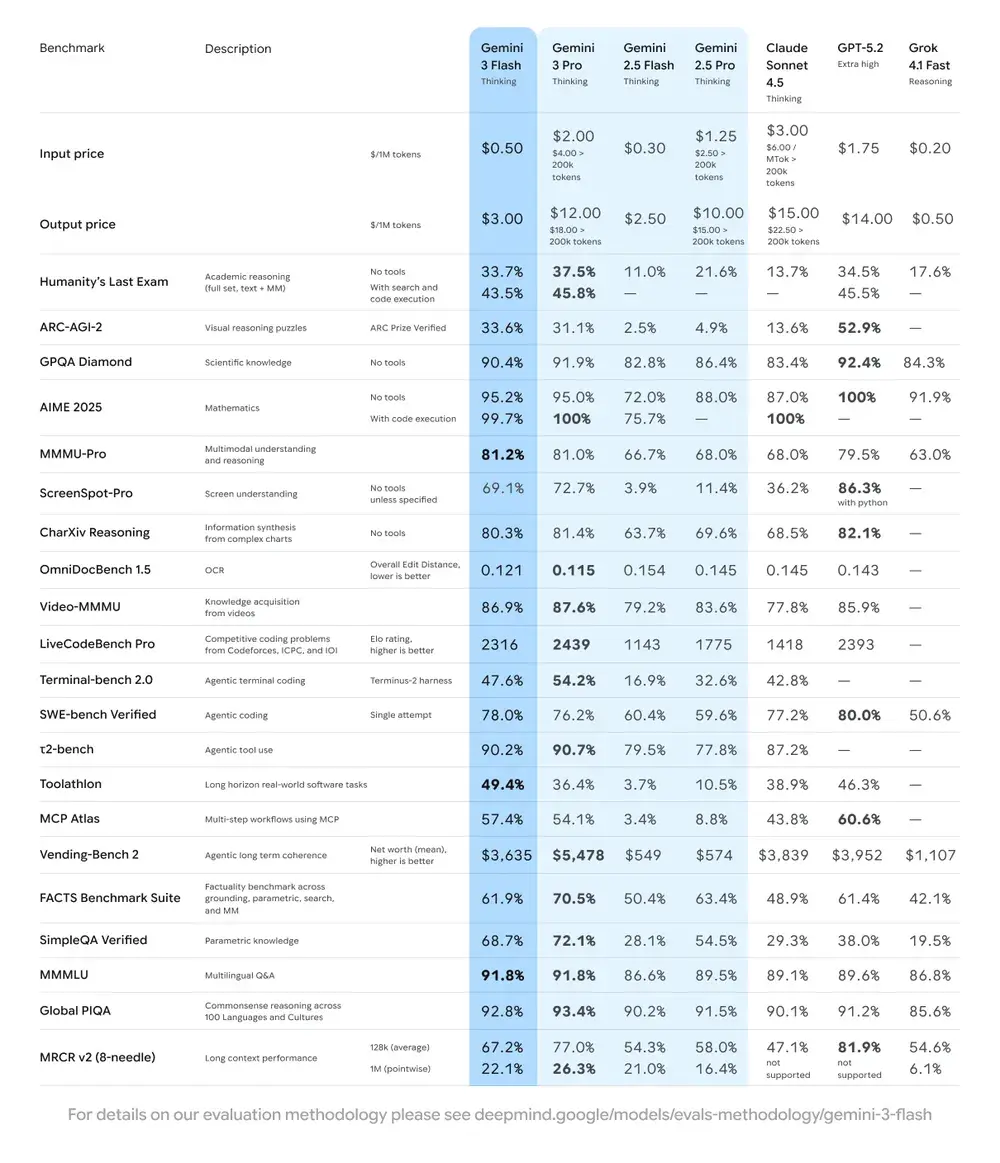

How do Gemini 3 Flash and Gemini 3 Pro perform on benchmarks?

Flash performs exceptionally well for many real-world developer/agentic tasks (often closing the gap on Pro), and in some coding benchmarks even surpasses Pro — while Pro remains the go-to for the hardest reasoning and long-context synthesis tasks.

Benchmarks where Pro leads

- GPQA Diamond (graduate science): Pro ≈ 91.9% (rising to ≈ 93.8% with Deep Think in some runs), demonstrating top performance on graduate-level scientific question sets.

- Terminal-Bench 2.0 (agentic terminal tasks): Pro: 54.2% — a clear lead on tool-use/terminal operation tests compared to earlier models and many peers. This is a key indicator for agentic code / terminal automations.

- ARC-AGI-2 (abstract visual reasoning): Pro shows meaningful improvements over earlier Gemini versions (e.g., Pro 31.1% vs prior 4.9% in older models; Deep Think further raises this). These are large relative gains, even if absolute percentages remain modest for the hardest tasks.

Benchmarks where Flash excels or competes well

- GPQA / MMMU / practical tasks: Early reports show Flash producing very high GPQA-style scores in many runs (reports list GPQA Diamond ≈ 90.4% and MMMU Pro ≈ 81.2% in press coverage), demonstrating that Flash approaches Pro-level accuracy on a wide set of tasks while being far faster and cheaper.

- Coding and short tasks: Flash can be faster and sometimes even outperform Pro on quick, single-turn coding or short evaluation tasks because of lower latency and token efficiency; community tests report Flash scoring higher on selected coding tests while costing far less per run. These community results are early and vary by test harness.

What the numbers mean for reasoning depth

- Absolute ceilings: Gemini 3 Pro still sets the highest ceilings on the most difficult benchmarks (e.g., LMArena Elo, Humanity’s Last Exam with Deep Think). This means if you require the last bit of accuracy on the hardest problems (PhD-level research, novel scientific reasoning, maximum math accuracy), Pro is the safer choice.

- Pareto efficiency: Gemini 3 Flash closes the gap on many practical tasks (QA, coding, multimodal extraction) while delivering large speed/cost gains. For many production tasks that prioritize responsiveness and throughput, Flash represents a better cost-performance tradeoff.

- Score ≠ universal superiority. Benchmarks capture behavior on curated tasks. Flash’s excellent SWE-bench/coding numbers show it’s optimized for structured, agentic tasks and likely benefits from architecture and decoding defaults that match common coding workloads.

- Latency and cost change the practical tradeoff. If a model is slightly better on absolute accuracy but 3× slower and 6× more expensive to run, Flash often becomes the smart choice for production systems where responsiveness and cost matter. Gemini 3 Flash is reported to be roughly 3× faster than the earlier Gemini 2.5 Pro baseline while maintaining high reasoning quality.
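The cost side of this tradeoff can be made concrete with a little arithmetic. The sketch below divides cost per request by accuracy to estimate the expected spend per correct answer. The per-request costs and accuracy figures are hypothetical placeholders chosen to mirror the "slightly better but 6× more expensive" scenario, not measured results for either model.

```python
def cost_per_correct(cost_per_request: float, accuracy: float) -> float:
    """Expected spend to obtain one correct answer, assuming independent retries."""
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in (0, 1]")
    return cost_per_request / accuracy


# Hypothetical numbers for illustration only (not benchmark results):
# the "deep" model is slightly more accurate but 6x more expensive per request.
fast = cost_per_correct(cost_per_request=0.001, accuracy=0.90)
deep = cost_per_correct(cost_per_request=0.006, accuracy=0.93)

print(f"fast: ${fast:.5f} per correct answer")
print(f"deep: ${deep:.5f} per correct answer")
print(f"deep/fast ratio: {deep / fast:.2f}x")
```

Under these assumed figures the small accuracy gain barely dents the 6× price gap. The calculation deliberately ignores the cost of a wrong answer, which is what justifies the more expensive model on high-stakes tasks.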

Gemini 3 Flash vs Gemini 3 Pro: Pricing and specifications

Model technical summary

- Context window (input): Both Gemini 3 Pro and Gemini 3 Flash are published with up to 1,000,000 token input context windows; Pro additionally advertises 64k output and specialized image variants with their own windows. (Note: real-world web UI behavior and rate limits may differ across products.)

- Multimodal inputs supported: text, images, audio, video, and PDFs for both Pro and Flash (with image/video capabilities exposed via Google AI Studio / API / Vertex).

- Special modes: Pro supports Deep Think and Pro-only agentic features (Google Antigravity / tooling) and is used for higher-safety workloads. Flash supports configurable reasoning levels and structured outputs but is optimized for lower latency and cost.

Developer/API pricing (published developer pricing tiers — per 1M tokens)

(Values below are drawn from Google’s Gemini API / model docs published for the Gemini 3 family. They reflect the published preview prices per 1M tokens for input/output; consult billing for the exact production rates you will be charged.)

gemini-3-flash-preview (Flash):

- Input: $0.50 per 1M tokens

- Output: $3.00 per 1M tokens.

gemini-3-pro-preview (Pro):

- Tier A (<200k tokens context): $2 / $12 per 1M tokens (input / output)

- Tier B (>200k tokens context or heavy contexts): $4 / $18 per 1M tokens (input / output); pricing scales upward for very large contexts.

Practical meaning: for equivalent token usage in the common (<200k tokens) band, Flash costs roughly 4× less per token on input and 4× less on output than Pro in the published preview pricing. For large (>200k) contexts, Pro’s costs can be materially higher.
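To see how these rates translate into per-request spend, here is a minimal sketch of a cost estimator using the preview prices quoted in this section (Flash: $0.50 input / $3.00 output; Pro: $2 / $12, rising to $4 / $18 for long contexts). Two things are assumptions rather than published facts: that the Pro tier is selected by input token count, and that a prompt of exactly 200k tokens falls in the lower tier. Check your billing console for the rates that actually apply.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # assumption: Pro tier is chosen by input token count


def rates(model: str, input_tokens: int) -> tuple:
    """Return (input, output) USD per 1M tokens, per the preview prices quoted above."""
    if model == "gemini-3-flash-preview":
        return 0.50, 3.00
    if model == "gemini-3-pro-preview":
        if input_tokens <= LONG_CONTEXT_THRESHOLD:  # boundary handling is an assumption
            return 2.00, 12.00
        return 4.00, 18.00
    raise ValueError(f"no price table for model: {model}")


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request."""
    in_rate, out_rate = rates(model, input_tokens)
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# Example: a 10,000-token prompt with a 2,000-token response.
flash_cost = request_cost("gemini-3-flash-preview", 10_000, 2_000)
pro_cost = request_cost("gemini-3-pro-preview", 10_000, 2_000)
print(f"Flash: ${flash_cost:.4f}  Pro: ${pro_cost:.4f}  ratio: {pro_cost / flash_cost:.1f}x")
```

Because both input and output rates differ by the same factor in the lower tier, the ratio stays at about 4× regardless of the input/output mix; it only widens once a Pro prompt crosses into the long-context tier.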

CometAPI provides API access to Gemini 3 Flash and Gemini 3 Pro, and the API price is discounted.

Consumer / subscription pricing (Gemini app / Google AI plans)

Google AI Pro (the consumer/power tier that unlocks Gemini 3 Pro features in the Gemini app and Workspace integration) is published at $19.99 per month (availability and local currency conversions apply). Google also offers higher-limit "AI Ultra" tiers at a much higher monthly cost for enterprise-grade access.

Gemini 3 Flash vs Gemini 3 Pro: reasoning and multimodal understanding

Reasoning depth: Pro vs Flash

Gemini 3 Pro is consistently presented as the deeper reasoning model. On graduate-level science benchmarks (GPQA Diamond) and agentic tool-use benchmarks (Terminal-Bench 2.0), Pro scores at or near state-of-the-art levels (e.g., GPQA Diamond ≈ 91.9% for Pro with Deep Think improvements to 93.8% on some runs). Those numbers place Pro ahead of many competitors on complex, domain-specific tasks.

Agentic, coding and multimodal synthesis: Gemini 3 Flash’s architectural choices and tuning allow it to perform surprisingly well on some coding and structured-reasoning benchmarks, and in many real tasks the user-visible difference versus Pro is small — especially when “thinking level” API controls are tuned. Independent early tests and press coverage show Gemini 3 Flash matching or exceeding Pro on selected agentic coding benchmarks. But that does not imply Gemini 3 Flash matches Gemini 3 Pro across every long-form research or high-ambiguity reasoning scenario.

Flash, by contrast, is optimized to balance quality and speed. Gemini 3 Flash is described as delivering strong reasoning for the majority of everyday tasks while not matching Pro’s top-end performance on the hardest academic or multi-step problems. The trade-off is explicit: faster responses at slightly shallower chains of reasoning.

Multimodal performance (images/video/audio)

Both Flash and Pro in the Gemini 3 family support multimodal inputs (images, video, audio). Gemini 3 Flash supports very large numbers of images per prompt (up to 900 images per prompt depending on context), file size limits for inline uploads (e.g., 7 MB per file inline, up to 30 MB from Cloud Storage for some deployments), and explicit MIME/type/resolution limits, indicating that Flash’s multimodal interface is production-grade and intended for heavy use. Gemini 3 Pro’s multimodal strengths appear in benchmarks requiring visual reasoning and integrating tools for code/terminal execution. For the most complex visual reasoning tasks, Gemini 3 Pro maintains an edge; for high-throughput multimedia summarization and straightforward vision tasks, Flash can be more cost-effective and faster.
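Limits like these are worth checking client-side before a request is sent. The following is a minimal sketch of such a pre-flight check using the figures quoted above (900 images per prompt, 7 MB inline, 30 MB from Cloud Storage). The `ImagePart` type and `validate_images` helper are hypothetical names invented for illustration, not part of any Google SDK, and treating 1 MB as 1024 × 1024 bytes is an assumption.

```python
from dataclasses import dataclass
from typing import List

MAX_IMAGES_PER_PROMPT = 900          # figure quoted in this article
MAX_INLINE_BYTES = 7 * 1024 * 1024   # 7 MB inline; MB = 1024*1024 bytes is an assumption
MAX_GCS_BYTES = 30 * 1024 * 1024     # 30 MB when loaded from Cloud Storage


@dataclass
class ImagePart:
    """Hypothetical description of one image attached to a prompt."""
    name: str
    size_bytes: int
    from_cloud_storage: bool = False


def validate_images(parts: List[ImagePart]) -> List[str]:
    """Return a list of human-readable problems; an empty list means the batch passes."""
    problems = []
    if len(parts) > MAX_IMAGES_PER_PROMPT:
        problems.append(f"{len(parts)} images exceeds limit of {MAX_IMAGES_PER_PROMPT}")
    for part in parts:
        limit = MAX_GCS_BYTES if part.from_cloud_storage else MAX_INLINE_BYTES
        if part.size_bytes > limit:
            problems.append(f"{part.name}: {part.size_bytes} bytes exceeds limit of {limit}")
    return problems


batch = [
    ImagePart("chart.png", 1_200_000),
    ImagePart("scan.tiff", 8 * 1024 * 1024),  # too large to send inline
]
for problem in validate_images(batch):
    print(problem)
```

Since the quoted limits vary by deployment, keeping them as named constants makes it easy to adjust them to whatever your own product surface documents.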

Example benchmark contrasts

Visual reasoning (ARC-AGI-2): Gemini 3 Pro shows large gains vs Gemini 2.5 Pro and outperforms many peers, a signal that Pro’s architecture improvements specifically lift abstract visual reasoning. Gemini 3 Flash scores well on practical multimodal tasks but does not match Pro on the very hardest visual puzzle benchmarks.

How do they compare on raw speed — is Gemini 3 Flash really faster?

Gemini 3 Flash is reported to deliver up to ~3× the throughput, with correspondingly lower latency, compared with prior baselines (statements generally compare Flash to Gemini 2.5 Pro or previous-generation Pro models). That speed advantage is the central selling point of Gemini 3 Flash: give developers “Pro-grade” answers at Flash latency. Early tests show Gemini 3 Flash frequently outperforming Pro on throughput-sensitive tasks (e.g., short coding prompts, chat turn latency) while still scoring competitively on many benchmarks that measure accuracy per unit time.

Tokens, “thinking” tokens and caching

Google differentiates input tokens (what you send), output tokens (what the model returns, including internal “thinking” tokens in some modes) and context caching costs. Flash is optimized to use fewer thinking tokens for many tasks (~30% fewer than 2.5 Pro for comparable tasks), which reduces effective cost per resolved request in many practical scenarios. Pro’s pricing and token usage reflect deeper internal reasoning passes that can increase token usage and cost, especially for very large contexts.
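A short calculation shows how a reduction in thinking tokens feeds through to the bill. This sketch assumes thinking tokens are billed at the output rate, as the description above suggests for some modes. The token counts are hypothetical, and the rate is held constant to isolate the effect of the token count alone.

```python
def billed_output_cost(visible_tokens: int, thinking_tokens: int,
                       usd_per_million_output: float) -> float:
    """Output-side cost when thinking tokens are billed like visible output tokens."""
    return (visible_tokens + thinking_tokens) * usd_per_million_output / 1_000_000


OUTPUT_RATE = 3.00  # Flash preview output price quoted in this article, USD per 1M tokens

# Hypothetical request: 500 visible tokens and 2,000 thinking tokens.
visible, thinking = 500, 2_000
baseline = billed_output_cost(visible, thinking, OUTPUT_RATE)

# Same request with 30% fewer thinking tokens (integer arithmetic avoids float rounding).
reduced = billed_output_cost(visible, thinking * 70 // 100, OUTPUT_RATE)

saving = 1 - reduced / baseline
print(f"baseline ${baseline:.4f}, reduced ${reduced:.4f}, saving {saving:.0%}")
```

The saving on the output bill comes out smaller than 30% because visible tokens are unaffected; the more a workload is dominated by thinking tokens, the closer the saving gets to the full 30%.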

How to interpret “faster” in practice

Interactive chat: Gemini 3 Flash will feel snappier; use it for conversational UIs where user experience depends on sub-second responses.

Large, compute-heavy jobs: For long, compute-heavy chains of thought where thinking tokens accumulate, Gemini 3 Pro’s deeper reasoning may require more compute and thus higher latency. In some agentic scenarios Pro’s internal extra passes (e.g., Deep Think modes) may purposely take longer to reach higher-quality answers.
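When comparing "faster" for your own workload, median and tail latency tell you more than a single timing. Below is a minimal measurement sketch using only the standard library. `fake_request` is a stand-in stub, not a real API call; you would replace it with your own function that sends one request to the model you are testing.

```python
import math
import time
from typing import Callable, List


def time_calls(fn: Callable[[], object], runs: int) -> List[float]:
    """Call fn() `runs` times and return the wall-clock seconds taken by each call."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return samples


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile (p between 0 and 100) of a non-empty sample list."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def fake_request() -> None:
    """Stand-in for a real model call; replace with your own client code."""
    time.sleep(0.001)


latencies = time_calls(fake_request, runs=20)
print(f"p50: {percentile(latencies, 50) * 1000:.1f} ms")
print(f"p95: {percentile(latencies, 95) * 1000:.1f} ms")
```

Running the same harness against both models with identical prompts gives a like-for-like comparison; for chat UIs, time to first streamed token is often the more relevant number than total completion time.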

What are real-world use-cases and recommendations?

Pick Gemini 3 Flash if you need:

- High-throughput, low-latency interactive chat (consumer apps, support bots, conversational search).

- Cheap, fast multimodal summarization (video, image sets) where response speed and throughput matter more than the absolute top tier of multi-step reasoning.

- Bulk A/B testing, in-product assistants, and coding autocomplete where short iterations per call dominate.

Pick Gemini 3 Pro if you need:

- Cutting-edge scientific Q&A, math/physics problem solving where graduate-level reliability is required.

- Agentic systems that must operate terminals, perform tooling steps, run and debug code, or orchestrate multi-step toolchains (Pro’s Terminal-Bench strengths matter here).

- Workloads where the incremental improvement in accuracy or non-verbal reasoning is worth the increased token cost and latency.

Hybrid deployment pattern (practical best practice)

Many production teams adopt dual-model strategies:

- Front door = Gemini 3 Flash: serve most interactive users with Flash for responsiveness and cost control.

- Escalate = Pro: route long-form research requests, specialized agent runs or “escalations” to Pro, possibly after an initial Flash pass has scoped the problem. This pattern balances cost, latency and accuracy.
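The front-door/escalation pattern above can be sketched as a small routing function. This is an illustrative outline only: the `Request` fields, the `flash_confidence` score from an initial Flash pass, and the 0.7 threshold are all hypothetical, and a real router would be tuned against your own traffic. The model names are the preview identifiers quoted earlier in this article.

```python
from dataclasses import dataclass
from typing import Optional

FLASH = "gemini-3-flash-preview"
PRO = "gemini-3-pro-preview"


@dataclass
class Request:
    """Hypothetical request metadata used for routing decisions."""
    long_form_research: bool = False
    specialized_agent_run: bool = False
    # Hypothetical self-assessed score (0..1) from an initial Flash scoping pass.
    flash_confidence: Optional[float] = None


def choose_model(req: Request, min_confidence: float = 0.7) -> str:
    """Default to Flash; escalate to Pro for the request types named above."""
    if req.long_form_research or req.specialized_agent_run:
        return PRO
    if req.flash_confidence is not None and req.flash_confidence < min_confidence:
        return PRO  # the Flash pass scoped the problem and flagged it as hard
    return FLASH


print(choose_model(Request()))                         # ordinary interactive turn
print(choose_model(Request(long_form_research=True)))  # explicit escalation
print(choose_model(Request(flash_confidence=0.4)))     # escalated after a Flash pass
```

Keeping the routing rule in one small function makes it straightforward to log every escalation and to revisit the threshold as pricing or model behavior changes.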

Conclusion

Gemini 3 Flash and Gemini 3 Pro are not simply “faster vs. smarter” in a pure binary sense — they are engineered tradeoffs on the speed/latency, cost, and reasoning axes. Flash advances the practical frontier for interactive, high-throughput workloads by offering much of Gemini 3’s reasoning capability at a fraction of the cost and latency; Pro preserves and extends Gemini’s research-grade reasoning ceiling, multimodal fidelity, and enterprise
