Gateway vs Direct API: Their Respective Use Cases

What Does an LLM Gateway Actually Do?

An LLM Gateway serves as an intermediary layer that centralises decisions which applications would otherwise have to make repeatedly.

In practice, the Gateway typically handles:

- Cross-vendor behaviour standardisation
- Routing, fallback, and model selection logic
- Prompt and policy execution
- Usage tracking and cost attribution
- Observability, auditing, and safeguards

The key distinction lies not in capability, but in intent.

The Gateway is designed as infrastructure. Direct APIs are consumed as dependencies.
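As a rough illustration of the responsibilities listed above, the following minimal sketch shows ordered fallback across providers plus per-caller usage tracking. Every name here (`Gateway`, `Provider`, `complete`, the stub vendors) is hypothetical and invented for illustration; no real vendor SDK is called, and a production gateway would also need timeouts, policy checks, and observability.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical provider signature: takes a prompt, returns completion text.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider in the route has failed."""


@dataclass
class Gateway:
    # Ordered route: the first provider is preferred, the rest are fallbacks.
    route: List[Tuple[str, Provider]]
    # Number of successful requests per calling service.
    usage: Dict[str, int] = field(default_factory=dict)

    def complete(self, prompt: str, caller: str) -> str:
        errors = []
        for name, provider in self.route:
            try:
                result = provider(prompt)
            except Exception as exc:
                # Record the failure and fall through to the next provider.
                errors.append(f"{name}: {exc}")
                continue
            self.usage[caller] = self.usage.get(caller, 0) + 1
            return result
        raise AllProvidersFailed("; ".join(errors))


# Stub providers standing in for real vendor clients (assumptions).
def flaky_vendor(prompt: str) -> str:
    raise TimeoutError("upstream timeout")


def stable_vendor(prompt: str) -> str:
    return f"echo: {prompt}"


gateway = Gateway(route=[("primary", flaky_vendor), ("secondary", stable_vendor)])
reply = gateway.complete("hello", caller="search-service")
```

The point of the sketch is placement rather than sophistication: the fallback order and the usage counter live in one component, so individual services do not each reimplement them.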

Core Trade-off: Simplicity vs Centralised Control

Direct APIs and gateways are not mutually exclusive. Choosing between them is a trade-off between local simplicity and system-level control.

Direct APIs optimise for:

- Minimal abstraction
- Lower initial complexity
- Faster iteration in small teams

Gateways optimise for:

- Cross-service consistency
- Explicit reliability guarantees
- Centralised cost and policy control

Neither approach is inherently superior. Under the wrong conditions, each can become costly.

When Direct APIs Are Typically the Right Choice

Direct integration generally makes sense when:

- You rely on a single vendor or model lineage
- A single team owns the entire LLM surface
- Failures are non-critical or easily tolerated
- Cost tracking does not require granularity
- Prompt changes are localised and infrequent

In these scenarios, the overhead introduced by adding a Gateway may outweigh its value. Premature abstraction slows teams down.

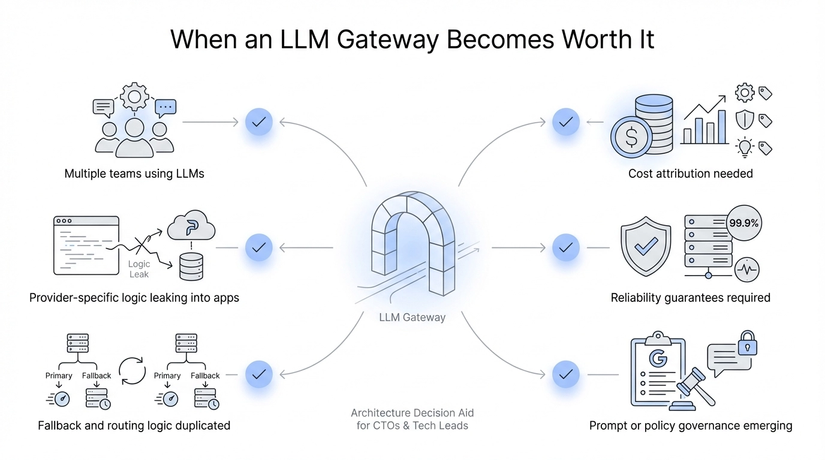

When Does a Gateway Become Cost-Effective?

A Gateway often demonstrates its value once complexity exceeds certain thresholds. Common indicators include:

- Multiple teams or services rely on LLMs
- Vendor-specific behaviour leaks into product code
- Routing or fallback logic is duplicated
- Prompt governance or policy enforcement becomes necessary
- Costs or usage require attribution beyond "overall expenditure"
- Reliability guarantees become critical

At this point, the Gateway ceases to be "additional infrastructure". It becomes a means of reducing coordination and cognitive burden.
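To make "attribution beyond overall expenditure" concrete, here is a small sketch that rolls usage records up by team and feature. The record fields, the function names, and the per-token prices are all assumptions chosen for illustration; real pricing varies by vendor and model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class UsageRecord:
    team: str
    feature: str
    input_tokens: int
    output_tokens: int


# Hypothetical prices in USD per 1,000 tokens (not any vendor's real rates).
PRICE_PER_1K_INPUT = 0.5
PRICE_PER_1K_OUTPUT = 1.5


def cost_of(record: UsageRecord) -> float:
    """Cost of a single request under the assumed prices."""
    return (
        record.input_tokens * PRICE_PER_1K_INPUT
        + record.output_tokens * PRICE_PER_1K_OUTPUT
    ) / 1000


def attribute_costs(records: Iterable[UsageRecord]) -> Dict[Tuple[str, str], float]:
    """Sum cost per (team, feature) pair instead of one global total."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for record in records:
        totals[(record.team, record.feature)] += cost_of(record)
    return dict(totals)


records = [
    UsageRecord("search", "autocomplete", 1000, 1000),
    UsageRecord("search", "autocomplete", 2000, 0),
    UsageRecord("support", "summaries", 0, 2000),
]
totals = attribute_costs(records)
```

A roll-up like this is only possible if every request carries team and feature labels, which is much easier to enforce at a single entry point than across many direct integrations.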

Decision Checklist

If you answer "yes" to three or more questions, a Gateway warrants evaluation:

- Do multiple services implement similar retry or degradation logic?
- Has vendor-specific behaviour leaked into application code?
- Do prompt or policy changes require coordinated deployment?
- Is cost attribution needed at feature or team level?
- Could vendor outages impact multiple critical paths?

If not, direct APIs may remain the simpler, and better, option.
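The checklist can be expressed as a small scoring function. This is only a sketch of the "three or more yes answers" rule stated above; the question keys and the function name are hypothetical labels, and the result is a prompt for evaluation, not a verdict.

```python
from typing import Mapping

# Short keys standing in for the five checklist questions (names are assumptions).
CHECKLIST = (
    "duplicated_retry_or_degradation_logic",
    "vendor_behaviour_in_application_code",
    "prompt_changes_need_coordinated_deploys",
    "cost_attribution_by_feature_or_team",
    "vendor_outage_hits_multiple_critical_paths",
)

# Threshold taken from the checklist: three or more "yes" answers.
YES_THRESHOLD = 3


def gateway_warrants_evaluation(answers: Mapping[str, bool]) -> bool:
    """Return True when at least YES_THRESHOLD checklist items are answered yes.

    Unanswered questions count as "no".
    """
    yes_count = sum(1 for question in CHECKLIST if answers.get(question, False))
    return yes_count >= YES_THRESHOLD


small_team = {"vendor_behaviour_in_application_code": True}
growing_org = {
    "duplicated_retry_or_degradation_logic": True,
    "cost_attribution_by_feature_or_team": True,
    "vendor_outage_hits_multiple_critical_paths": True,
}
small_team_result = gateway_warrants_evaluation(small_team)
growing_org_result = gateway_warrants_evaluation(growing_org)
```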

Once a Gateway begins to make sense, the harder decision is which abstraction strategy suits your constraints: managed routing, self-hosted proxies, in-house development, or managed services. See https://evolink.ai/blog/openrouter-vs-litellm-vs-build-vs-managed

Common Anti-Patterns

Teams frequently struggle in the following scenarios:

- Introducing a Gateway without clear ownership
- Treating the Gateway as a "thin proxy" while expecting infrastructure-level guarantees
- Dynamically routing traffic without establishing performance benchmarks
- Centralising control without adding observability

Gateways amplify both good and bad design decisions.

The Connection to Wrappers

Most Gateways do not emerge fully formed.

They evolve from Wrappers that:

- Accumulate retry and routing logic
- Centralise prompts and policies
- Become dependencies for multiple teams

The distinction lies in intentional design.

Wrappers emerge passively. Gateways are purposefully built.
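The kind of helper that tends to start this evolution looks something like the sketch below: a retry function with exponential backoff that one team writes and others then copy or import. The function name, the default values, and the injectable `sleep` parameter are assumptions made for illustration.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retry(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Invoke `call`, retrying on any exception with exponential backoff.

    The delay doubles after each failure: base_delay, 2 * base_delay, and so on.
    The final failure is re-raised to the caller.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


# Stub call that fails twice before succeeding (stands in for a vendor request).
state = {"calls": 0}


def flaky_call() -> str:
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


recorded_delays: List[float] = []
result = call_with_retry(flaky_call, sleep=recorded_delays.append)
```

Nothing in this helper is wrong in isolation. The risk described above appears once several services depend on it and routing, prompts, and policy logic get added without anyone deciding that it has become shared infrastructure.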

Conclusion

Direct APIs are not shortcuts. Gateways are not over-engineering.

They are tools optimised for different stages of system complexity.

The true error lies not in choosing wrongly, but in failing to recognise when the trade-offs have shifted.

Understanding this inflection point is key to transforming LLM integration from temporary code into sustainable infrastructure.
